CNN: Using Keras Tuner to Find the Best Number of Hidden Layers and Neurons

Introduction:

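Keras Tuner is an open-source Python library for optimizing the hyperparameters of Keras models. It runs automated search algorithms to find the hyperparameter combination that gives the best model performance. Keras Tuner ships with several built-in search algorithms, such as random search, grid search, and Bayesian optimization, and it also supports custom search spaces and custom search algorithms. With Keras Tuner you can optimize a model more easily and save much of the time and effort that manual tuning takes.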
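To make "automated search" concrete, here is a toy sketch in plain Python (not Keras Tuner's internals) contrasting grid search, which tries every combination, with random search, which samples a fixed budget of combinations. The search space and the `score` function are hypothetical stand-ins for "train the model and read its validation accuracy".

```python
import itertools
import random

# Hypothetical search space: two hyperparameters with a few discrete values each.
SPACE = {
    "units": [32, 64, 128, 256],
    "n_layers": [1, 2, 3, 4],
}

def score(config):
    # Hypothetical stand-in for training a model and returning validation accuracy.
    # It simply prefers 128 units and 2 layers (best possible score is 0).
    return -abs(config["units"] - 128) / 128 - abs(config["n_layers"] - 2)

def grid_search(space):
    # Try every combination (4 * 4 = 16 here) and keep the best one.
    keys = list(space)
    configs = [dict(zip(keys, values)) for values in itertools.product(*space.values())]
    return max(configs, key=score), len(configs)

def random_search(space, max_trials, seed=0):
    # Sample max_trials configurations at random and keep the best one.
    rng = random.Random(seed)
    configs = [{k: rng.choice(v) for k, v in space.items()} for _ in range(max_trials)]
    return max(configs, key=score), len(configs)

print(grid_search(SPACE))
print(random_search(SPACE, max_trials=5))
```

Grid search cost grows with the product of all value counts, while random search cost is fixed by `max_trials`, which is why random search is the usual starting point for large spaces.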

Data:

from tensorflow.keras.datasets import fashion_mnist

(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

'''
Label   Description
0       T-shirt/top
1       Trouser
2       Pullover
3       Dress
4       Coat
5       Sandal
6       Shirt
7       Sneaker
8       Bag
9       Ankle boot
'''
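The string above is the label legend. Keeping it as a list lets you index it directly with an integer label; `CLASS_NAMES` and `label_name` are names chosen here for illustration.

```python
# Fashion-MNIST label legend, indexed by the integer label (0-9).
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_name(label):
    """Return the human-readable name for an integer label such as y_test[0]."""
    return CLASS_NAMES[int(label)]  # int() also accepts NumPy integer labels

print(label_name(9))  # Ankle boot
```

For example, `plt.title(label_name(y_test[0]))` would put the class name above the plotted image.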

import matplotlib.pyplot as plt

%matplotlib inline

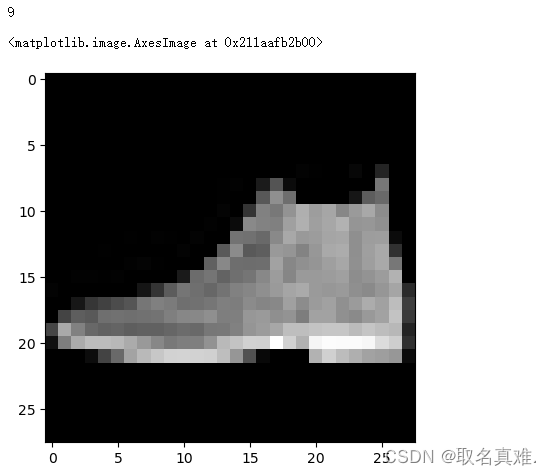

print(y_test[0])

plt.imshow(x_test[0], cmap="gray")

# Each image has 1 channel (grayscale); a color image would have 3, one each for red, green and blue

Modeling:

# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1), the shape Conv2D expects
x_train = x_train.reshape(-1, 28, 28, 1)

x_test = x_test.reshape(-1, 28, 28, 1)

from tensorflow import keras

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense, Flatten, Activation

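import os

# Work around the "OMP: Error #15" crash from duplicate OpenMP runtimes (common with conda on macOS)
os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"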

model = keras.models.Sequential()

model.add(Conv2D(32, (3, 3), input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(32, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten()) # this converts our 3D feature maps to 1D feature vectors

model.add(Dense(10))

model.add(Activation("softmax"))

model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
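The `sparse_categorical_crossentropy` loss fits here because `y_train` holds integer labels (0 to 9) rather than one-hot vectors. For a single image it is the negative log of the probability the softmax assigns to the true class, which equals what categorical cross-entropy gives with a one-hot label. A plain-Python sketch for one sample (Keras additionally averages over the batch); the probabilities are made up for illustration.

```python
import math

def sparse_categorical_crossentropy(probs, label):
    # probs: softmax output for one image (10 probabilities summing to 1).
    # label: the integer class, e.g. a value from y_train.
    return -math.log(probs[label])

def categorical_crossentropy(probs, one_hot):
    # Same loss with the label given as a one-hot vector; only the true class contributes.
    return -sum(t * math.log(p) for t, p in zip(one_hot, probs) if t)

probs = [0.02] * 9 + [0.82]  # hypothetical softmax output, most mass on class 9
one_hot = [0] * 9 + [1]      # label 9 ("Ankle boot") as a one-hot vector

print(sparse_categorical_crossentropy(probs, 9))
print(categorical_crossentropy(probs, one_hot))
```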

model.fit(x_train, y_train, batch_size=64, epochs=1, validation_data=(x_test, y_test))
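As a sanity check on this architecture, the output shapes and parameter counts can be worked out by hand. With the Keras defaults (`padding='valid'`, stride 1), a 3×3 convolution shrinks each side by 2, a 2×2 max pool halves it (rounding down), and a conv layer has (kernel_h × kernel_w × in_channels + 1) × filters weights. The helper names below are hypothetical; the arithmetic is meant to line up with the totals `model.summary()` prints.

```python
def conv_out(size, kernel=3):
    # 'valid' padding, stride 1: each spatial side shrinks by kernel - 1.
    return size - kernel + 1

def pool_out(size, pool=2):
    # Max pooling with stride equal to the pool size, rounding down.
    return size // pool

def conv_params(in_ch, filters, kernel=3):
    # One kernel x kernel x in_ch weight tensor per filter, plus one bias per filter.
    return (kernel * kernel * in_ch + 1) * filters

def baseline_cnn(side=28, channels=1, filters=32, classes=10):
    params = conv_params(channels, filters)   # first Conv2D(32, (3, 3))
    side = pool_out(conv_out(side))           # 28 -> 26 -> 13
    params += conv_params(filters, filters)   # second Conv2D(32, (3, 3))
    side = pool_out(conv_out(side))           # 13 -> 11 -> 5
    flat = side * side * filters              # Flatten: 5 * 5 * 32 features
    params += (flat + 1) * classes            # Dense(10) weights + biases
    return flat, params

print(baseline_cnn())  # (800, 17578)
```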

Keras Tuner:

# Older releases were imported as `kerastuner`; current keras-tuner releases use `keras_tuner`
from keras_tuner import RandomSearch

from keras_tuner import HyperParameters

def build_model(hp):  # the tuner passes in a HyperParameters object as hp
    model = keras.models.Sequential()

    # Number of filters in the first conv layer: 32, 64, ..., 256
    model.add(Conv2D(hp.Int('input_units',
                            min_value=32,
                            max_value=256,
                            step=32), (3, 3), input_shape=x_train.shape[1:]))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))

    # Vary the number of extra conv layers (1 to 4) and the number of filters in each
    for i in range(hp.Int('n_layers', 1, 4)):
        model.add(Conv2D(hp.Int(f'conv_{i}_units',
                                min_value=32,
                                max_value=256,
                                step=32), (3, 3)))
        model.add(Activation('relu'))

    model.add(Flatten())
    model.add(Dense(10))
    model.add(Activation("softmax"))

    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])

    return model
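Because `build_model` draws `n_layers` first and only then creates one `conv_{i}_units` hyperparameter per layer, the search space is conditional. Each `hp.Int(..., min_value=32, max_value=256, step=32)` can take 8 values, so the number of distinct architectures is 8 × (8 + 8² + 8³ + 8⁴). The sketch below does that count and also checks the spatial size reaching `Flatten` for each depth: the loop adds 3×3 convolutions without pooling, so the 13×13 map after the first pool shrinks by 2 per layer and stays positive even at 4 layers. The function names are hypothetical.

```python
def int_choices(min_value, max_value, step):
    # Values an hp.Int(min_value=..., max_value=..., step=...) can take (max is inclusive).
    return list(range(min_value, max_value + 1, step))

UNITS = int_choices(32, 256, 32)  # [32, 64, ..., 256]
N_LAYERS = int_choices(1, 4, 1)   # [1, 2, 3, 4]

def search_space_size():
    # input_units choices times the sum over depths of (choices per conv layer) ** depth.
    return len(UNITS) * sum(len(UNITS) ** n for n in N_LAYERS)

def final_side(n_layers, side=28):
    side = (side - 2) // 2        # first 3x3 Conv2D then 2x2 max pool: 28 -> 26 -> 13
    return side - 2 * n_layers    # each looped 3x3 Conv2D removes 2 pixels per side

print(len(UNITS), search_space_size())    # 8 37440
print([final_side(n) for n in N_LAYERS])  # [11, 9, 7, 5]
```

With `max_trials=1`, the tuner trains just one of these 37,440 architectures, so that setting works as a smoke test rather than a real search.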

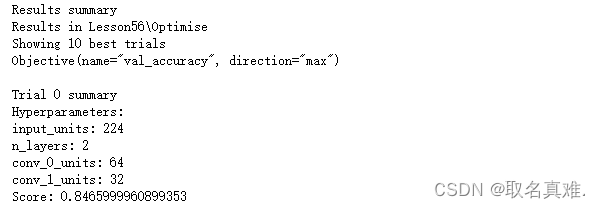

tuner = RandomSearch(
    build_model,
    objective='val_accuracy',
    max_trials=1,  # how many model variations (hyperparameter combinations) to test
    executions_per_trial=1,  # how many times to train each variation (the same model can score differently across runs)
    directory='Lesson56',
    project_name='Optimise')
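Conceptually, each random-search trial picks a value for every hyperparameter that `build_model` asks for. The plain-Python sketch below is a simplified model of that idea for this particular space, not KerasTuner's actual oracle: the `sample_config` helper is hypothetical, it draws `n_layers` before the per-layer filter counts it controls, and it reuses the hyperparameter names from `build_model`.

```python
import random

UNITS = list(range(32, 257, 32))  # same values as hp.Int(min_value=32, max_value=256, step=32)

def sample_config(rng):
    # Hypothetical simplified sampler: draw each hyperparameter uniformly at random,
    # and only draw conv_{i}_units for the layers that n_layers actually creates.
    config = {"input_units": rng.choice(UNITS), "n_layers": rng.randint(1, 4)}
    for i in range(config["n_layers"]):
        config[f"conv_{i}_units"] = rng.choice(UNITS)
    return config

rng = random.Random(42)
trials = [sample_config(rng) for _ in range(3)]  # roughly what max_trials=3 would explore
for t in trials:
    print(t)
```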

tuner.search(x=x_train,
             y=y_train,
             verbose=1,  # set explicitly because the Jupyter notebook output was getting messy
             epochs=1,
             batch_size=64,
             # callbacks=[tensorboard],  # callbacks such as TensorBoard go here
             validation_data=(x_test, y_test))

tuner.results_summary()