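Kubernetes in Depth: Using Controllers

1 What Is a Controller

Official documentation:

Workload Management | Kubernetes

A controller is another way of managing Pods:

- Standalone Pods: if such a Pod exits or is shut down unexpectedly, it is not recreated

- Controller-managed Pods: throughout the controller's lifetime, it always maintains the desired number of Pod replicas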

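A Pod controller is an intermediate layer for managing Pods. With a controller you only declare how many Pods you want and what they should look like; the controller creates Pods that meet those requirements and keeps each of them in the desired state. If a Pod fails while running, the controller recreates it according to the specified policy.

When a controller object is created, its desired state is stored in etcd through the API server. The controller itself (running in kube-controller-manager) watches that desired state through the API server and compares it with the current state of the Pods. Whenever the two differ, it acts immediately to bring the actual state back in line with the desired one. This compare-and-converge cycle is called reconciliation.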
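The compare-and-converge cycle described above can be sketched as a toy Python model. It only tracks a Pod count; the function names `reconcile` and `control_loop` are hypothetical, and a real controller watches objects through the API server and creates or deletes Pods via API calls rather than adjusting an integer.

```python
# Toy model of reconciliation; every name here is hypothetical.
def reconcile(desired: int, observed: int) -> tuple[str, int]:
    """Decide which action moves the observed Pod count toward the desired one."""
    if observed < desired:
        return ("create", desired - observed)
    if observed > desired:
        return ("delete", observed - desired)
    return ("noop", 0)


def control_loop(desired: int, observed: int, max_rounds: int = 10) -> int:
    """Run reconcile passes until nothing is left to do; return the final count."""
    for _ in range(max_rounds):
        action, n = reconcile(desired, observed)
        if action == "create":
            observed += n
        elif action == "delete":
            observed -= n
        else:
            break
    return observed


pods = control_loop(desired=2, observed=0)     # the controller creates 2 Pods
pods -= 1                                      # simulate one Pod crashing
pods = control_loop(desired=2, observed=pods)  # the controller restores the count
print(pods)  # 2
```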

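2 Common Controller Types

| Controller | Purpose |
|---|---|
| Replication Controller | The original Pod controller; legacy, superseded by ReplicaSet |
| ReplicaSet | Ensures that a specified number of Pod replicas are running at any given time |
| Deployment | Provides declarative updates for Pods and ReplicaSets |
| DaemonSet | Ensures that all (or selected) nodes run a copy of a Pod |
| StatefulSet | The workload API object used to manage stateful applications |
| Job | Runs batch work to completion: the task runs once, and the Job ensures that one or more of its Pods terminate successfully |
| CronJob | Creates Jobs on a time-based schedule |
| HPA (Horizontal Pod Autoscaler) | Automatically adjusts the number of Pods of a scalable workload (such as a Deployment) based on resource utilization, i.e. horizontal Pod autoscaling |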

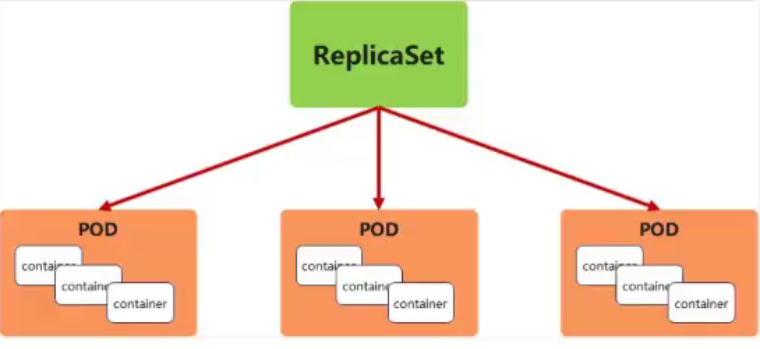

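3 The ReplicaSet Controller

3.1 ReplicaSet Features

- ReplicaSet is the next generation of Replication Controller and is the officially recommended choice

- The only difference between ReplicaSet and Replication Controller is selector support: ReplicaSet also supports the newer set-based selector requirements

- A ReplicaSet ensures that a specified number of Pod replicas are running at any given time

- Although ReplicaSets can be used on their own, today they are mainly used by Deployments as the mechanism for orchestrating Pod creation, deletion, and updates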

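3.2 ReplicaSet Fields

| Field | Type | Description |
|---|---|---|
| spec | Object | Detailed specification of the object; the field name is always `spec` |
| spec.replicas | integer | Number of Pods to maintain (defaults to 1) |
| spec.selector | Object | Label query over Pods; the Pods it matches count toward `replicas` |
| spec.selector.matchLabels | map[string]string | Label key/value pairs the selector matches, written as `key: value` |
| spec.template | Object | Pod template: Pod labels, container definitions, and so on |
| spec.template.metadata | Object | Pod metadata |
| spec.template.metadata.labels | map[string]string | Pod labels; they must satisfy `spec.selector` |
| spec.template.spec | Object | Pod specification |
| spec.template.spec.containers | list | List of containers in the Pod |
| spec.template.spec.containers[].name | string | Container name |
| spec.template.spec.containers[].image | string | Container image |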

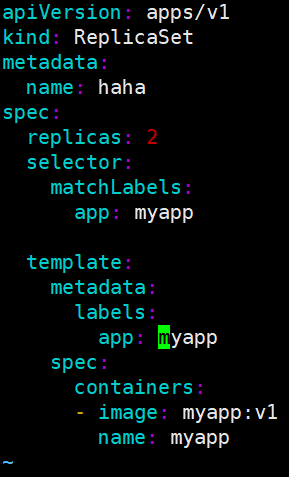
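The requirement that `spec.selector` match `spec.template.metadata.labels` can be checked before applying a manifest. This is a minimal sketch, assuming the manifest has already been parsed into a Python dict; `validate_replicaset` is a hypothetical helper that checks only a few of the rules (it handles `matchLabels` but not `matchExpressions`, and only tests that the name is lowercase), so the API server remains the authority.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics: every selector key/value must be present in labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def validate_replicaset(manifest: dict) -> list[str]:
    """Hypothetical client-side check of a few rules the API server also enforces."""
    errors = []
    name = manifest.get("metadata", {}).get("name", "")
    if name != name.lower():
        errors.append("metadata.name must be lowercase")
    spec = manifest.get("spec", {})
    if spec.get("replicas", 1) < 0:  # replicas defaults to 1
        errors.append("spec.replicas must not be negative")
    selector = spec.get("selector", {}).get("matchLabels", {})
    labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
    if not matches(selector, labels):
        errors.append("spec.selector does not match spec.template.metadata.labels")
    return errors


# The haha ReplicaSet from the example below, as a Python dict.
haha = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "haha"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "myapp"}},
        "template": {
            "metadata": {"labels": {"app": "myapp"}},
            "spec": {"containers": [{"name": "myapp", "image": "myapp:v1"}]},
        },
    },
}
print(validate_replicaset(haha))  # []
```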

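3.3 ReplicaSet Example

[root@K8s-master ~]# kubectl create deployment haha --image myapp:v1 --dry-run=client -o yaml > haha.yml

[root@K8s-master ~]# vim haha.yml

apiVersion: apps/v1
kind: ReplicaSet            # change the kind to ReplicaSet (and drop Deployment-only fields such as strategy)
metadata:
  name: haha                # ReplicaSet name (Pods are named haha-xxxxx); must be lowercase, uppercase letters are rejected
spec:
  replicas: 2               # keep 2 Pods running
  selector:                 # how the ReplicaSet finds its Pods
    matchLabels:            # match by labels
      app: myapp            # select Pods labelled app=myapp
  template:                 # template used to create Pods whenever there are too few replicas
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v1
        name: myapp

#A ReplicaSet matches its Pods by label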

[root@K8s-master ~]# kubectl apply -f haha.yml

replicaset.apps/haha created

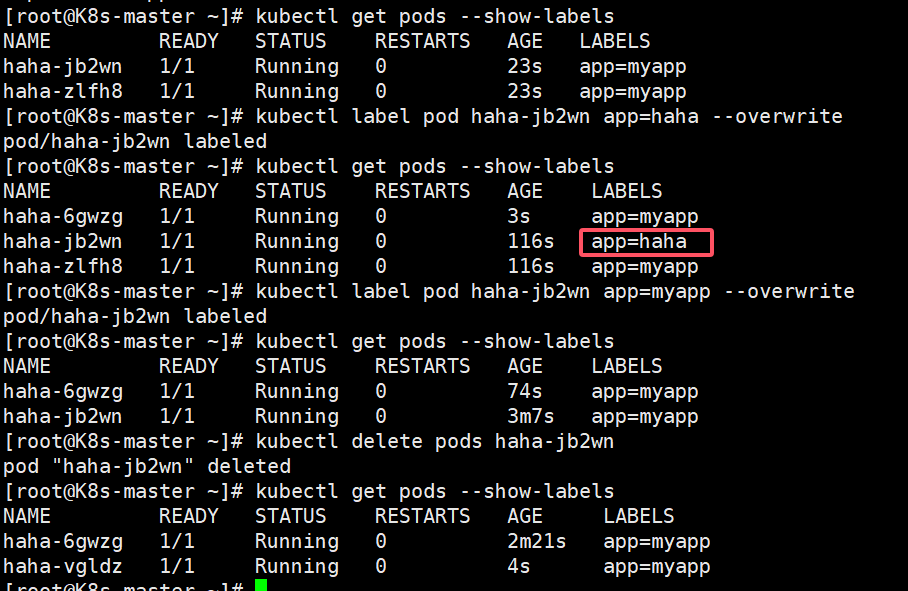

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

haha-jb2wn 1/1 Running 0 23s app=myapp

haha-zlfh8 1/1 Running 0 23s app=myapp

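#Relabel one Pod so that it no longer matches the selector; the ReplicaSet immediately creates a replacement

[root@K8s-master ~]# kubectl label pod haha-jb2wn app=haha --overwrite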

pod/haha-jb2wn labeled

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

haha-6gwzg 1/1 Running 0 3s app=myapp

haha-jb2wn 1/1 Running 0 116s app=haha

haha-zlfh8 1/1 Running 0 116s app=myapp

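#Restore the label: three Pods now match the selector, so the ReplicaSet deletes the surplus one (here haha-zlfh8)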

[root@K8s-master ~]# kubectl label pod haha-jb2wn app=myapp --overwrite

pod/haha-jb2wn labeled

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

haha-6gwzg 1/1 Running 0 74s app=myapp

haha-jb2wn 1/1 Running 0 3m7s app=myapp
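The relabeling experiment above follows directly from label-based selection. Here is a toy sketch, assuming Pods are represented as a name-to-labels dict. The function names and the `haha-newN` Pod names are hypothetical. The surplus Pod is chosen by name order only to keep the example deterministic; the real ReplicaSet controller ranks Pods by other criteria, such as readiness.

```python
from itertools import count


def owned(pods: dict[str, dict[str, str]], selector: dict[str, str]) -> list[str]:
    """Names of the Pods whose labels satisfy the matchLabels selector."""
    return sorted(name for name, labels in pods.items()
                  if all(labels.get(k) == v for k, v in selector.items()))


def reconcile(pods, selector, template_labels, replicas, names):
    """One ReplicaSet-style pass over `pods` (mutated in place)."""
    mine = owned(pods, selector)
    for _ in range(replicas - len(mine)):  # too few: create Pods from the template
        pods[next(names)] = dict(template_labels)
    for name in mine[replicas:]:           # too many: delete the surplus,
        del pods[name]                     # picked by name order for determinism only
    return pods


selector = {"app": "myapp"}
names = (f"haha-new{i}" for i in count(1))  # hypothetical Pod name generator
pods = {"haha-jb2wn": {"app": "myapp"}, "haha-zlfh8": {"app": "myapp"}}

pods["haha-jb2wn"]["app"] = "haha"   # relabel: the Pod leaves the ReplicaSet
reconcile(pods, selector, selector, 2, names)
print(sorted(pods))  # ['haha-jb2wn', 'haha-new1', 'haha-zlfh8']

pods["haha-jb2wn"]["app"] = "myapp"  # restore: three Pods match again
reconcile(pods, selector, selector, 2, names)
print(sorted(pods))  # ['haha-jb2wn', 'haha-new1']
```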

#The ReplicaSet keeps the replica count constant, so Pods self-heal: delete one and a replacement is created

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

haha-6gwzg 1/1 Running 0 74s app=myapp

haha-jb2wn 1/1 Running 0 3m7s app=myapp

[root@K8s-master ~]# kubectl delete pods haha-jb2wn

pod "haha-jb2wn" deleted

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

haha-6gwzg 1/1 Running 0 2m21s app=myapp

haha-vgldz 1/1 Running 0 4s app=myapp

#Clean up

[root@K8s-master ~]# kubectl delete -f haha.yml

replicaset.apps "haha" deleted

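Note: a ReplicaSet cannot manage versions. It has no rolling update or rollback, and changing its Pod template does not affect Pods that already exist.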

四 deployment 控制器

4.1 deployment控制器的功能

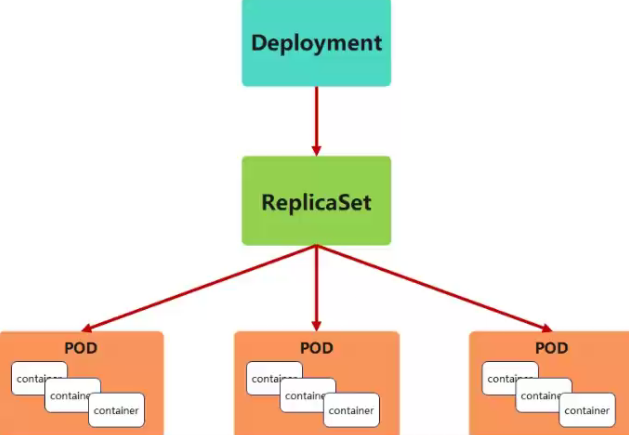

- 为了更好的解决服务编排的问题,kubernetes在V1.2版本开始,引入了Deployment控制器。

- Deployment控制器并不直接管理pod,而是通过管理ReplicaSet来间接管理Pod

- Deployment管理ReplicaSet,ReplicaSet管理Pod

- Deployment 为 Pod 和 ReplicaSet 提供了一个申明式的定义方法

- 在Deployment中ReplicaSet相当于一个版本

典型的应用场景:

- 用来创建Pod和ReplicaSet

- 滚动更新和回滚

- 扩容和缩容

- 暂停与恢复

4.2 Deployment Example

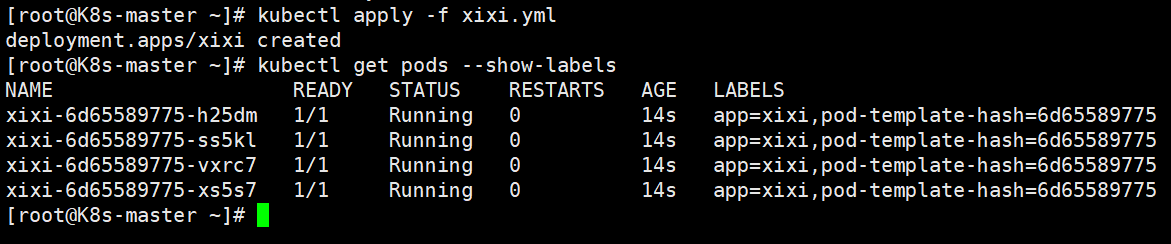

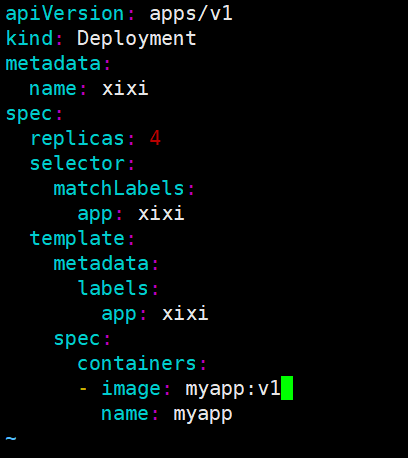

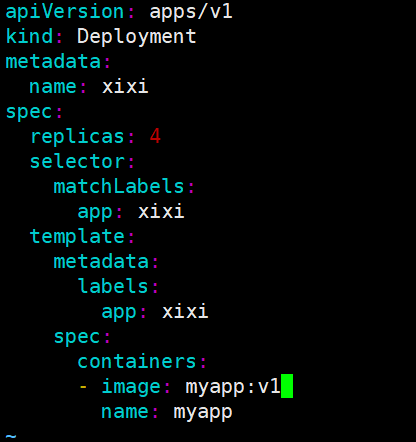

[root@K8s-master ~]# kubectl create deployment xixi --image myapp:v1 --dry-run=client -o yaml > xixi.yml

#Edit xixi.yml so that it reads:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: xixi
spec:
  replicas: 4
  selector:
    matchLabels:
      app: xixi
  template:
    metadata:
      labels:
        app: xixi
    spec:
      containers:
      - image: myapp:v1
        name: myapp

#Create the Pods

[root@K8s-master ~]# kubectl apply -f xixi.yml

deployment.apps/xixi created

#Inspect the Pods

[root@K8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

xixi-6d65589775-h25dm 1/1 Running 0 14s app=xixi,pod-template-hash=6d65589775

xixi-6d65589775-ss5kl 1/1 Running 0 14s app=xixi,pod-template-hash=6d65589775

xixi-6d65589775-vxrc7 1/1 Running 0 14s app=xixi,pod-template-hash=6d65589775

xixi-6d65589775-xs5s7 1/1 Running 0 14s app=xixi,pod-template-hash=6d65589775

4.2.1 Rolling Out a New Version

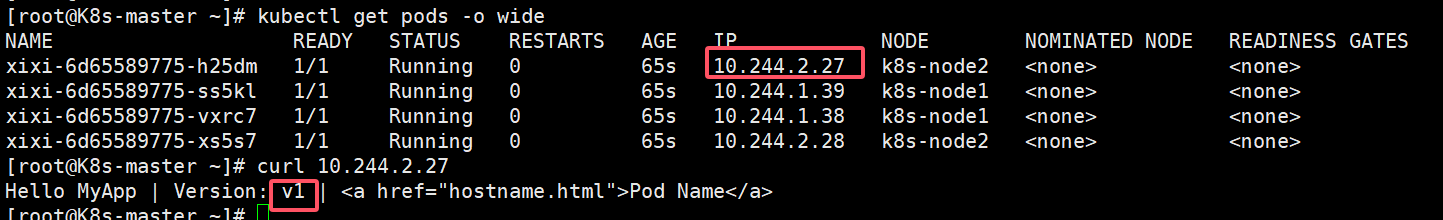

[root@K8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi-6d65589775-h25dm 1/1 Running 0 65s 10.244.2.27 k8s-node2 <none> <none>

xixi-6d65589775-ss5kl 1/1 Running 0 65s 10.244.1.39 k8s-node1 <none> <none>

xixi-6d65589775-vxrc7 1/1 Running 0 65s 10.244.1.38 k8s-node1 <none> <none>

xixi-6d65589775-xs5s7 1/1 Running 0 65s 10.244.2.28 k8s-node2 <none> <none>

#The Pods run version v1 of the container

[root@K8s-master ~]# curl 10.244.2.27

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>


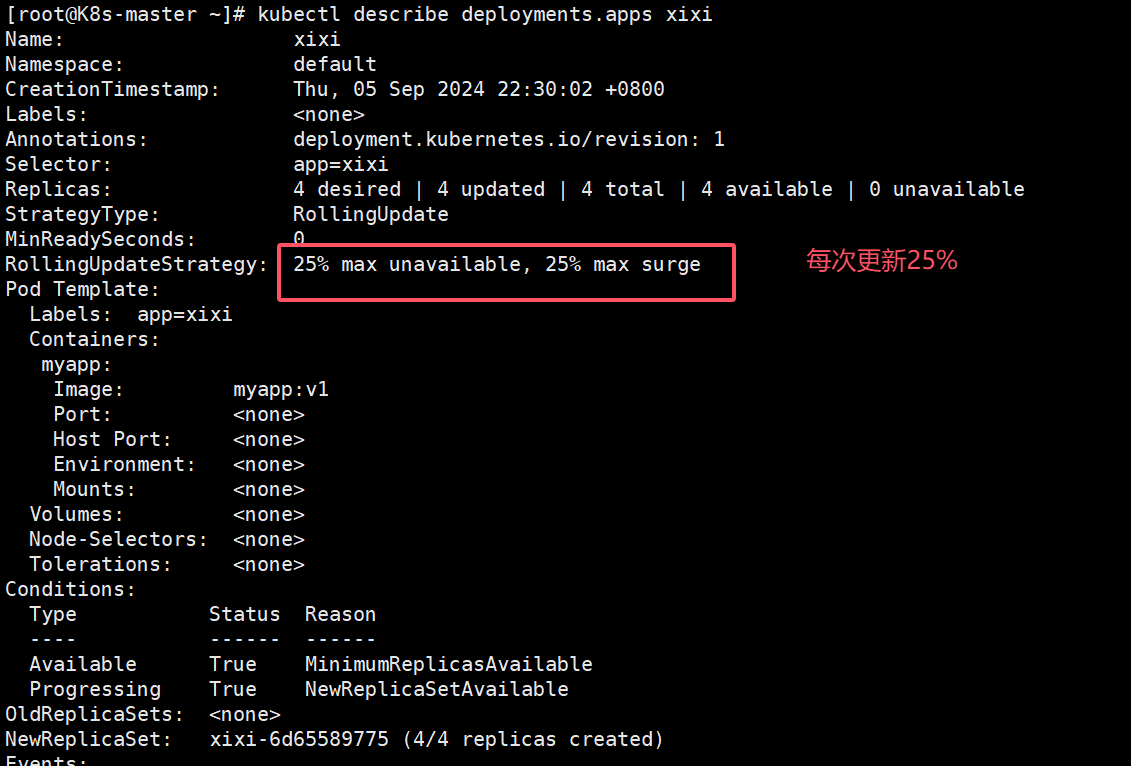

[root@K8s-master ~]# kubectl describe deployments.apps xixi

Name: xixi

Namespace: default

CreationTimestamp: Thu, 05 Sep 2024 22:30:02 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=xixi

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

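RollingUpdateStrategy: 25% max unavailable, 25% max surge #Default: during an update at most 25% of the Pods may be unavailable and at most 25% extra Pods may be created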

Pod Template:

Labels: app=xixi

Containers:

myapp:

Image: myapp:v1

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Node-Selectors: <none>

Tolerations: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: xixi-6d65589775 (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 3m37s deployment-controller Scaled up replica set xixi-6d65589775 to 4
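The `25% max unavailable, 25% max surge` values are percentages of `replicas`. According to the Kubernetes documentation, maxSurge is rounded up and maxUnavailable is rounded down when converted to Pod counts. A small sketch of that conversion follows. The helper names are hypothetical, and the fallback that sets maxUnavailable to 1 when both values resolve to 0 is an assumption about the controller's behaviour, marked as such in the code.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Convert an int or a percentage string such as "25%" to a Pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    if round_up:
        return -(-replicas * percent // 100)  # ceiling division
    return replicas * percent // 100          # floor division


def rolling_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # assumption: guard so that a rollout can always make progress
    return replicas + surge, replicas - unavailable


print(rolling_bounds(4))   # (5, 3): the xixi Deployment above
print(rolling_bounds(10))  # (13, 8)
```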

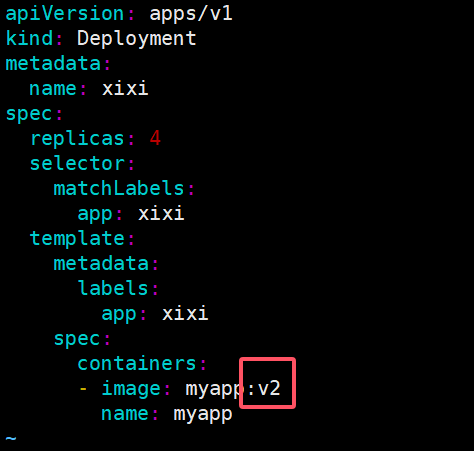

#Update the container image version

[root@K8s-master ~]# vim xixi.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: xixi
spec:
  replicas: 4
  selector:
    matchLabels:
      app: xixi
  template:
    metadata:
      labels:
        app: xixi
    spec:
      containers:
      - image: myapp:v2     # update to v2
        name: myapp

[root@K8s-master ~]# kubectl apply -f xixi.yml

deployment.apps/xixi configured

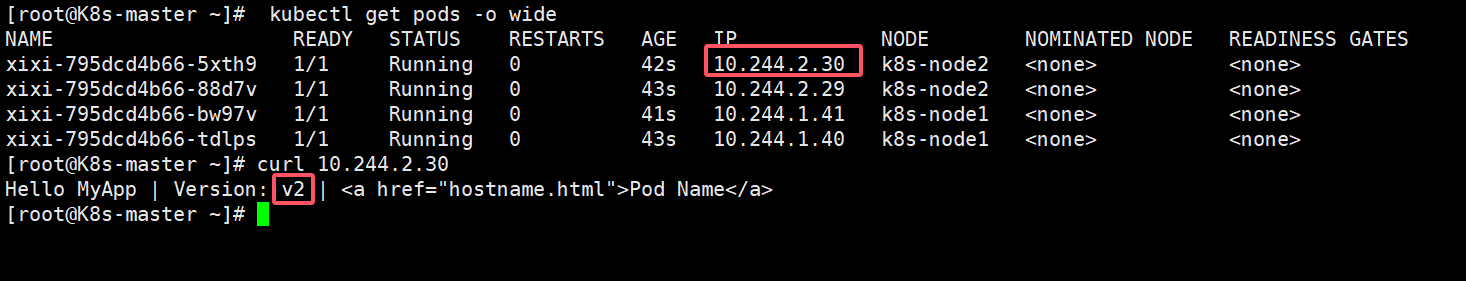

#Verify the update

[root@K8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi-795dcd4b66-5xth9 1/1 Running 0 42s 10.244.2.30 k8s-node2 <none> <none>

xixi-795dcd4b66-88d7v 1/1 Running 0 43s 10.244.2.29 k8s-node2 <none> <none>

xixi-795dcd4b66-bw97v 1/1 Running 0 41s 10.244.1.41 k8s-node1 <none> <none>

xixi-795dcd4b66-tdlps 1/1 Running 0 43s 10.244.1.40 k8s-node1 <none> <none>

[root@K8s-master ~]# curl 10.244.2.30

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>


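An update creates a new ReplicaSet for the new Pod template. The new ReplicaSet creates Pods step by step while the old one is scaled down. When the update completes, the old ReplicaSet is not deleted: it remains with 0 replicas (xixi-6d65589775 below) so that it can be reused for a rollback.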

[root@K8s-master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/xixi-795dcd4b66-5xth9 1/1 Running 0 3m53s

pod/xixi-795dcd4b66-88d7v 1/1 Running 0 3m54s

pod/xixi-795dcd4b66-bw97v 1/1 Running 0 3m52s

pod/xixi-795dcd4b66-tdlps 1/1 Running 0 3m54s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/xixi 4/4 4 4 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/xixi-6d65589775 0 0 0 10m

replicaset.apps/xixi-795dcd4b66 4 4 4 3m54s
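The ReplicaSet bookkeeping above (a new ReplicaSet per Pod template, with the old one kept at 0 replicas) can be modelled in a few lines. This sketch assumes a stand-in hash (SHA-256 over the JSON-encoded template) for the `pod-template-hash` label; Kubernetes computes its own hash differently, and the hypothetical `apply_template` only models the end state of a rollout, not the gradual scaling. It illustrates why the rollback below brings back Pods named `xixi-6d65589775-…`: the same template yields the same hash, so the old ReplicaSet is reused. By default a Deployment keeps 10 old ReplicaSets (`revisionHistoryLimit`).

```python
import hashlib
import json


def template_hash(template: dict) -> str:
    """Hypothetical stand-in for the pod-template-hash label: a short, stable
    digest of the Pod template (Kubernetes uses its own hashing scheme)."""
    blob = json.dumps(template, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()[:10]


def apply_template(replicasets: dict[str, int], template: dict, replicas: int) -> str:
    """End state of a rollout: the ReplicaSet for `template` runs `replicas` Pods,
    every other ReplicaSet is scaled to 0 but kept for rollbacks."""
    current = template_hash(template)
    for name in replicasets:
        replicasets[name] = 0
    replicasets[current] = replicas
    return current


v1 = {"containers": [{"name": "myapp", "image": "myapp:v1"}]}
v2 = {"containers": [{"name": "myapp", "image": "myapp:v2"}]}
rs: dict[str, int] = {}
h1 = apply_template(rs, v1, 4)  # first rollout
h2 = apply_template(rs, v2, 4)  # update: new ReplicaSet at 4, old one kept at 0
h3 = apply_template(rs, v1, 4)  # rollback to v1: same hash, old ReplicaSet reused
print(h1 == h3, rs[h1], rs[h2])  # True 4 0
```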

4.2.2 Rolling Back

[root@K8s-master ~]# vim xixi.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: xixi
spec:
  replicas: 4
  selector:
    matchLabels:
      app: xixi
  template:
    metadata:
      labels:
        app: xixi
    spec:
      containers:
      - image: myapp:v1     # roll back to the previous version
        name: myapp

[root@K8s-master ~]# kubectl apply -f xixi.yml

deployment.apps/xixi configured

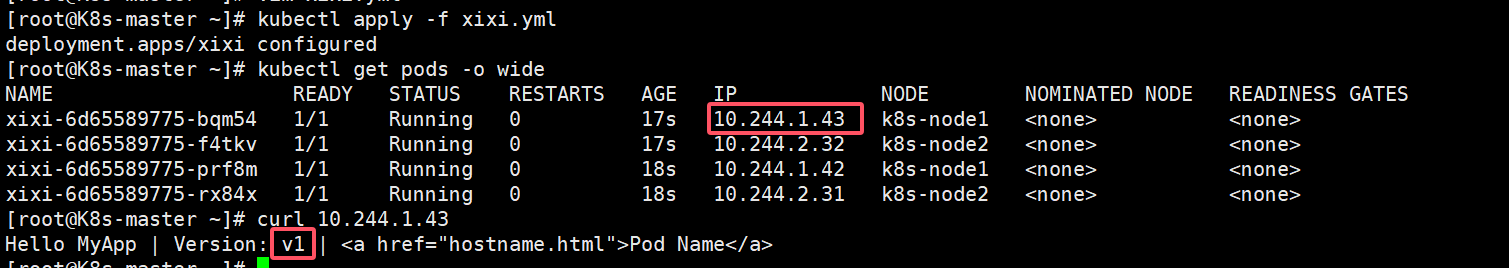

[root@K8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi-6d65589775-bqm54 1/1 Running 0 17s 10.244.1.43 k8s-node1 <none> <none>

xixi-6d65589775-f4tkv 1/1 Running 0 17s 10.244.2.32 k8s-node2 <none> <none>

xixi-6d65589775-prf8m 1/1 Running 0 18s 10.244.1.42 k8s-node1 <none> <none>

xixi-6d65589775-rx84x 1/1 Running 0 18s 10.244.2.31 k8s-node2 <none> <none>

[root@K8s-master ~]# curl 10.244.1.43

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

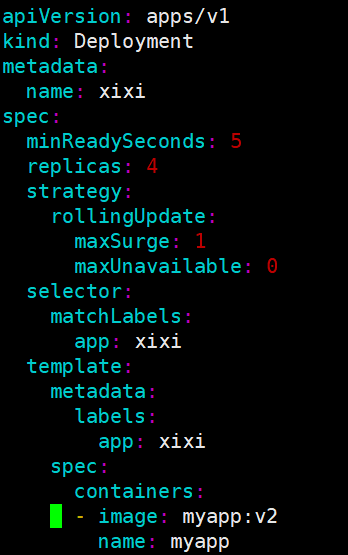

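4.2.3 Rolling Update Strategy

[root@K8s-master ~]# vim xixi.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: xixi
spec:
  minReadySeconds: 5        # a new Pod must stay Ready for 5 s before it counts as available; this paces the rollout
  replicas: 4
  strategy:                 # update strategy
    rollingUpdate:
      maxSurge: 1           # at most 1 Pod above the desired count during the update
      maxUnavailable: 0     # at most 0 Pods below the desired count, i.e. no loss of capacity
  selector:
    matchLabels:
      app: xixi
  template:
    metadata:
      labels:
        app: xixi
    spec:
      containers:
      - image: myapp:v2
        name: myapp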

[root@K8s-master ~]# kubectl apply -f xixi.yml

#Check the result quickly, while the rollout is still in progress

[root@K8s-master ~]# kubectl get all

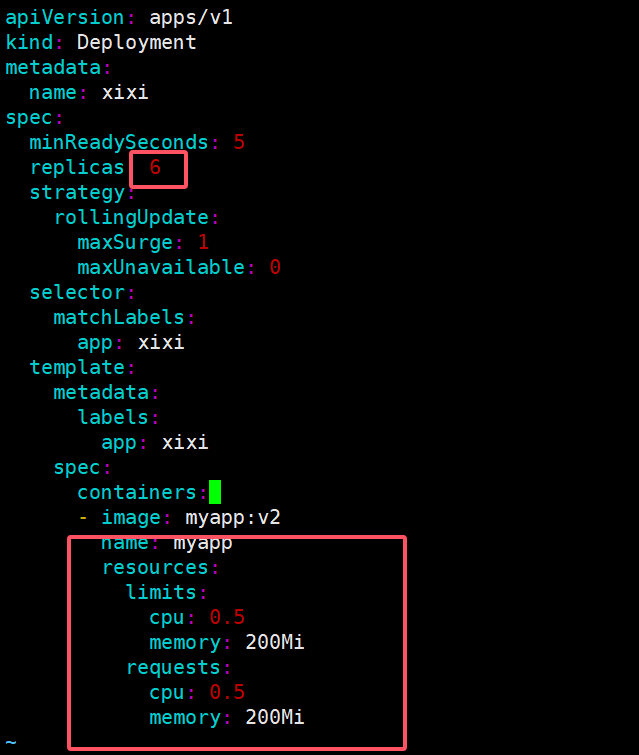
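Because `kubectl get all` has to be run quickly to catch the rollout in progress, an idealized simulation of the same strategy may help. This sketch assumes every new Pod becomes available immediately (it ignores `minReadySeconds` and failures), and `rolling_update` is a hypothetical name. It lists the (old, new) Pod counts after each round for `replicas: 4`, `maxSurge: 1`, `maxUnavailable: 0`.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Idealized rollout: (old Pods, new Pods) after each round, assuming every
    new Pod becomes available immediately (no minReadySeconds, no failures)."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding replicas + maxSurge Pods in total.
        room = replicas + max_surge - (old + new)
        new = min(replicas, new + max(room, 0))
        # Scale down the old ReplicaSet while keeping at least replicas - maxUnavailable Pods.
        surplus = (old + new) - (replicas - max_unavailable)
        old = max(0, old - max(surplus, 0))
        history.append((old, new))
    return history


for step in rolling_update(4, max_surge=1, max_unavailable=0):
    print(step)  # successive lines: (4, 0) (3, 1) (2, 2) (1, 3) (0, 4)
```

With `maxUnavailable: 0` the total never drops below 4, which is why this strategy trades rollout speed for capacity.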

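4.2.4 Pausing and Resuming

In production, a change often touches several fields. If each edit is applied on its own, every one of them immediately triggers a rollout.

What we want is a single rollout once all the changes are in place.

Pausing the Deployment avoids these unnecessary rollouts in production.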
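The pause/resume behaviour can be illustrated with a toy class. Everything in it is hypothetical: it only models the two observations made in the transcript that follows, namely that scaling applies even while the Deployment is paused, and that template changes made while paused roll out as a single new revision after resuming.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDeployment:
    """Hypothetical model of `kubectl rollout pause/resume`; not the real controller."""
    replicas: int
    template: dict
    revision: int = 1
    paused: bool = False
    rolled_out: dict = field(default_factory=dict, init=False)

    def __post_init__(self) -> None:
        self.rolled_out = dict(self.template)  # template of the current revision

    def apply(self, replicas: int, template: dict) -> None:
        self.replicas = replicas               # scaling takes effect even while paused
        self.template = dict(template)
        self._maybe_roll_out()

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False
        self._maybe_roll_out()

    def _maybe_roll_out(self) -> None:
        # A template change creates exactly one new revision, but only when not paused.
        if not self.paused and self.template != self.rolled_out:
            self.rolled_out = dict(self.template)
            self.revision += 1


d = ToyDeployment(replicas=4, template={"image": "myapp:v2"})
d.pause()
d.apply(6, {"image": "myapp:v2", "cpu": "500m", "memory": "200Mi"})
revision_while_paused = d.revision  # still 1, although replicas is already 6
d.resume()                          # the accumulated change rolls out once
print(d.replicas, revision_while_paused, d.revision)  # 6 1 2
```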

#Pause the rollout

[root@K8s-master ~]# kubectl rollout pause deployment xixi

deployment.apps/xixi paused

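#Change the replica count and add resource requests and limits

[root@K8s-master ~]# vim xixi.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: xixi
spec:
  minReadySeconds: 5
  replicas: 6               # scale from 4 to 6
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  selector:
    matchLabels:
      app: xixi
  template:
    metadata:
      labels:
        app: xixi
    spec:
      containers:
      - image: myapp:v2
        name: myapp
        resources:          # CPU and memory limits and requests
          limits:
            cpu: 0.5
            memory: 200Mi
          requests:
            cpu: 0.5
            memory: 200Mi

#Save the file and apply it with kubectl apply -f xixi.yml while the Deployment is still paused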

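#The new replica count takes effect even while paused: tab completion now lists 6 Pods, all still from ReplicaSet xixi-795dcd4b66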

[root@K8s-master ~]# kubectl describe pods xixi-795dcd4b66-

xixi-795dcd4b66-7kxtw xixi-795dcd4b66-7slzs xixi-795dcd4b66-l8r78 xixi-795dcd4b66-tcdvj xixi-795dcd4b66-txclm xixi-795dcd4b66-w9c8t

#But the Pod template change (the new resource settings) did not trigger a rollout: no new revision appears

[root@K8s-master ~]# kubectl rollout history deployment xixi

deployment.apps/xixi

REVISION CHANGE-CAUSE

5 <none>

6 <none>

#After resuming, the pending changes roll out as a single new revision

[root@K8s-master ~]# kubectl rollout resume deployment xixi

deployment.apps/xixi resumed

[root@K8s-master ~]# kubectl rollout history deployment xixi

deployment.apps/xixi

REVISION CHANGE-CAUSE

5 <none>

6 <none>

7 <none>

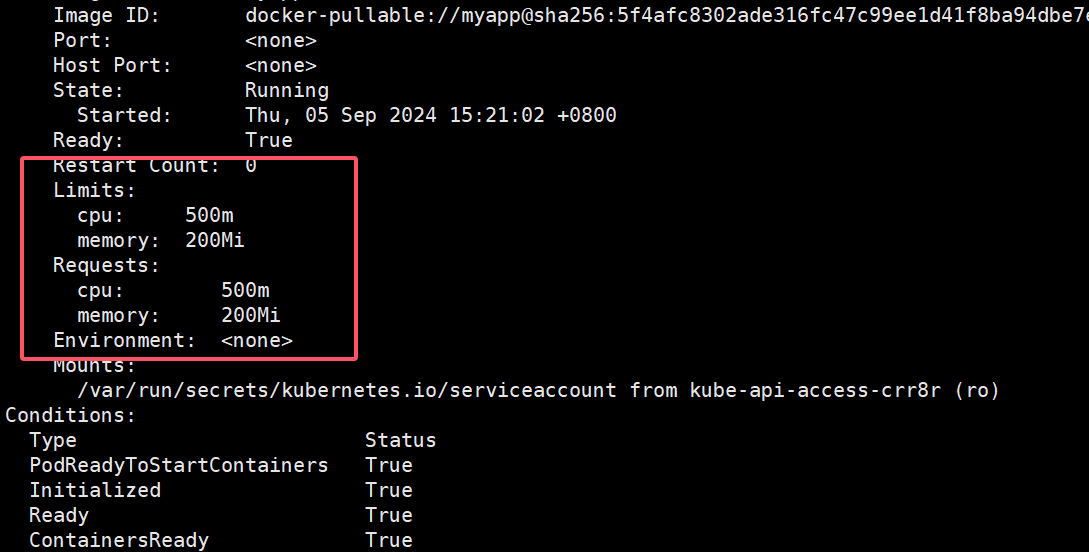

#Check that the new resource limits are in effect

[root@K8s-master ~]# kubectl describe pods xixi-784bf57f98-58rt8

....

Restart Count: 0

Limits:

cpu: 500m

memory: 200Mi

Requests:

cpu: 500m

memory: 200Mi

Environment: <none>

......

#Clean up

[root@K8s-master ~]# kubectl delete -f xixi.yml

deployment.apps "xixi" deleted

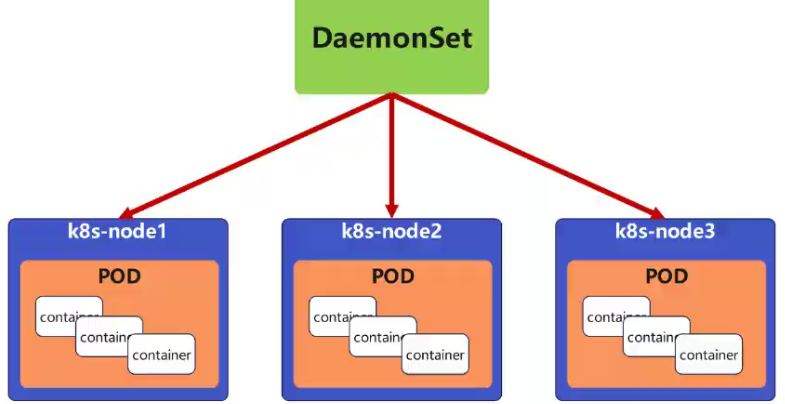

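5 The DaemonSet Controller

5.1 DaemonSet Features

A DaemonSet ensures that all (or some) nodes run a copy of a Pod. When a node joins the cluster, a Pod is added to it; when a node is removed from the cluster, its Pod is garbage-collected. Deleting a DaemonSet deletes all the Pods it created.

Typical uses of a DaemonSet:

- Running a cluster storage daemon on every node, such as glusterd or ceph

- Running a log collection daemon on every node, such as fluentd or logstash

- Running a monitoring daemon on every node, such as Prometheus Node Exporter or the Zabbix agent

- In a simple setup, one DaemonSet covering all nodes is used for each type of daemon

- A more complex setup uses several DaemonSets for a single type of daemon, with different flags and different memory and CPU requests for different hardware types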
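The node-tracking behaviour described above amounts to a set difference between eligible nodes and nodes that already run the DaemonSet's Pod. A minimal sketch, assuming node eligibility (taints, node selectors) has already been decided elsewhere; `plan` and the Pod names are hypothetical.

```python
def plan(nodes: set[str], eligible: set[str], pods_by_node: dict[str, str]):
    """One DaemonSet-style pass: which nodes need a Pod, and which Pods must go."""
    targets = nodes & eligible
    create = sorted(targets - set(pods_by_node))
    delete = sorted(pod for node, pod in pods_by_node.items() if node not in targets)
    return create, delete


nodes = {"k8s-master", "k8s-node1", "k8s-node2"}
eligible = {"k8s-node1", "k8s-node2"}  # assumption: the tainted master is not eligible
pods: dict[str, str] = {}

first = plan(nodes, eligible, pods)    # (['k8s-node1', 'k8s-node2'], [])
pods.update({n: f"xixi-dm-{n}" for n in first[0]})

nodes.add("k8s-node3")                 # a node joins the cluster
eligible.add("k8s-node3")
joined = plan(nodes, eligible, pods)   # (['k8s-node3'], [])
pods.update({n: f"xixi-dm-{n}" for n in joined[0]})

nodes.discard("k8s-node1")             # a node is removed from the cluster
left = plan(nodes, eligible, pods)     # ([], ['xixi-dm-k8s-node1'])
print(first, joined, left)
```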

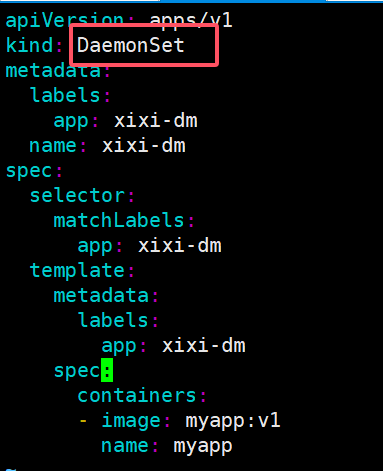

5.2 DaemonSet Example

[root@K8s-master ~]# cp xixi.yml xixi-dm.yml

#Edit xixi-dm.yml so that it reads:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: xixi-dm
  name: xixi-dm
spec:
  selector:
    matchLabels:
      app: xixi-dm
  template:
    metadata:
      labels:
        app: xixi-dm
    spec:
      containers:
      - image: myapp:v1
        name: myapp

#No toleration is set, so no Pod is scheduled on the control-plane node, which carries a NoSchedule taint

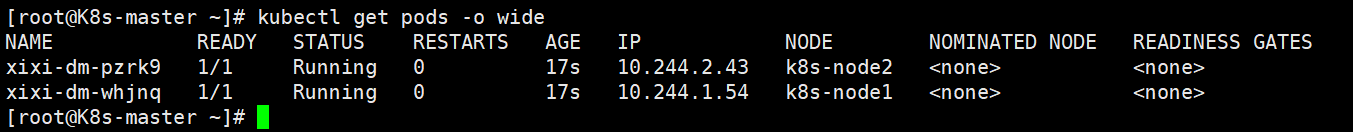

[root@K8s-master ~]# kubectl apply -f xixi-dm.yml

daemonset.apps/xixi-dm created

[root@K8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi-dm-pzrk9 1/1 Running 0 11s 10.244.2.43 k8s-node2 <none> <none>

xixi-dm-whjnq 1/1 Running 0 11s 10.244.1.54 k8s-node1 <none> <none>

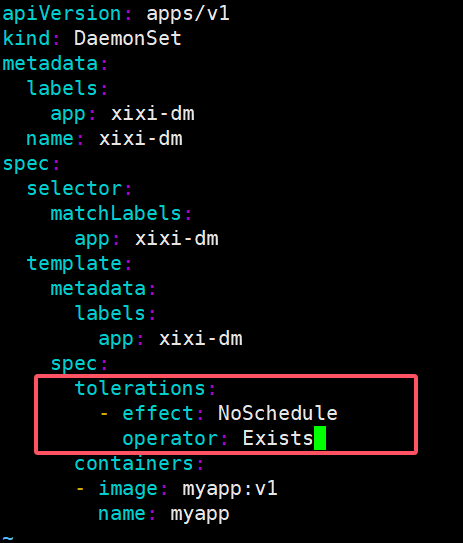

#Add a toleration for the taint

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: xixi-dm
  name: xixi-dm
spec:
  selector:
    matchLabels:
      app: xixi-dm
  template:
    metadata:
      labels:
        app: xixi-dm
    spec:
      tolerations:            # tolerate tainted nodes
      - effect: NoSchedule
        operator: Exists      # no key + Exists: tolerate every taint with the NoSchedule effect
      containers:
      - image: myapp:v1
        name: myapp

[root@K8s-master ~]# kubectl apply -f xixi-dm.yml

daemonset.apps/xixi-dm configured

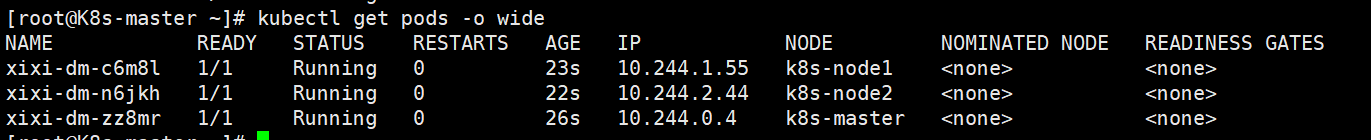

[root@K8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi-dm-c6m8l 1/1 Running 0 23s 10.244.1.55 k8s-node1 <none> <none>

xixi-dm-n6jkh 1/1 Running 0 22s 10.244.2.44 k8s-node2 <none> <none>

xixi-dm-zz8mr 1/1 Running 0 26s 10.244.0.4 k8s-master <none> <none>
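Why the control-plane node is skipped until the toleration is added can be shown with a simplified version of the matching rules from the Kubernetes taints-and-tolerations documentation. The taint key `node-role.kubernetes.io/control-plane` is an assumption about this cluster (older clusters may use `node-role.kubernetes.io/master`; `kubectl describe node k8s-master` shows the actual taints). The sketch ignores `tolerationSeconds`, and it treats `PreferNoSchedule` as non-blocking without modelling its scheduling preference.

```python
BLOCKING_EFFECTS = {"NoSchedule", "NoExecute"}  # PreferNoSchedule only discourages


def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified taint/toleration matching as described in the Kubernetes docs."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:       # an empty effect matches all effects
        return False
    if toleration.get("operator", "Equal") == "Exists":
        key = toleration.get("key", "")
        return key == "" or key == taint["key"]    # empty key + Exists matches every key
    return (toleration.get("key") == taint["key"]
            and toleration.get("value", "") == taint.get("value", ""))


def schedulable(node_taints: list[dict], tolerations: list[dict]) -> bool:
    """A Pod fits only if every blocking taint is tolerated by some toleration."""
    return all(any(tolerates(t, taint) for t in tolerations)
               for taint in node_taints if taint["effect"] in BLOCKING_EFFECTS)


master = [{"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}]
worker: list[dict] = []
doc_toleration = [{"effect": "NoSchedule", "operator": "Exists"}]  # from the manifest above

print(schedulable(master, []), schedulable(master, doc_toleration), schedulable(worker, []))
# False True True
```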


[root@k8s2 pod]# cat daemonset-example.yml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-example
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:            # tolerate tainted nodes
      - effect: NoSchedule
        operator: Exists      # no key + Exists: tolerate every taint with the NoSchedule effect
      containers:
      - name: nginx
        image: nginx

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-87h6s 1/1 Running 0 47s 10.244.0.8 k8s-master <none> <none>

daemonset-n4vs4 1/1 Running 0 47s 10.244.2.38 k8s-node2 <none> <none>

daemonset-vhxmq 1/1 Running 0 47s 10.244.1.40 k8s-node1 <none> <none>

#Clean up

[root@k8s2 pod]# kubectl delete -f daemonset-example.yml

6 The Job Controller

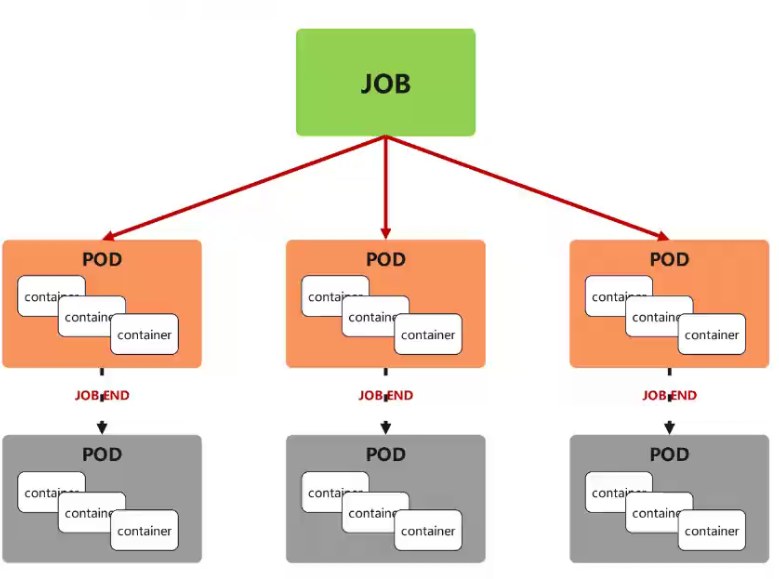

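6.1 Job Features

A Job handles batch work (a specified number of tasks to process) made up of short-lived, one-off tasks (each task runs once and then ends).

Job characteristics:

- When a Pod created by the Job terminates successfully, the Job records the number of successfully completed Pods

- When the number of successful Pods reaches the specified count, the Job is complete

6.2 Job Example

This example needs the perl:5.34.0 image: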

#Load the image

[root@K8s-master ~]# docker load -i perl-5.34.tar.gz

c9a63110150b: Loading layer 129.1MB/129.1MB

e019be289189: Loading layer 11.3MB/11.3MB

6e632f416458: Loading layer 19.31MB/19.31MB

327e42081bbe: Loading layer 156.5MB/156.5MB

3ffc178e6d86: Loading layer 537.7MB/537.7MB

89ab64e009d0: Loading layer 3.584kB/3.584kB

213cb8592bff: Loading layer 57.87MB/57.87MB

Loaded image: perl:5.34.0

#Push the image to the Harbor registry

[root@K8s-master ~]# docker tag perl:5.34.0 reg.harbor.org/library/perl:5.34.0

[root@K8s-master ~]# docker push reg.harbor.org/library/perl:5.34.0

The push refers to repository [reg.harbor.org/library/perl]

213cb8592bff: Pushed

89ab64e009d0: Pushed

3ffc178e6d86: Pushed

327e42081bbe: Pushed

6e632f416458: Pushed

e019be289189: Pushed

c9a63110150b: Pushed

5.34.0: digest: sha256:7cf07e3b102361df7f0e9f8b1311baed15d4190f26b3e096f03ac87d6dab7aeb size: 1796

Configuration:

[root@K8s-master ~]# kubectl create job haha-job --image perl:5.34.0 --dry-run=client -o yaml > haha-job.yml

[root@K8s-master ~]# vim haha-job.yml

apiVersion: batch/v1
kind: Job
metadata:
  name: haha-job
spec:
  completions: 6            # 6 successful completions in total
  parallelism: 2            # run at most 2 Pods at a time
  template:
    spec:
      containers:
      - image: perl:5.34.0
        name: perl
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]   # print pi to 2000 digits
      restartPolicy: Never  # do not restart the container after it exits
  backoffLimit: 4           # retry up to 4 times before marking the Job as failed

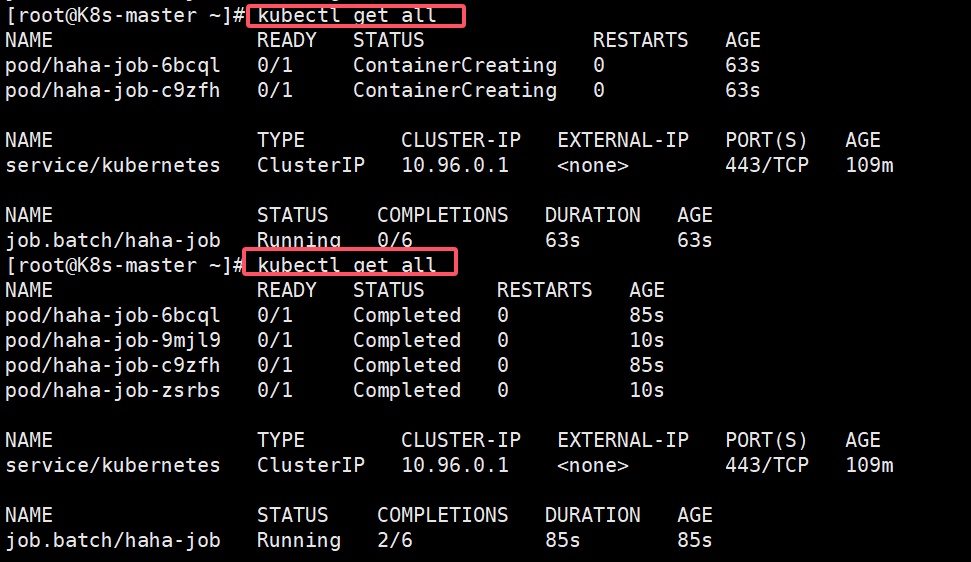

#Run the Job

[root@K8s-master ~]# kubectl apply -f haha-job.yml

job.batch/haha-job created

[root@K8s-master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/haha-job-6bcql 0/1 ContainerCreating 0 6s

pod/haha-job-c9zfh 0/1 ContainerCreating 0 6s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 108m

NAME STATUS COMPLETIONS DURATION AGE

job.batch/haha-job Running 0/6 6s 6s

#After a while

[root@K8s-master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/haha-job-6bcql 0/1 Completed 0 85s

pod/haha-job-9mjl9 0/1 Completed 0 10s

pod/haha-job-c9zfh 0/1 Completed 0 85s

pod/haha-job-zsrbs 0/1 Completed 0 10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 109m

NAME STATUS COMPLETIONS DURATION AGE

job.batch/haha-job Running 2/6 85s 85s
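With `completions: 6` and `parallelism: 2`, the Job never runs more than two Pods at once, and never more than the number of completions still missing. An idealized sketch, assuming every Pod takes the same time and succeeds (the real controller starts a new Pod as soon as one finishes rather than waiting for a whole wave); `job_waves` is a hypothetical name.

```python
def job_waves(completions: int, parallelism: int) -> list[int]:
    """Pods started per wave, assuming equal run times and no failures.
    Never more Pods than the completions still missing."""
    if parallelism < 1:
        raise ValueError("this sketch needs parallelism >= 1")
    waves, succeeded = [], 0
    while succeeded < completions:
        active = min(parallelism, completions - succeeded)
        waves.append(active)
        succeeded += active  # idealized: the whole wave succeeds
    return waves


print(job_waves(6, 2))  # [2, 2, 2]: the haha-job example runs in three rounds
```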

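Notes on the restart policy (restartPolicy):

- OnFailure: when a Pod fails, the Job restarts the container inside the same Pod instead of creating a new Pod, so the Job's count of failed Pods does not increase (the container restarts still count toward backoffLimit)

- Never: when a Pod fails, the Job creates a new Pod; the failed Pod is kept and is not restarted, and the failed count increases by 1

- Always is not allowed for Job Pods: the API server accepts only Never or OnFailure in a Job's Pod template, because a container that is always restarted could never complete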
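A toy sketch of how failures are counted under the two allowed restart policies. It assumes a single Pod slot and a list of attempt outcomes; `run_job` is hypothetical, and it leaves out the exponential back-off delay the Job controller inserts between retries. It does reflect two documented rules: a Job fails once its retries exceed `backoffLimit`, and `Always` is rejected.

```python
ALLOWED = {"Never", "OnFailure"}  # restart policies a Job's Pod template may use


def run_job(attempts: list[bool], restart_policy: str, backoff_limit: int) -> dict:
    """Toy accounting for one Job Pod slot: each False in `attempts` is a failed run."""
    if restart_policy not in ALLOWED:
        raise ValueError(f"restartPolicy {restart_policy!r} is not allowed for a Job")
    pods_created, retries = 1, 0
    for ok in attempts:
        if ok:
            return {"status": "Complete", "pods": pods_created, "retries": retries}
        retries += 1                  # both policies count toward backoffLimit
        if retries > backoff_limit:
            return {"status": "Failed", "pods": pods_created, "retries": retries}
        if restart_policy == "Never":
            pods_created += 1         # Never: a new Pod replaces the failed one
        # OnFailure: the container restarts inside the same Pod
    return {"status": "Running", "pods": pods_created, "retries": retries}


print(run_job([False, True], "Never", 4))
# {'status': 'Complete', 'pods': 2, 'retries': 1}
```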

七 cronjob 控制器

7.1 cronjob 控制器功能

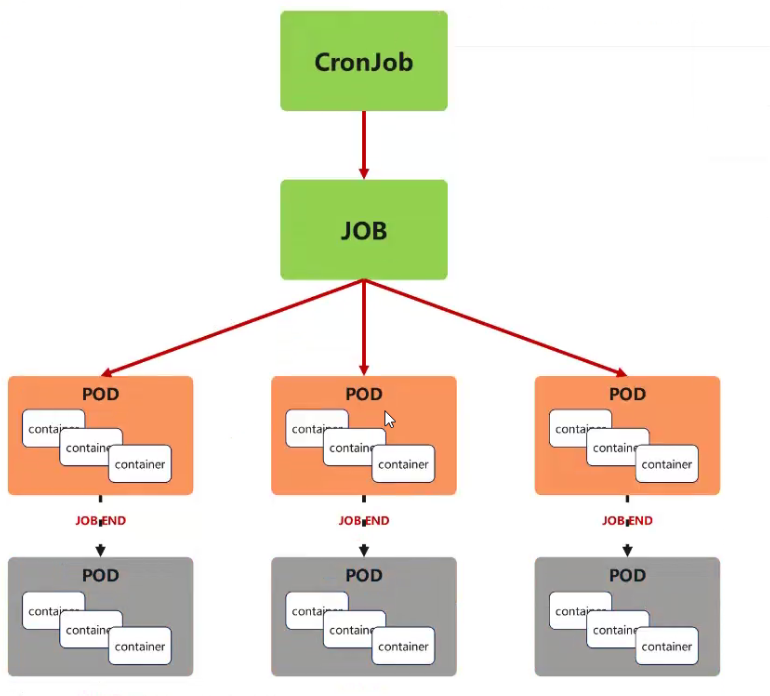

- Cron Job 创建基于时间调度的 Jobs。

- CronJob控制器以Job控制器资源为其管控对象,并借助它管理pod资源对象,

- CronJob可以以类似于Linux操作系统的周期性任务作业计划的方式控制其运行时间点及重复运行的方式。

- CronJob可以在特定的时间点(反复的)去运行job任务。
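The five schedule fields (minute, hour, day of month, month, day of week) can be explored with a small matcher. This is a minimal sketch supporting only `*`, `*/n`, single values, ranges and comma lists. The names are hypothetical, and the matcher ANDs all five fields, whereas standard cron ORs day-of-month and day-of-week when both are restricted. It also uses naive datetimes, while a CronJob is evaluated in the controller's time zone unless `spec.timeZone` is set.

```python
from datetime import datetime, timedelta

# Lowest value of each field: minute, hour, day of month, month, day of week (0 = Sunday)
FIELD_MIN = [0, 0, 1, 1, 0]


def field_matches(expr: str, value: int, minimum: int) -> bool:
    """Supports '*', '*/n', 'a', 'a-b' and comma-separated lists of these."""
    for part in expr.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            if (value - minimum) % int(part[2:]) == 0:
                return True
        elif "-" in part:
            low, high = map(int, part.split("-"))
            if low <= value <= high:
                return True
        elif int(part) == value:
            return True
    return False


def matches(schedule: str, t: datetime) -> bool:
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError("expected 5 cron fields")
    values = [t.minute, t.hour, t.day, t.month, (t.weekday() + 1) % 7]
    # Simplification: all fields are ANDed (real cron ORs day-of-month and day-of-week).
    return all(field_matches(e, v, m) for e, v, m in zip(fields, values, FIELD_MIN))


def next_runs(schedule: str, after: datetime, n: int = 3) -> list[datetime]:
    """Scan minute by minute (at most one year ahead) for the next n run times."""
    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    limit = t + timedelta(days=366)
    runs = []
    while len(runs) < n and t < limit:
        if matches(schedule, t):
            runs.append(t)
        t += timedelta(minutes=1)
    return runs


start = datetime(2024, 9, 5, 8, 48, 20)  # time of the first log line in the example below
print(next_runs("* * * * *", start, 2))  # 08:49 and 08:50 on 2024-09-05
```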

7.2 cronjob 控制器示例

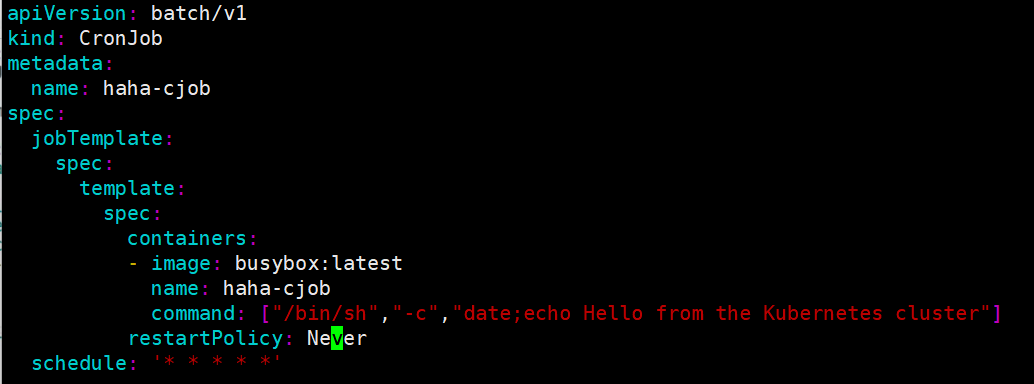

[root@K8s-master ~]# kubectl create cronjob haha-cjob --image busybox:latest --schedule="* * * * *" --restart Never --dry-run=client -o yaml > haha-cjob.yml

[root@K8s-master ~]# vim haha-cjob.yml

apiVersion: batch/v1

kind: CronJob

metadata:

name: haha-cjob

spec:

jobTemplate:

spec:

template:

spec:

containers:

- image: busybox:latest

name: haha-cjob

command: ["/bin/sh","-c","date;echo Hello from the Kubernetes cluster"]

restartPolicy: Nerver

schedule: '* * * * *'

[root@K8s-master ~]# kubectl apply -f haha-cjob.yml

cronjob.batch/haha-cjob created

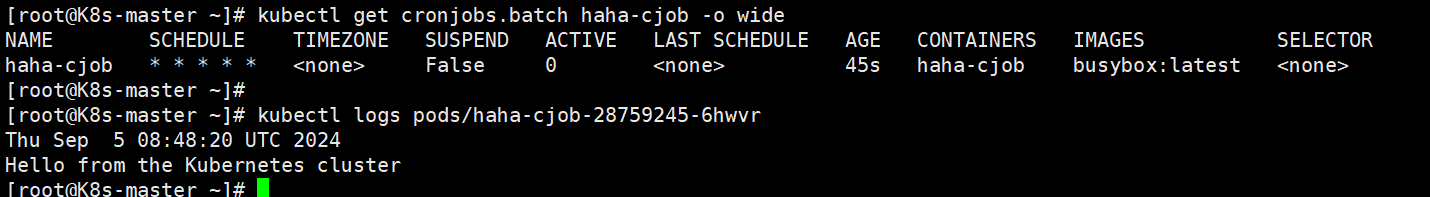

[root@K8s-master ~]# kubectl get cronjobs.batch haha-cjob -o wide

NAME SCHEDULE TIMEZONE SUSPEND ACTIVE LAST SCHEDULE AGE CONTAINERS IMAGES SELECTOR

haha-cjob * * * * * <none> False 0 <none> 45s haha-cjob busybox:latest <none>

[root@K8s-master ~]# kubectl logs pods/haha-cjob-28759245-6hwvr

Thu Sep 5 08:48:20 UTC 2024

Hello from the Kubernetes cluster

#Wait one minute

[root@K8s-master ~]# kubectl logs pods/haha-cjob-2875924

pods/haha-cjob-28759245-6hwvr pods/haha-cjob-28759246-gqnkb

[root@K8s-master ~]# kubectl logs pods/haha-cjob-28759246-gqnkb

Thu Sep 5 08:49:20 UTC 2024

Hello from the Kubernetes cluster

#Delete the CronJob

[root@K8s-master ~]# kubectl delete -f haha-cjob.yml

cronjob.batch "haha-cjob" deleted