TensorRT: Learning and Practicing Custom Plugins 002: Implementing GELU

- Code link: https://github.com/codesteller/trt-custom-plugin

Choosing a TensorRT version

- The tutorial code targets TensorRT-6.0.1.8; I also tried TensorRT-7.2.3.4, and it compiles as well

set_ifndef(TRT_LIB /usr/local/TensorRT-7.2.3.4/lib)

set_ifndef(TRT_INCLUDE /usr/local/TensorRT-7.2.3.4/include)

- However, newer versions may fail to compile

set_ifndef(TRT_LIB /usr/local/TensorRT-8.0.0.3/lib)

set_ifndef(TRT_INCLUDE /usr/local/TensorRT-8.0.0.3/include)

- The compiler reports:

error: looser throw specifier for ‘virtual int GeluPlugin::getNbOutputs() const’ // https://forums.developer.nvidia.com/t/custom-plugin-fails-error-looser-throw-specifier-for-virtual/186885

- The declarations need to be updated to match the newer API, which generally means adding the noexcept keyword:

int getNbOutputs() const noexcept override;
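When a plugin header has many overridden methods, the edit above becomes repetitive. The following is a rough sketch of how the change could be applied line by line. The helper name `add_noexcept` and the regular expression are my own illustration, not part of the original repository, and it only handles declarations written on a single line ending in `override`. The result should be reviewed by hand.

```python
import re

# Tail of a member declaration such as ") const override" or ") override".
_DECL_TAIL = re.compile(r"\)\s*(const\s+)?override\b")


def add_noexcept(line):
    """Insert noexcept before override if the declaration does not have it yet."""
    if "noexcept" in line:
        return line  # already updated, leave untouched
    return _DECL_TAIL.sub(
        lambda m: ") " + (m.group(1) or "") + "noexcept override", line
    )
```

Applied to `int getNbOutputs() const override;`, this yields the declaration shown above. Lines without `override` are returned unchanged.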

Using the plugin from Python

trt.PluginField

import numpy as np
import tensorrt as trt

def getAddScalarPlugin(scalar):
    # Search the global plugin registry for the GELU plugin creator.
    for c in trt.get_plugin_registry().plugin_creator_list:
        print(c.name)
        if c.name == "CustomGeluPlugin":  # or "LReLU_TRT"
            parameterList = []
            # res = c.create_plugin(c.name, None)  # segmentation fault (core dumped)
            parameterList.append(trt.PluginField("typeId", np.int32(0), trt.PluginFieldType.INT32))
            parameterList.append(trt.PluginField("bias", np.int32(scalar), trt.PluginFieldType.INT32))
            res = c.create_plugin(c.name, trt.PluginFieldCollection(parameterList))
            return res
    # No creator with the requested name is registered.
    return None

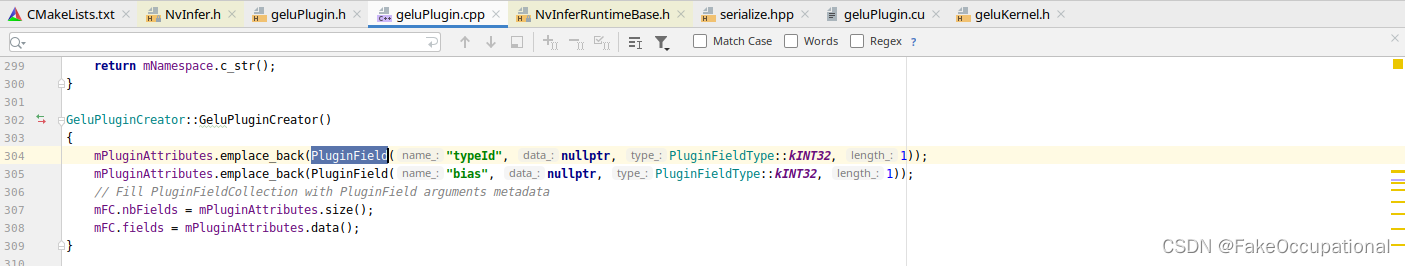
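The lookup in getAddScalarPlugin only succeeds if the plugin's shared library has already been loaded into the process. A minimal sketch of that step follows, assuming the plugin registers itself when the library is loaded (for example through a REGISTER_TENSORRT_PLUGIN-style static registration). The helper names `load_plugin_library` and `find_creator` are hypothetical and not taken from the original code.

```python
import ctypes
import os


def load_plugin_library(path):
    """Load a plugin shared library; return the handle, or None if the file is absent."""
    if not os.path.isfile(path):
        return None
    # Loading the library runs its static initializers, which is where a
    # self-registering plugin would add its creator to the registry.
    return ctypes.CDLL(path)


def find_creator(creators, name):
    """Return the first creator whose .name equals name, else None."""
    for creator in creators:
        if creator.name == name:
            return creator
    return None
```

In practice one would call `load_plugin_library` with the path of the built library (with the CMake target used here, presumably a file named libGeluPlugin.so in the build directory) and then pass `trt.get_plugin_registry().plugin_creator_list` and `"CustomGeluPlugin"` to `find_creator`.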

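- About the parameter list

The fields passed through calls such as parameterList.append(trt.PluginField("typeId", np.int32(0), trt.PluginFieldType.INT32)) correspond to the PluginField entries defined in the plugin's original source code, which is where they can be configured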

- Python documentation: https://docs.nvidia.com/deeplearning/tensorrt/api/python_api/infer/Plugin/IPluginCreator.html#tensorrt.PluginFieldCollection
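One way to check which fields a creator expects is to read its `field_names` attribute, which the Python API exposes on IPluginCreator. The sketch below assumes the plugin's C++ creator actually populates that list; the helper names are hypothetical. It compares the expected names with the ones about to be passed to create_plugin.

```python
def expected_field_names(creator):
    """Names of the fields the creator advertises through field_names."""
    return [field.name for field in creator.field_names]


def missing_fields(creator, supplied_names):
    """Expected field names that are absent from supplied_names, in creator order."""
    supplied = set(supplied_names)
    return [name for name in expected_field_names(creator) if name not in supplied]
```

For the creator found above, `missing_fields(c, ["typeId", "bias"])` returning an empty list would suggest the parameter list covers everything the plugin declares.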

Python environment setup

Install cuda-python

- pip install cuda-python -i https://mirrors.aliyun.com/pypi/simple/

Both CUDA-Python and pyCUDA allow you to write GPU kernels using CUDA C++. The kernel is presented as a string to the Python code to compile and run. The key difference is that the host-side code in one case comes from the community (Andreas K and others), whereas in the CUDA Python case it comes from NVIDIA. (Sources: https://pypi.org/project/cuda-python/, https://nvidia.github.io/cuda-python/install.html, https://blog.csdn.net/hjxu2016/article/details/122868139)

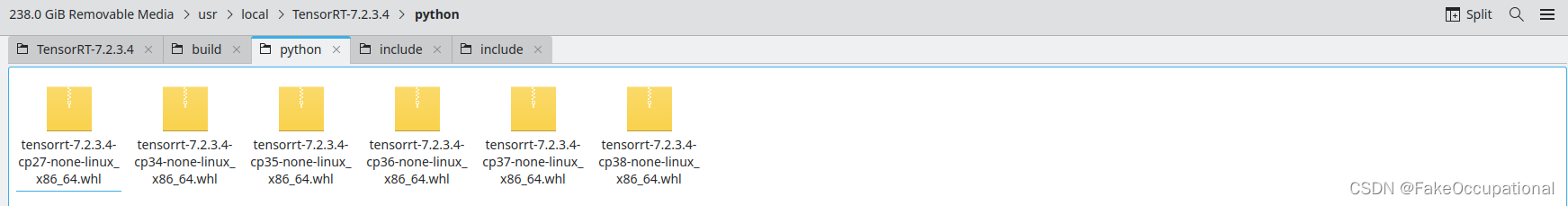

Install the matching TensorRT version for Python

- https://docs.nvidia.com/deeplearning/tensorrt/install-guide/index.html

- cd /usr/local/TensorRT-7.2.3.4/python/

- pip install tensorrt-7.2.3.4-cp37-none-linux_x86_64.whl
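The wheel file name encodes the Python version it was built for (cp37 means CPython 3.7), so it has to match the interpreter of the target environment. A small sketch for checking this before running pip, assuming the standard wheel naming scheme; the function names are illustrative only.

```python
import sys


def wheel_python_tag(filename):
    """Return the Python tag of a wheel file name, e.g. 'cp37'."""
    stem = filename[:-len(".whl")] if filename.endswith(".whl") else filename
    # Wheel names end with <python tag>-<abi tag>-<platform tag>.
    return stem.split("-")[-3]


def wheel_matches_interpreter(filename, version_info=sys.version_info):
    """True if the wheel's Python tag equals cp<major><minor> of version_info."""
    tag = "cp{}{}".format(version_info[0], version_info[1])
    return wheel_python_tag(filename) == tag
```

Calling `wheel_matches_interpreter("tensorrt-7.2.3.4-cp37-none-linux_x86_64.whl")` inside the target conda environment indicates whether this particular wheel fits that interpreter.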

- Importing tensorrt may then fail because libnvinfer cannot be found:

from .tensorrt import *
ImportError: libnvinfer.so.7: cannot open shared object file: No such file or directory

- Add the TensorRT lib directory to LD_LIBRARY_PATH when launching the script:

LD_LIBRARY_PATH=/usr/local/TensorRT-7.2.3.4/lib/ /home/pdd/anaconda3/envs/yolocopy/bin/python3.7 /home/pdd/MPI/AddScalarPlugin/cmake-build-debug/testAddScalarPlugin.py

- The next failure is a missing libcudnn:

from .tensorrt import *
ImportError: libcudnn.so.8: cannot open shared object file: No such file or directory

- Add the CUDA library directory as well:

LD_LIBRARY_PATH=/usr/local/TensorRT-7.2.3.4/lib/:/usr/local/cuda-11.1/targets/x86_64-linux/lib /home/pdd/anaconda3/envs/yolocopy/bin/python3.7 /home/pdd/MPI/AddScalarPlugin/cmake-build-debug/testAddScalarPlugin.py
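Rather than discovering the missing libraries one ImportError at a time, the dynamic loader can be probed directly. The sketch below tries to load each library by name and reports the ones that fail; the function name `missing_libraries` is my own. Note that on Linux LD_LIBRARY_PATH is read when the process starts, so it has to be set on the command line as above rather than changed from inside the script.

```python
import ctypes


def missing_libraries(names):
    """Return the subset of names that the dynamic loader cannot load."""
    missing = []
    for name in names:
        try:
            ctypes.CDLL(name)
        except OSError:
            # Raised when the file is not found or cannot be loaded.
            missing.append(name)
    return missing
```

Running `missing_libraries(["libnvinfer.so.7", "libcudnn.so.8"])` with the intended LD_LIBRARY_PATH should return an empty list once both directories are included.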

CG

- The CMakeLists.txt used

#
# Copyright (c) 2019, NVIDIA CORPORATION. All rights reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

cmake_minimum_required(VERSION 3.8 FATAL_ERROR)
set(CMAKE_CUDA_COMPILER /usr/local/cuda-11.1/bin/nvcc)
set(CUDACXX /usr/local/cuda-11.1/bin/nvcc)
project(gelu LANGUAGES CXX CUDA)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -Wno-long-long -pedantic -Wno-deprecated-declarations")
#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall ")

# Sets variable to a value if variable is unset.
macro(set_ifndef var val)
    if (NOT ${var})
        set(${var} ${val})
    endif()
    message(STATUS "Configurable variable ${var} set to ${${var}}")
endmacro()

# -------- CONFIGURATION --------
find_package(CUDA REQUIRED)

#set_ifndef(TRT_LIB /home/codesteller/apsoft/mlstack/tensorrt/TensorRT-7.0.0.11/targets/x86_64-linux-gnu/lib)
#set_ifndef(TRT_INCLUDE /home/codesteller/apsoft/mlstack/tensorrt/TensorRT-7.0.0.11/include)
set_ifndef(TRT_LIB /usr/local/TensorRT-8.6.0.12/lib)
set_ifndef(TRT_INCLUDE /usr/local/TensorRT-8.6.0.12/include)
#set_ifndef(TRT_LIB /usr/local/TensorRT-8.0.0.3/lib)
#set_ifndef(TRT_INCLUDE /usr/local/TensorRT-8.0.0.3/include)
#set_ifndef(TRT_LIB /usr/local/TensorRT-7.2.3.4/lib)
#set_ifndef(TRT_INCLUDE /usr/local/TensorRT-7.2.3.4/include)
set_ifndef(CUDA_ROOT /usr/local/cuda-11.1)

# Find dependencies:
message("\nThe following variables are derived from the values of the previous variables unless provided explicitly:\n")

# TensorRT's nvinfer lib
find_library(_NVINFER_LIB nvinfer HINTS ${TRT_LIB} PATH_SUFFIXES lib lib64)
set_ifndef(NVINFER_LIB ${_NVINFER_LIB})

# cuBLAS
find_library(_CUBLAS_LIB cublas HINTS ${CUDA_ROOT} PATH_SUFFIXES lib lib64)
set_ifndef(CUBLAS_LIB ${_CUBLAS_LIB})

# CUDA include dir
find_path(_CUDA_INC_DIR cuda_runtime_api.h HINTS ${CUDA_ROOT} PATH_SUFFIXES include)
set_ifndef(CUDA_INC_DIR ${_CUDA_INC_DIR})

# -------- BUILDING --------
# include_directories(${TRT_INCLUDE} ${CUDA_INC_DIR})
include_directories(${CUDA_ROOT}/include ${TRT_INCLUDE})
include_directories(${CUDA_ROOT}/include ${TRT_INCLUDE} ${CMAKE_SOURCE_DIR}/include/ ${CMAKE_SOURCE_DIR}/)

add_library(GeluPlugin MODULE
    ${CMAKE_SOURCE_DIR}/geluPlugin.cu
    ${CMAKE_SOURCE_DIR}/geluKernel.h
    ${CMAKE_SOURCE_DIR}/geluPlugin.cpp
    ${CMAKE_SOURCE_DIR}/geluPlugin.h
)

# Use C++11
target_compile_features(GeluPlugin PUBLIC cxx_std_11)

# Link TensorRT's nvinfer lib
target_link_libraries(GeluPlugin PRIVATE ${NVINFER_LIB} ${CUBLAS_LIB})

set_target_properties(GeluPlugin PROPERTIES
    CUDA_SEPARABLE_COMPILATION ON
)
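Because TRT_LIB, TRT_INCLUDE and CUDA_ROOT are set through set_ifndef, they can be overridden with -D options at configure time instead of editing the file for every TensorRT version. A small sketch that assembles such a configure command follows; the function name is hypothetical, and the lib and include subdirectories are assumed to follow the layout of the paths used above.

```python
def cmake_configure_command(source_dir, trt_root, cuda_root):
    """Build a cmake command that overrides the set_ifndef defaults via -D."""
    return [
        "cmake",
        "-DTRT_LIB={}/lib".format(trt_root),
        "-DTRT_INCLUDE={}/include".format(trt_root),
        "-DCUDA_ROOT={}".format(cuda_root),
        source_dir,
    ]
```

The resulting list can be passed to `subprocess.run(cmd, cwd=build_dir, check=True)` from an empty build directory. The hard-coded CMAKE_CUDA_COMPILER path near the top of the file is not affected by these options and still needs to match the local CUDA installation.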