Implementing Chunked Upload of Large Files with Spring Boot

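1. Use cases for chunked upload

- Faster upload of large files: when a file is larger than 100 MB, multipart upload lets you upload several parts in parallel to speed up the transfer.

- Poor network conditions: multipart upload is recommended on unreliable networks. When an upload fails, only the failed part needs to be resent.

- Unknown file size: the upload can start before the final file size is known, which is common in industries such as video surveillance.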

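2. How it works

The principle is simple. The client splits the large file into blocks according to a fixed rule, for example 20 MB per block, and uploads each block to the server separately. Once every block has been uploaded, the client tells the server to merge them; when the merge completes, the whole task is finished.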
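The split-then-merge idea can be sketched on in-memory data before looking at the Spring code. This is only an illustration under stated assumptions: `ChunkSketch`, `chunkCount`, `split` and `merge` are hypothetical names that do not appear in the project, and a real client would read from a file rather than a byte array.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper illustrating split-then-merge on in-memory data. */
final class ChunkSketch {

    /** Number of chunks needed; the last chunk may be smaller than chunkSize. */
    static int chunkCount(long totalSize, int chunkSize) {
        return (int) ((totalSize + chunkSize - 1) / chunkSize);
    }

    /** Client side: cut the data into consecutive blocks of at most chunkSize bytes. */
    static List<byte[]> split(byte[] data, int chunkSize) {
        List<byte[]> chunks = new ArrayList<>();
        for (int offset = 0; offset < data.length; offset += chunkSize) {
            int end = Math.min(data.length, offset + chunkSize);
            chunks.add(Arrays.copyOfRange(data, offset, end));
        }
        return chunks;
    }

    /** Server side: concatenate the chunks in chunk-number order. */
    static byte[] merge(List<byte[]> chunksInOrder) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (byte[] chunk : chunksInOrder) {
            out.write(chunk, 0, chunk.length);
        }
        return out.toByteArray();
    }
}
```

For example, `ChunkSketch.split(data, 20)` on 50 bytes of data yields three chunks of 20, 20 and 10 bytes, and `ChunkSketch.merge` on that list reproduces the original bytes. The merge step only works if the chunks are concatenated in their original order, which is why each upload request carries a chunk number.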

3. Code project

Goal

Implement chunked upload of large files

pom.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>springboot-demo</artifactId>

<groupId>com.et</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>file</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-autoconfigure</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpmime</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-core</artifactId>

<version>5.8.15</version>

</dependency>

</dependencies>

</project>

controller

package com.et.controller;

import com.et.bean.Chunk;

import com.et.bean.FileInfo;

import com.et.service.ChunkService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.core.io.Resource;

import org.springframework.http.HttpHeaders;

import org.springframework.http.ResponseEntity;

import org.springframework.web.bind.annotation.*;

import java.util.List;

@RestController

@RequestMapping("file")

public class ChunkController {

@Autowired

private ChunkService chunkService;

/**

* upload by part

*

* @param chunk

* @return

*/

@PostMapping(value = "chunk")

public ResponseEntity<String> chunk(Chunk chunk) {

chunkService.chunk(chunk);

return ResponseEntity.ok("File Chunk Upload Success");

}

/**

* merge

*

* @param filename

* @return

*/

@GetMapping(value = "merge")

public ResponseEntity<Void> merge(@RequestParam("filename") String filename) {

chunkService.merge(filename);

return ResponseEntity.ok().build();

}

/**

* list uploaded files

*

* @return files

*/

@GetMapping("/files")

public ResponseEntity<List<FileInfo>> list() {

return ResponseEntity.ok(chunkService.list());

}

/**

* get single file

*

* @param filename

* @return file

*/

@GetMapping("/files/{filename:.+}")

public ResponseEntity<Resource> getFile(@PathVariable("filename") String filename) {

return ResponseEntity.ok().header(HttpHeaders.CONTENT_DISPOSITION,

"attachment; filename=\"" + filename + "\"").body(chunkService.getFile(filename));

}

}

config

package com.et.config;

import com.et.service.FileClient;

import com.et.service.impl.LocalFileSystemClient;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.util.HashMap;

import java.util.Map;

import java.util.function.Supplier;

@Configuration

public class FileClientConfig {

@Value("${file.client.type:local-file}")

private String fileClientType;

private static final Map<String, Supplier<FileClient>> FILE_CLIENT_SUPPLY = new HashMap<String, Supplier<FileClient>>() {

{

put("local-file", LocalFileSystemClient::new);

// put("aws-s3", AWSFileClient::new);

}

};

/**

* get client

*

* @return

*/

@Bean

public FileClient fileClient() {

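Supplier<FileClient> supplier = FILE_CLIENT_SUPPLY.get(fileClientType);

// fail fast with a clear message instead of a NullPointerException

if (supplier == null) {

throw new IllegalStateException("Unsupported file.client.type: " + fileClientType);

}

return supplier.get();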

}

}

service

package com.et.service;

import com.et.bean.Chunk;

import com.et.bean.FileInfo;

import org.springframework.core.io.Resource;

import java.util.List;

public interface ChunkService {

void chunk(Chunk chunk);

void merge(String filename);

List<FileInfo> list();

Resource getFile(String filename);

}

package com.et.service.impl;

import com.et.bean.Chunk;

import com.et.bean.ChunkProcess;

import com.et.bean.FileInfo;

import com.et.service.ChunkService;

import com.et.service.FileClient;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.core.io.Resource;

import org.springframework.stereotype.Service;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.List;

import java.util.Map;

import java.util.Optional;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.CopyOnWriteArrayList;

import java.util.concurrent.atomic.AtomicBoolean;

@Service

@Slf4j

public class ChunkServiceImpl implements ChunkService {

// process

private static final Map<String, ChunkProcess> CHUNK_PROCESS_STORAGE = new ConcurrentHashMap<>();

// file list

private static final List<FileInfo> FILE_STORAGE = new CopyOnWriteArrayList<>();

@Autowired

private FileClient fileClient;

@Override

public void chunk(Chunk chunk) {

String filename = chunk.getFilename();

boolean match = FILE_STORAGE.stream().anyMatch(fileInfo -> fileInfo.getFileName().equals(filename));

if (match) {

throw new RuntimeException("File [ " + filename + " ] already exists");

}

ChunkProcess chunkProcess;

String uploadId;

if (CHUNK_PROCESS_STORAGE.containsKey(filename)) {

chunkProcess = CHUNK_PROCESS_STORAGE.get(filename);

uploadId = chunkProcess.getUploadId();

AtomicBoolean isUploaded = new AtomicBoolean(false);

Optional.ofNullable(chunkProcess.getChunkList()).ifPresent(chunkPartList ->

isUploaded.set(chunkPartList.stream().anyMatch(chunkPart -> chunkPart.getChunkNumber() == chunk.getChunkNumber())));

if (isUploaded.get()) {

log.info("file [{}] chunk [{}] already uploaded, skipping", chunk.getFilename(), chunk.getChunkNumber());

return;

}

} else {

uploadId = fileClient.initTask(filename);

chunkProcess = new ChunkProcess().setFilename(filename).setUploadId(uploadId);

CHUNK_PROCESS_STORAGE.put(filename, chunkProcess);

}

List<ChunkProcess.ChunkPart> chunkList = chunkProcess.getChunkList();

String chunkId = fileClient.chunk(chunk, uploadId);

chunkList.add(new ChunkProcess.ChunkPart(chunkId, chunk.getChunkNumber()));

CHUNK_PROCESS_STORAGE.put(filename, chunkProcess.setChunkList(chunkList));

}

@Override

public void merge(String filename) {

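ChunkProcess chunkProcess = CHUNK_PROCESS_STORAGE.get(filename);

// no chunk was ever uploaded under this name, so there is nothing to merge

if (chunkProcess == null) {

throw new RuntimeException("No upload in progress for file [ " + filename + " ]");

}

fileClient.merge(chunkProcess);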

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

String currentTime = simpleDateFormat.format(new Date());

FILE_STORAGE.add(new FileInfo().setUploadTime(currentTime).setFileName(filename));

CHUNK_PROCESS_STORAGE.remove(filename);

}

@Override

public List<FileInfo> list() {

return FILE_STORAGE;

}

@Override

public Resource getFile(String filename) {

return fileClient.getFile(filename);

}

}

package com.et.service.impl;

import com.et.bean.FileInfo;

import com.et.service.FileUploadService;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.core.io.FileSystemResource;

import org.springframework.core.io.Resource;

import org.springframework.stereotype.Service;

import org.springframework.util.FileCopyUtils;

import org.springframework.web.multipart.MultipartFile;

import java.io.File;

import java.io.IOException;

import java.io.InputStream;

import java.io.OutputStream;

import java.nio.file.Files;

import java.nio.file.Paths;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.List;

import java.util.concurrent.CopyOnWriteArrayList;

@Service

@Slf4j

public class FileUploadServiceImpl implements FileUploadService {

@Value("${upload.path:/data/upload/}")

private String filePath;

private static final List<FileInfo> FILE_STORAGE = new CopyOnWriteArrayList<>();

@Override

public void upload(MultipartFile[] files) {

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

for (MultipartFile file : files) {

String fileName = file.getOriginalFilename();

boolean match = FILE_STORAGE.stream().anyMatch(fileInfo -> fileInfo.getFileName().equals(fileName));

if (match) {

throw new RuntimeException("File [ " + fileName + " ] already exists");

}

String currentTime = simpleDateFormat.format(new Date());

try (InputStream in = file.getInputStream();

OutputStream out = Files.newOutputStream(Paths.get(filePath + fileName))) {

FileCopyUtils.copy(in, out);

} catch (IOException e) {

log.error("File [{}] upload failed", fileName, e);

throw new RuntimeException(e);

}

FileInfo fileInfo = new FileInfo().setFileName(fileName).setUploadTime(currentTime);

FILE_STORAGE.add(fileInfo);

}

}

@Override

public List<FileInfo> list() {

return FILE_STORAGE;

}

@Override

public Resource getFile(String fileName) {

FILE_STORAGE.stream()

.filter(info -> info.getFileName().equals(fileName))

.findFirst()

.orElseThrow(() -> new RuntimeException("File [ " + fileName + " ] does not exist"));

File file = new File(filePath + fileName);

return new FileSystemResource(file);

}

}

The code above shows only the key parts; the complete code is in the repository below.
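ChunkServiceImpl relies on a ChunkProcess bean that is not listed in this article. The following is a minimal sketch of what it might look like, inferred only from how the service calls it (fluent setters, a nested ChunkPart carrying a chunk number). The field name `location` and the use of `CopyOnWriteArrayList` are assumptions, and the class in the repository may differ, for example by generating the accessors with Lombok. It is declared package-private here so the snippet compiles on its own; in the project it would be a public class in `com.et.bean`.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/** Sketch (assumed shape): tracks the upload progress of one file. */
class ChunkProcess {

    private String filename;
    private String uploadId;
    // Created eagerly so getChunkList() never returns null;
    // CopyOnWriteArrayList tolerates chunks arriving on different request threads.
    private List<ChunkPart> chunkList = new CopyOnWriteArrayList<>();

    public String getFilename() { return filename; }
    public String getUploadId() { return uploadId; }
    public List<ChunkPart> getChunkList() { return chunkList; }

    // Fluent setters, matching calls such as new ChunkProcess().setFilename(..).setUploadId(..)
    public ChunkProcess setFilename(String filename) { this.filename = filename; return this; }
    public ChunkProcess setUploadId(String uploadId) { this.uploadId = uploadId; return this; }
    public ChunkProcess setChunkList(List<ChunkPart> chunkList) { this.chunkList = chunkList; return this; }

    /** One stored chunk: an identifier for where it was written and its position in the file. */
    public static class ChunkPart {
        private final String location;  // hypothetical name for the id returned by FileClient.chunk
        private final int chunkNumber;  // primitive int, so == compares values rather than references

        public ChunkPart(String location, int chunkNumber) {
            this.location = location;
            this.chunkNumber = chunkNumber;
        }

        public String getLocation() { return location; }
        public int getChunkNumber() { return chunkNumber; }
    }
}
```

Initialising the list in the field declaration means the service can add the first ChunkPart without a null check, and keeping the chunk number a primitive keeps the `==` comparison in the duplicate-chunk check a value comparison.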

Code repository

- https://github.com/Harries/springboot-demo (File)
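The article does not show an `application.properties` file. Assuming Spring Boot 2.x or later, the default multipart limits (1 MB per file, 10 MB per request) are smaller than the 20 MB chunks used in the test below, so they would probably need to be raised. The fragment below is a sketch under that assumption; the 30MB values are illustrative, while `file.client.type` and `upload.path` are the property names read by the classes above, shown with their in-code defaults.

```properties
# Allow 20 MB chunks through Spring's multipart handling
# (Spring Boot 2.x defaults: max-file-size=1MB, max-request-size=10MB)
spring.servlet.multipart.max-file-size=30MB
spring.servlet.multipart.max-request-size=30MB

# Properties read by the classes above, with their in-code defaults
file.client.type=local-file
upload.path=/data/upload/
```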

4. Testing

- Start the Spring Boot application

- Write a test class

package com.et.file;

import cn.hutool.core.io.FileUtil;

import org.junit.Test;

import org.springframework.core.io.FileSystemResource;

import org.springframework.http.HttpEntity;

import org.springframework.http.HttpHeaders;

import org.springframework.http.MediaType;

import org.springframework.http.ResponseEntity;

import org.springframework.util.LinkedMultiValueMap;

import org.springframework.util.MultiValueMap;

import org.springframework.web.client.RestTemplate;

import java.io.RandomAccessFile;

public class MultipartUploadTest {

@Test

public void testUpload() throws Exception {

String chunkFileFolder = "D:/tmp/";

java.io.File file = new java.io.File("D:/SoftWare/oss-browser-win32-ia32.zip");

long contentLength = file.length();

// partSize:20MB

long partSize = 20 * 1024 * 1024;

// the last part may be smaller than 20 MB

long chunkFileNum = (long) Math.ceil(contentLength * 1.0 / partSize);

RestTemplate restTemplate = new RestTemplate();

try (RandomAccessFile raf_read = new RandomAccessFile(file, "r")) {

// buffer

byte[] b = new byte[1024];

for (int i = 1; i <= chunkFileNum; i++) {

// chunk

java.io.File chunkFile = new java.io.File(chunkFileFolder + i);

// write

try (RandomAccessFile raf_write = new RandomAccessFile(chunkFile, "rw")) {

int len;

while ((len = raf_read.read(b)) != -1) {

raf_write.write(b, 0, len);

if (chunkFile.length() >= partSize) {

break;

}

}

// upload

MultiValueMap<String, Object> body = new LinkedMultiValueMap<>();

body.add("file", new FileSystemResource(chunkFile));

body.add("chunkNumber", i);

body.add("chunkSize", partSize);

body.add("currentChunkSize", chunkFile.length());

body.add("totalSize", contentLength);

body.add("filename", file.getName());

body.add("totalChunks", chunkFileNum);

HttpHeaders headers = new HttpHeaders();

headers.setContentType(MediaType.MULTIPART_FORM_DATA);

HttpEntity<MultiValueMap<String, Object>> requestEntity = new HttpEntity<>(body, headers);

String serverUrl = "http://localhost:8080/file/chunk";

ResponseEntity<String> response = restTemplate.postForEntity(serverUrl, requestEntity, String.class);

System.out.println("Response code: " + response.getStatusCode() + " Response body: " + response.getBody());

} finally {

FileUtil.del(chunkFile);

}

}

}

// merge file

String mergeUrl = "http://localhost:8080/file/merge?filename=" + file.getName();

ResponseEntity<String> response = restTemplate.getForEntity(mergeUrl, String.class);

System.out.println("Response code: " + response.getStatusCode() + " Response body: " + response.getBody());

}

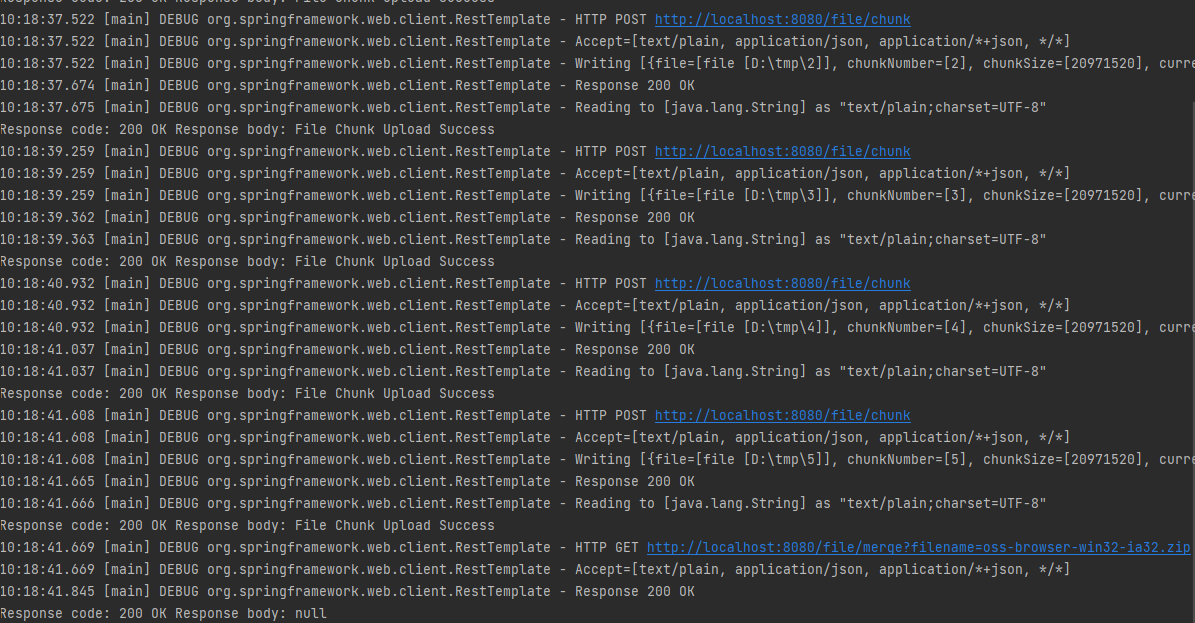

}- 运行测试类,日志如下
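Because ChunkServiceImpl skips a chunk number it has already recorded, resending a chunk after a failed request is safe. The test client could therefore wrap each chunk upload in a small retry helper, so that a transient network error costs one chunk rather than the whole file. The sketch below is an illustration only: `RetrySketch` and `withRetry` are hypothetical names, and it assumes failures surface as unchecked exceptions, as they do with RestTemplate.

```java
import java.util.function.Supplier;

/** Hypothetical helper: retries an action that may fail with an unchecked exception. */
final class RetrySketch {

    /**
     * Runs the action up to maxAttempts times and returns the first successful result.
     * If every attempt fails, the exception from the last attempt is rethrown.
     */
    static <T> T withRetry(Supplier<T> action, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again
            }
        }
        throw last;
    }
}
```

In the test class, the upload call could then be written as `RetrySketch.withRetry(() -> restTemplate.postForEntity(serverUrl, requestEntity, String.class), 3)`. A production client would normally also wait between attempts; that is left out here to keep the sketch short.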

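5. References

- Multipart upload of large files in Spring Boot, with support for local files and Amazon S3 - Yuandupier

- Implementing Chunked Upload of Large Files with Spring Boot | Harries Blog™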