9.12 - Installing Kubernetes with kubeadm and using basic commands

I. Installation environment

| No. | Hostname | IP address |
|---|---|---|
| 1 | k8s-master | 192.168.2.66 |
| 2 | k8s-node01 | 192.168.2.77 |
| 3 | k8s-node02 | 192.168.2.88 |

II. Preparation

1. Set up passwordless SSH login

    [root@k8s-master ~]# ssh-keygen
    [root@k8s-master ~]# ssh-copy-id root@192.168.2.77
    [root@k8s-master ~]# ssh-copy-id root@192.168.2.88

2. Configure the yum repositories

    [root@k8s-master ~]# cd /etc/yum.repos.d/
    [root@k8s-master yum.repos.d]# vim docker-ce.repo
    [docker-ce-stable]
    name=Docker CE Stable - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-stable-debuginfo]
    name=Docker CE Stable - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-stable-source]
    name=Docker CE Stable - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/stable
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test]
    name=Docker CE Test - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test-debuginfo]
    name=Docker CE Test - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-test-source]
    name=Docker CE Test - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/test
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly]
    name=Docker CE Nightly - $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly-debuginfo]
    name=Docker CE Nightly - Debuginfo $basearch
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [docker-ce-nightly-source]
    name=Docker CE Nightly - Sources
    baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/nightly
    enabled=0
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

    [root@k8s-master yum.repos.d]# vim kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

    # the same repo files are present on both worker nodes
    [root@k8s-node01 yum.repos.d]# ls
    CentOS-Base.repo  epel.repo  haha.repo  docker-ce.repo  epel-testing.repo  kubernetes.repo
    [root@k8s-node02 yum.repos.d]# ls
    CentOS-Base.repo  epel.repo  haha.repo  docker-ce.repo  epel-testing.repo  kubernetes.repo

3. Clear and rebuild the yum cache

    [root@k8s-master yum.repos.d]# yum clean all && yum makecache
    [root@k8s-node01 yum.repos.d]# yum clean all && yum makecache
    [root@k8s-node02 yum.repos.d]# yum clean all && yum makecache
    # four repositories should now be available: aliyun, epel, kubernetes, docker-ce

4. Host name mapping (required on all three hosts)

    vim /etc/hosts
    192.168.2.66 k8s-master
    192.168.2.77 k8s-node01
    192.168.2.88 k8s-node02

    # verify
    [root@k8s-master yum.repos.d]# ping k8s-node01
    PING k8s-node01 (192.168.2.77) 56(84) bytes of data.
    64 bytes from k8s-node01 (192.168.2.77): icmp_seq=1 ttl=64 time=0.441 ms
    ^C
    --- k8s-node01 ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4001ms
    rtt min/avg/max/mdev = 0.430/0.512/0.697/0.097 ms
    [root@k8s-master yum.repos.d]# ping k8s-node02
    PING k8s-node02 (192.168.2.88) 56(84) bytes of data.
    64 bytes from k8s-node02 (192.168.2.88): icmp_seq=1 ttl=64 time=0.422 ms
    ^C
    --- k8s-node02 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2000ms
    rtt min/avg/max/mdev = 0.397/0.582/0.928/0.245 ms
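Because the same three entries have to be added on every host, the manual edit can be scripted. The following is only a sketch: `HOSTS_FILE`, `add_host_entry`, and `add_cluster_hosts` are hypothetical names, and the file defaults to a scratch file so that nothing touches the real /etc/hosts until you point it there yourself.

```shell
#!/usr/bin/env bash
# Append the cluster's host mappings to a hosts file, skipping entries that
# already exist so the script can be re-run safely.
# HOSTS_FILE is a hypothetical variable; it defaults to a scratch file.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    local ip="$1" name="$2" file="$3"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$ip $name" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

add_cluster_hosts() {
    local file="$1"
    add_host_entry 192.168.2.66 k8s-master "$file"
    add_host_entry 192.168.2.77 k8s-node01 "$file"
    add_host_entry 192.168.2.88 k8s-node02 "$file"
}

add_cluster_hosts "$HOSTS_FILE"
add_cluster_hosts "$HOSTS_FILE"   # the second run adds nothing
cat "$HOSTS_FILE"
```

Running it with `HOSTS_FILE=/etc/hosts` as root on each host would apply the mapping for real.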

5. Install common tools

    [root@k8s-master ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git tree -y
    [root@k8s-node01 ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git tree -y
    [root@k8s-node02 ~]# yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git tree -y

6. Disable firewalld, NetworkManager, SELinux, and swap

    [root@k8s-master ~]# systemctl disable --now firewalld
    [root@k8s-master ~]# systemctl disable --now NetworkManager
    [root@k8s-master ~]# setenforce 0
    # setenforce only lasts until the next reboot; set SELINUX=disabled in the config file to make it permanent
    [root@k8s-master ~]# vim /etc/selinux/config
    # the swap entry in /etc/fstab before the change
    [root@k8s-master ~]# vim /etc/fstab
    /dev/mapper/centos-swap swap swap defaults 0 0

    # turn swap off now, then comment out the fstab entry so it stays off after a reboot
    [root@k8s-master ~]# swapoff -a && sysctl -w vm.swappiness=0
    vm.swappiness = 0
    [root@k8s-master ~]# sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
    [root@k8s-master ~]# vim /etc/fstab
    #/dev/mapper/centos-swap swap swap defaults 0 0
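The sed expression used on /etc/fstab can be tried safely on a copy first. This sketch applies the same expression to a scratch file and prints the result instead of editing in place; `comment_out_swap` and `FSTAB_COPY` are hypothetical names, and the two fstab lines are sample data.

```shell
#!/usr/bin/env bash
# Print the given fstab-style file with every active swap entry commented out.
# The expression matches lines that contain "swap" with no '#' before it and
# inserts '#' at the start of the line, so lines that are already commented
# are left alone.
comment_out_swap() {
    sed -E '/^[^#]*swap/s@^@#@' "$1"
}

# Sample data standing in for /etc/fstab.
FSTAB_COPY="$(mktemp)"
cat > "$FSTAB_COPY" <<'EOF'
/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

comment_out_swap "$FSTAB_COPY"
```

Only the swap line gains a leading `#`; the root filesystem line is printed unchanged.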

7. Synchronize time

    [root@k8s-master ~]# ntpdate time2.aliyun.com
    11 Sep 10:20:26 ntpdate[2271]: adjust time server 203.107.6.88 offset -0.012244 sec
    [root@k8s-master ~]# which ntpdate
    /usr/sbin/ntpdate
    [root@k8s-master ~]# crontab -e
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

    [root@k8s-node01 ~]# yum -y install ntpdate
    [root@k8s-node01 ~]# ntpdate time2.aliyun.com
    11 Sep 10:20:36 ntpdate[1779]: adjust time server 203.107.6.88 offset -0.009831 sec
    [root@k8s-node01 ~]# crontab -e
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

    [root@k8s-node02 ~]# yum -y install ntpdate
    [root@k8s-node02 ~]# ntpdate time2.aliyun.com
    11 Sep 10:20:39 ntpdate[1915]: adjust time server 203.107.6.88 offset -0.016733 sec
    [root@k8s-node02 ~]# crontab -e
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

8. Configure limits

    ulimit -SHn 65535
    vim /etc/security/limits.conf
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 65535
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited

9. Download the YAML files from Gitee

The YAML file that defines a pod is similar in form to a docker-compose.yaml file.

    [root@k8s-master ~]# cd /root/
    [root@k8s-master ~]# git clone https://gitee.com/dukuan/k8s-ha-install.git
    Cloning into 'k8s-ha-install'...
    remote: Enumerating objects: 920, done.
    remote: Counting objects: 100% (8/8), done.
    remote: Compressing objects: 100% (6/6), done.
    remote: Total 920 (delta 1), reused 0 (delta 0), pack-reused 912
    Receiving objects: 100% (920/920), 19.74 MiB | 293.00 KiB/s, done.
    Resolving deltas: 100% (388/388), done.

III. Configure kernel modules

1. IPVS configuration (all three nodes)

    yum install ipvsadm ipset sysstat conntrack libseccomp -y
    # load the kernel modules with modprobe
    [root@k8s-master ~]# modprobe -- ip_vs          # core IPVS module
    [root@k8s-master ~]# modprobe -- ip_vs_rr       # round-robin scheduler
    [root@k8s-master ~]# modprobe -- ip_vs_wrr      # weighted round-robin scheduler
    [root@k8s-master ~]# modprobe -- ip_vs_sh       # source-hashing scheduler
    [root@k8s-master ~]# modprobe -- nf_conntrack   # connection tracking

    # Modules to load at boot for IPVS and related features.
    # Each module name must be alone on its line: this file format only treats
    # lines that start with # or ; as comments, so a trailing comment after a
    # module name prevents that module from loading.
    [root@k8s-master ~]# vim /etc/modules-load.d/ipvs.conf
    # core IPVS load-balancing module
    ip_vs
    # least-connection scheduler
    ip_vs_lc
    # weighted least-connection scheduler
    ip_vs_wlc
    # round-robin scheduler
    ip_vs_rr
    # weighted round-robin scheduler
    ip_vs_wrr
    # locality-based least-connection scheduler
    ip_vs_lblc
    # locality-based least-connection scheduler with replication
    ip_vs_lblcr
    # destination-hashing scheduler
    ip_vs_dh
    # source-hashing scheduler
    ip_vs_sh
    # weighted failover scheduler
    ip_vs_fo
    # never-queue scheduler
    ip_vs_nq
    # shortest-expected-delay scheduler
    ip_vs_sed
    # FTP protocol helper for IPVS
    ip_vs_ftp
    # connection state tracking
    nf_conntrack
    # iptables packet filtering
    ip_tables
    # creation and management of IP sets
    ip_set
    # iptables interface to IP sets
    xt_set
    # set matching in iptables rules
    ipt_set
    # reverse-path filtering
    ipt_rpfilter
    # iptables REJECT target: refuses packets and returns a specific error code
    ipt_REJECT
    # IP-in-IP tunneling between two networks
    ipip

    [root@k8s-master ~]# lsmod | grep -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs_wrr 12697 0
    ip_vs_rr 12600 0
    ip_vs 141432 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
    [root@k8s-node01 ~]# lsmod | grep -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs_wrr 12697 0
    ip_vs_rr 12600 0
    ip_vs 141432 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
    [root@k8s-node02 ~]# lsmod | grep -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs_wrr 12697 0
    ip_vs_rr 12600 0
    ip_vs 141432 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
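Comparing `lsmod` listings by eye across three nodes is error-prone, so a small filter can report which expected modules are absent. This is a sketch under assumptions: `REQUIRED_MODULES` and `missing_modules` are hypothetical names, the list covers only the modules loaded by hand in this step, and the sample text is an illustrative listing rather than live output.

```shell
#!/usr/bin/env bash
# Modules loaded by hand in this step; extend the list as needed.
REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# Reads lsmod-formatted text on stdin and prints every required module
# that does not appear in the first column.
missing_modules() {
    local loaded mod
    loaded="$(awk '{ print $1 }')"
    for mod in $REQUIRED_MODULES; do
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
}

# Illustrative listing in which two schedulers are not loaded.
sample='ip_vs_sh 12688 0
ip_vs 141432 2 ip_vs_sh
nf_conntrack 133053 1 ip_vs
libcrc32c 12644 3 xfs,ip_vs,nf_conntrack'

printf '%s\n' "$sample" | missing_modules
```

On a real host the equivalent call would be `lsmod | missing_modules`; empty output means every listed module is loaded.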

2. Kernel parameters for k8s (all three nodes)

    # Kernel parameters required by k8s. Each setting goes on its own
    # uncommented line, with its explanation on the comment line above it.
    [root@k8s-master ~]# vim /etc/sysctl.d/k8s.conf
    # pass bridged IPv4 traffic through iptables
    net.bridge.bridge-nf-call-iptables = 1
    # pass bridged IPv6 traffic through ip6tables
    net.bridge.bridge-nf-call-ip6tables = 1
    # allow a mount to be detached while it is still in use in another namespace (1 = allow)
    fs.may_detach_mounts = 1
    # allow routing of loopback (127.0.0.0/8) addresses (1 = allow, 0 = forbid)
    net.ipv4.conf.all.route_localnet = 1
    # memory allocation policy: 1 = always allow overcommit, 0 = heuristic overcommit
    vm.overcommit_memory=1
    # whether the kernel panics on out-of-memory: 0 = no panic, 1 = panic
    vm.panic_on_oom=0
    # maximum number of files and directories inotify can watch per user
    fs.inotify.max_user_watches=89100
    # system-wide maximum number of file descriptors
    fs.file-max=52706963
    # maximum number of file descriptors a single process can open
    fs.nr_open=52706963
    # maximum size of the connection tracking table
    net.netfilter.nf_conntrack_max=2310720
    # idle time (seconds) before TCP starts sending keepalive probes
    net.ipv4.tcp_keepalive_time = 600
    # number of unanswered keepalive probes before the connection is dropped
    net.ipv4.tcp_keepalive_probes = 3
    # interval (seconds) between successive keepalive probes
    net.ipv4.tcp_keepalive_intvl = 15
    # maximum number of sockets held in TIME_WAIT
    net.ipv4.tcp_max_tw_buckets = 36000
    # allow reuse of TIME_WAIT sockets (1 = allow, 0 = forbid)
    net.ipv4.tcp_tw_reuse = 1
    # maximum number of orphaned sockets in the system
    net.ipv4.tcp_max_orphans = 327680
    # number of retries before an orphaned connection is closed
    net.ipv4.tcp_orphan_retries = 3
    # protect against SYN floods (1 = enable SYN cookies, 0 = disable)
    net.ipv4.tcp_syncookies = 1
    # maximum length of the SYN request queue
    net.ipv4.tcp_max_syn_backlog = 16384
    # maximum size of the IP connection tracking table
    net.ipv4.ip_conntrack_max = 65536
    # turn off the TCP timestamps option
    net.ipv4.tcp_timestamps = 0
    # maximum length of the listen queue
    net.core.somaxconn = 16384

    reboot

    [root@k8s-master ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs 141432 2 ip_vs_sh
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
    [root@k8s-node01 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs 141432 2 ip_vs_sh
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
    [root@k8s-node02 ~]# lsmod | grep --color=auto -e ip_vs -e nf_conntrack
    ip_vs_sh 12688 0
    ip_vs 141432 2 ip_vs_sh
    nf_conntrack 133053 1 ip_vs
    libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
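Files under /etc/sysctl.d treat any line beginning with `#` or `;` as a comment, so a setting that is accidentally left commented is silently skipped. The sketch below lists only the keys that would actually be applied; `active_sysctl_keys` and `conf` are hypothetical names and the file contents are sample data.

```shell
#!/usr/bin/env bash
# Print the keys of all settings in a sysctl-style file that are NOT
# commented out (comment lines start with '#' or ';').
active_sysctl_keys() {
    grep -Ev '^[[:space:]]*([#;]|$)' "$1" \
        | sed -E 's/^[[:space:]]*//; s/[[:space:]]*=.*$//'
}

# Sample data: two active settings, two commented ones, one blank line.
conf="$(mktemp)"
cat > "$conf" <<'EOF'
# net.ipv4.ip_forward = 1
net.core.somaxconn = 16384
; fs.file-max = 52706963

net.ipv4.tcp_syncookies = 1
EOF

active_sysctl_keys "$conf"
```

Pointing the function at /etc/sysctl.d/k8s.conf gives a quick check that every intended parameter is active before rebooting.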

IV. Install the basic components

1. Install docker-ce, docker-ce-cli, and containerd.io (all three nodes)

    # remove any previously installed containerd
    [root@k8s-master ~]# yum remove -y podman runc containerd
    # install Docker and containerd
    [root@k8s-master ~]# yum install docker-ce docker-ce-cli containerd.io -y
    [root@k8s-master ~]# yum list installed|grep docker
    containerd.io.x86_64               1.6.33-3.1.el7   @docker-ce-stable
    docker-buildx-plugin.x86_64        0.14.1-1.el7     @docker-ce-stable
    docker-ce.x86_64                   3:26.1.4-1.el7   @docker-ce-stable
    docker-ce-cli.x86_64               1:26.1.4-1.el7   @docker-ce-stable
    docker-ce-rootless-extras.x86_64   26.1.4-1.el7     @docker-ce-stable
    docker-compose-plugin.x86_64       2.27.1-1.el7     @docker-ce-stable

2. Load the kernel modules required by containerd (all three nodes)

    [root@k8s-master ~]# cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
    > overlay
    > br_netfilter
    > EOF
    overlay
    br_netfilter
    [root@k8s-master ~]# modprobe overlay
    [root@k8s-master ~]# modprobe br_netfilter

3. Set the kernel parameters required by containerd (all three nodes)

    [root@k8s-master ~]# cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    > net.bridge.bridge-nf-call-iptables = 1
    > net.ipv4.ip_forward = 1
    > net.bridge.bridge-nf-call-ip6tables = 1
    > EOF
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    [root@k8s-master ~]# sysctl --system

    [root@k8s-node01 ~]# cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    > net.bridge.bridge-nf-call-iptables = 1
    > net.ipv4.ip_forward = 1
    > net.bridge.bridge-nf-call-ip6tables = 1
    > EOF
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    [root@k8s-node01 ~]# sysctl --system

    [root@k8s-node02 ~]# cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
    > net.bridge.bridge-nf-call-iptables = 1
    > net.ipv4.ip_forward = 1
    > net.bridge.bridge-nf-call-ip6tables = 1
    > EOF
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    [root@k8s-node02 ~]# sysctl --system

4. containerd configuration file (all three nodes)

    [root@k8s-master ~]# ls /etc/containerd/config.toml
    /etc/containerd/config.toml
    [root@k8s-master ~]# mkdir -p /etc/containerd/
    [root@k8s-master ~]# containerd config default | tee /etc/containerd/config.toml
    [root@k8s-master ~]# vim /etc/containerd/config.toml
    # line 63
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9"
    # line 127
    SystemdCgroup = true

    # start containerd now and enable it at boot
    [root@k8s-master ~]# systemctl enable --now containerd.service
    Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.
    # check the service status
    [root@k8s-master ~]# systemctl status containerd.service

5. Configure the runtime endpoint used by the crictl client (all three nodes)

    [root@k8s-master ~]# cat > /etc/crictl.yaml <<EOF
    > runtime-endpoint: unix:///run/containerd/containerd.sock   # address of the container runtime
    > image-endpoint: unix:///run/containerd/containerd.sock     # address of the image service
    > timeout: 10     # timeout of 10 seconds
    > debug: false    # debug mode off
    > EOF

6. Install the Kubernetes components (all three nodes)

    [root@k8s-master ~]# yum -y install kubeadm-1.28* kubectl-1.28* kubelet-1.28*
    [root@k8s-master ~]# yum list installed | grep kube
    cri-tools.x86_64        1.26.0-0   @kubernetes
    kubeadm.x86_64          1.28.2-0   @kubernetes
    kubectl.x86_64          1.28.2-0   @kubernetes
    kubelet.x86_64          1.28.2-0   @kubernetes
    kubernetes-cni.x86_64   1.2.0-0    @kubernetes
    [root@k8s-node01 ~]# yum list installed | grep kube
    cri-tools.x86_64        1.26.0-0   @kubernetes
    kubeadm.x86_64          1.28.2-0   @kubernetes
    kubectl.x86_64          1.28.2-0   @kubernetes
    kubelet.x86_64          1.28.2-0   @kubernetes
    kubernetes-cni.x86_64   1.2.0-0    @kubernetes
    [root@k8s-node02 ~]# yum list installed | grep kube
    cri-tools.x86_64        1.26.0-0   @kubernetes
    kubeadm.x86_64          1.28.2-0   @kubernetes
    kubectl.x86_64          1.28.2-0   @kubernetes
    kubelet.x86_64          1.28.2-0   @kubernetes
    kubernetes-cni.x86_64   1.2.0-0    @kubernetes

    [root@k8s-master ~]# systemctl daemon-reload
    [root@k8s-master ~]# systemctl enable --now kubelet
    [root@k8s-master ~]# systemctl status kubelet
    [root@k8s-master ~]# netstat -lntup|grep kubelet
    tcp    0   0 127.0.0.1:10248   0.0.0.0:*   LISTEN   2916/kubelet
    tcp6   0   0 :::10250          :::*        LISTEN   2916/kubelet
    tcp6   0   0 :::10255          :::*        LISTEN   2916/kubelet

    # Troubleshooting
    # If kubelet fails to start, check that swap is disabled and look at /var/log/messages.
    # If /var/lib/kubelet/config.yaml is missing, the packages may need to be reinstalled:
    yum -y remove kubelet-1.28*
    yum -y install kubelet-1.28*
    systemctl daemon-reload
    systemctl enable --now kubelet
    yum -y install kubeadm-1.28*
    # kubelet listens on three ports: 10248, 10250, and 10255

V. Initialize the Kubernetes cluster

1. kubeadm configuration file

    [root@k8s-master ~]# vim kubeadm-config.yaml
    # edit the kubeadm configuration file
    apiVersion: kubeadm.k8s.io/v1beta3   # version of the kubeadm configuration API (v1beta3)
    bootstrapTokens:                     # bootstrap tokens, used for authentication while the cluster is being initialized
    - groups:                            # groups associated with this token
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury     # value of the bootstrap token
      ttl: 24h0m0s                       # token lifetime, 24 hours here
      usages:                            # what the token may be used for
      - signing                          # digital signing
      - authentication                   # authentication
    kind: InitConfiguration              # type of this configuration object: an initialization configuration
    localAPIEndpoint:                    # address and port of the local API endpoint
      advertiseAddress: 192.168.2.66
      bindPort: 6443
    nodeRegistration:                    # settings used when the node registers
      criSocket: unix:///var/run/containerd/containerd.sock   # socket path of the container runtime (CRI)
      name: k8s-master                   # node name
      taints:                            # taints
      - effect: NoSchedule               # ordinary pods are not scheduled on this node
        key: node-role.kubernetes.io/control-plane   # marks the node as a control-plane node
    ---
    apiServer:                           # API server settings
      certSANs:                          # extra subject alternative names (SANs) for the API server certificate; the IP is enough
      - 192.168.2.66
      timeoutForControlPlane: 4m0s       # control-plane timeout, 4 minutes here
    apiVersion: kubeadm.k8s.io/v1beta3   # version of the kubeadm configuration API
    certificatesDir: /etc/kubernetes/pki # directory where certificates are stored
    clusterName: kubernetes              # cluster name
    controlPlaneEndpoint: 192.168.2.66:6443   # address and port of the control-plane node
    controllerManager: {}                # controller manager settings; empty means defaults
    etcd:                                # etcd settings
      local:                             # local etcd instance
        dataDir: /var/lib/etcd           # data directory
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers   # image repository used by Kubernetes (Alibaba Cloud mirror)
    kind: ClusterConfiguration           # type of this configuration object: a cluster configuration
    kubernetesVersion: v1.28.2           # Kubernetes version
    networking:                          # cluster network settings
      dnsDomain: cluster.local           # DNS domain of the cluster
      podSubnet: 172.16.0.0/16           # pod subnet
      serviceSubnet: 10.96.0.0/16        # service subnet
    scheduler: {}                        # default scheduler behavior

    [root@k8s-master ~]# kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml
    [root@k8s-master ~]# ls
    anaconda-ks.cfg  k8s-ha-install  kubeadm-config.yaml  new.yaml

2. Pull the component images

    # pull the Kubernetes component images from the Alibaba Cloud repository named in new.yaml
    [root@k8s-master ~]# kubeadm config images pull --config new.yaml
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.28.2
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.28.2
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.28.2
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.28.2
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.9-0
    [config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.10.1

3. Initialize the cluster

    [root@k8s-master ~]# kubeadm init --config /root/new.yaml --upload-certs
    # the first attempt fails because the kubelet service is already running, so stop kubelet and retry
    [root@k8s-master ~]# systemctl stop kubelet
    [root@k8s-master ~]# kubeadm init --config /root/new.yaml --upload-certs

    # when initialization finishes, save the join command it prints
    kubeadm join 192.168.2.66:6443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:88133267ffe3e3592b2946e8d175e6d74685f2f46ed53c96f6ffbaeb6970bb6a

    # save the token
    [root@k8s-master ~]# vim token
    # a new worker node joins with this command (token plus CA certificate hash);
    # for a highly available control plane, save the complete output of kubeadm init instead
    kubeadm join 192.168.2.66:6443 --token bqqsrs.f2jv6wgun97hzk70 \
        --discovery-token-ca-cert-hash sha256:88133267ffe3e3592b2946e8d175e6d74685f2f46ed53c96f6ffbaeb6970bb6a

    # If initialization fails, check the following:
    # 1. host sizing: 2 cores, 20G
    # 2. IP forwarding: echo 1 > /proc/sys/net/ipv4/ip_forward
    # 3. kubelet cannot start:
    #    - the swap partition is still enabled
    #    - the configuration file is missing
    #    - inspect /var/log/messages

4. Join the worker nodes to the cluster

    # stop the kubelet service before joining
    [root@k8s-node01 ~]# systemctl stop kubelet.service
    Warning: kubelet.service changed on disk. Run 'systemctl daemon-reload' to reload units.
    [root@k8s-node01 ~]# kubeadm join 192.168.2.66:6443 --token bqqsrs.f2jv6wgun97hzk70 --discovery-token-ca-cert-hash sha256:88133267ffe3e3592b2946e8d175e6d74685f2f46ed53c96f6ffbaeb6970bb6a

    # stop the kubelet service before joining
    [root@k8s-node02 ~]# systemctl stop kubelet.service
    Warning: kubelet.service changed on disk. Run 'systemctl daemon-reload' to reload units.
    [root@k8s-node02 ~]# kubeadm join 192.168.2.66:6443 --token bqqsrs.f2jv6wgun97hzk70 --discovery-token-ca-cert-hash sha256:88133267ffe3e3592b2946e8d175e6d74685f2f46ed53c96f6ffbaeb6970bb6a

    # list all nodes; at first kubectl cannot see them because KUBECONFIG is not set
    [root@k8s-master ~]# kubectl get nodes
    # temporary fix (current shell only)
    [root@k8s-master ~]# export KUBECONFIG=/etc/kubernetes/admin.conf
    # list all nodes
    [root@k8s-master ~]# kubectl get nodes
    NAME         STATUS     ROLES           AGE     VERSION
    k8s-master   NotReady   control-plane   37m     v1.28.2
    k8s-node01   NotReady   <none>          2m44s   v1.28.2
    k8s-node02   NotReady   <none>          2m19s   v1.28.2
    # permanent fix
    [root@k8s-master ~]# vim .bashrc
    export KUBECONFIG=/etc/kubernetes/admin.conf

    # If a node fails to join, check the following:
    # 1. kubelet was not stopped
    # 2. IP forwarding is not enabled
    # 3. the token has expired: re-initialize or generate a new token
    # 4. containerd on the node is not running properly
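A mistyped token or hash is an easy way to make a join fail, so the command can be assembled and checked before it is run. This is a sketch, not part of kubeadm: the function names are hypothetical, and the patterns encode the bootstrap token format (six characters, a dot, sixteen characters, all lowercase letters or digits) and a `sha256:` prefix followed by 64 hex digits.

```shell
#!/usr/bin/env bash
# Bootstrap tokens have the form "<6 chars>.<16 chars>", using only
# lowercase letters and digits.
is_valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# The CA certificate hash is "sha256:" followed by 64 hex digits.
is_valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Print the join command, or fail if either value is malformed.
build_join_command() {
    local endpoint="$1" token="$2" hash="$3"
    is_valid_bootstrap_token "$token" || { echo "bad token: $token" >&2; return 1; }
    is_valid_ca_hash "$hash" || { echo "bad hash: $hash" >&2; return 1; }
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
        "$endpoint" "$token" "$hash"
}

build_join_command 192.168.2.66:6443 bqqsrs.f2jv6wgun97hzk70 \
    sha256:88133267ffe3e3592b2946e8d175e6d74685f2f46ed53c96f6ffbaeb6970bb6a
```

When the saved token has expired, running `kubeadm token create --print-join-command` on the control-plane node prints a fresh join command with a new token.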

5. Check the status of the component containers

    # list all nodes
    [root@k8s-master ~]# kubectl get nodes
    NAME         STATUS     ROLES           AGE     VERSION
    k8s-master   NotReady   control-plane   37m     v1.28.2
    k8s-node01   NotReady   <none>          2m44s   v1.28.2
    k8s-node02   NotReady   <none>          2m19s   v1.28.2

    # show the status of all pods
    [root@k8s-master ~]# kubectl get po -A
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
    kube-system   coredns-6554b8b87f-m5wnb             0/1     Pending   0          42m
    kube-system   coredns-6554b8b87f-zz9cb             0/1     Pending   0          42m
    kube-system   etcd-k8s-master                      1/1     Running   0          43m
    kube-system   kube-apiserver-k8s-master            1/1     Running   0          43m
    kube-system   kube-controller-manager-k8s-master   1/1     Running   0          43m
    kube-system   kube-proxy-gtt6v                     1/1     Running   0          42m
    kube-system   kube-proxy-snr8v                     1/1     Running   0          7m53s
    kube-system   kube-proxy-z5hrs                     1/1     Running   0          8m18s
    kube-system   kube-scheduler-k8s-master            1/1     Running   0          43m

    # show the full pod status, including IP and node
    [root@k8s-master ~]# kubectl get po -Aowide
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
    kube-system   coredns-6554b8b87f-m5wnb             0/1     Pending   0          49m   <none>         <none>       <none>           <none>
    kube-system   coredns-6554b8b87f-zz9cb             0/1     Pending   0          49m   <none>         <none>       <none>           <none>
    kube-system   etcd-k8s-master                      1/1     Running   0          49m   192.168.2.66   k8s-master   <none>           <none>
    kube-system   kube-apiserver-k8s-master            1/1     Running   0          49m   192.168.2.66   k8s-master   <none>           <none>
    kube-system   kube-controller-manager-k8s-master   1/1     Running   0          49m   192.168.2.66   k8s-master   <none>           <none>
    kube-system   kube-proxy-gtt6v                     1/1     Running   0          49m   192.168.2.66   k8s-master   <none>           <none>
    kube-system   kube-proxy-snr8v                     1/1     Running   0          14m   192.168.2.88   k8s-node02   <none>           <none>
    kube-system   kube-proxy-z5hrs                     1/1     Running   0          15m   192.168.2.77   k8s-node01   <none>           <none>
    kube-system   kube-scheduler-k8s-master            1/1     Running   0          49m   192.168.2.66   k8s-master   <none>           <none>

STATUS values:

| Status | Description |
|---|---|
| Pending | The pod has been created but is not running yet |
| Running | The pod is working normally |
| ContainerCreating | The pod's containers are being created |
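With many pods it helps to filter the listing down to the ones that need attention. The sketch below reads `kubectl get po -A` output and prints only pods whose STATUS is neither Running nor Completed; `pods_not_running` is a hypothetical name and the sample text is a shortened listing used for illustration.

```shell
#!/usr/bin/env bash
# Reads `kubectl get po -A` output on stdin (columns: NAMESPACE NAME READY
# STATUS RESTARTS AGE) and prints "namespace/name status" for every pod
# whose STATUS is not Running or Completed. The header row is skipped.
pods_not_running() {
    awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Shortened sample listing.
sample='NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE
kube-system   coredns-6554b8b87f-m5wnb   0/1     Pending   0          42m
kube-system   etcd-k8s-master            1/1     Running   0          43m'

printf '%s\n' "$sample" | pods_not_running
```

On the control-plane node the same filter would be used as `kubectl get po -A | pods_not_running`.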

VI. Deploy Calico

1. Deploying Calico

    [root@k8s-master ~]# ls
    anaconda-ks.cfg  k8s-ha-install  kubeadm-config.yaml  new.yaml  token
    # switch the git branch
    [root@k8s-master ~]# cd k8s-ha-install/
    [root@k8s-master k8s-ha-install]# git checkout manual-installation-v1.28.x && cd calico/
    Branch manual-installation-v1.28.x set up to track remote branch manual-installation-v1.28.x from origin.
    Switched to a new branch 'manual-installation-v1.28.x'
    [root@k8s-master calico]# ls
    calico.yaml
    [root@k8s-master calico]# pwd
    /root/k8s-ha-install/calico
    [root@k8s-master calico]# cat ~/new.yaml | grep Sub
      podSubnet: 172.16.0.0/16
      serviceSubnet: 10.96.0.0/16
    [root@k8s-master calico]# vim calico.yaml
    # edit the file and replace POD_CIDR with 172.16.0.0/16 (line 4801)
    value: "172.16.0.0/16"

    [root@k8s-master calico]# kubectl get po -A
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
    kube-system   coredns-6554b8b87f-m5wnb             0/1     Pending   0          94m
    kube-system   coredns-6554b8b87f-zz9cb             0/1     Pending   0          94m
    kube-system   etcd-k8s-master                      1/1     Running   0          94m
    kube-system   kube-apiserver-k8s-master            1/1     Running   0          94m
    kube-system   kube-controller-manager-k8s-master   1/1     Running   0          94m
    kube-system   kube-proxy-gtt6v                     1/1     Running   0          94m
    kube-system   kube-proxy-snr8v                     1/1     Running   0          59m
    kube-system   kube-proxy-z5hrs                     1/1     Running   0          59m
    kube-system   kube-scheduler-k8s-master            1/1     Running   0          94m

    # create the Calico pods
    [root@k8s-master calico]# kubectl apply -f calico.yaml
    # view the logs
    [root@k8s-master calico]# kubectl logs calico-node-9jp9m -n kube-system
    # if something goes wrong, check the system log on the affected node
    [root@k8s-node01 ~]# vim /var/log/messages
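The manual edit of calico.yaml can also be done with sed, taking the subnet straight from the kubeadm configuration so the two files cannot drift apart. This is a sketch on scratch files: `pod_subnet_from_config`, `render_pod_cidr`, and `workdir` are hypothetical names, and the two small files only stand in for the relevant lines of new.yaml and calico.yaml.

```shell
#!/usr/bin/env bash
# Print the value of the first "podSubnet:" key in a kubeadm config file.
pod_subnet_from_config() {
    awk '$1 == "podSubnet:" { print $2; exit }' "$1"
}

# Print the manifest with every POD_CIDR placeholder replaced by the subnet.
# '#' is used as the sed delimiter because the subnet itself contains '/'.
render_pod_cidr() {
    sed "s#POD_CIDR#$1#g" "$2"
}

# Scratch stand-ins for ~/new.yaml and calico.yaml.
workdir="$(mktemp -d)"
printf 'networking:\n  podSubnet: 172.16.0.0/16\n  serviceSubnet: 10.96.0.0/16\n' > "$workdir/new.yaml"
printf -- '- name: CALICO_IPV4POOL_CIDR\n  value: "POD_CIDR"\n' > "$workdir/calico.yaml"

subnet="$(pod_subnet_from_config "$workdir/new.yaml")"
render_pod_cidr "$subnet" "$workdir/calico.yaml"
```

Against the real files, the rendered output could be redirected to a new manifest and passed to `kubectl apply -f`.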

2. Supplement

(1) If an error like the one in this screenshot appears

[screenshot missing in the source]

(2) Solution:

    # 1. add these two files to /etc/cni/net.d
    [root@k8s-master ~]# cd /etc/cni/net.d/
    [root@k8s-master net.d]# rz -E
    rz waiting to receive.
    [root@k8s-master net.d]# rz -E
    rz waiting to receive.
    [root@k8s-master net.d]# ls
    10-calico.conflist  calico-kubeconfig

    # 2. update the master
    [root@k8s-master ~]# yum -y update
    # then reboot the virtual machine
    [root@k8s-master ~]# reboot

    # check the pod and node status; the k8s deployment is complete
    [root@k8s-master calico]# kubectl get po -A
    NAMESPACE     NAME                                       READY   STATUS    RESTARTS
    kube-system   calico-kube-controllers-6d48795585-qt9b5   1/1     Running   2 (9m44s ago
    kube-system   calico-node-2gx4m                          1/1     Running   0
    kube-system   calico-node-bkjsj                          1/1     Running   0
    kube-system   calico-node-tr6g6                          1/1     Running   2 (9m44s ago
    kube-system   coredns-6554b8b87f-m5wnb                   1/1     Running   2 (9m44s ago
    kube-system   coredns-6554b8b87f-zz9cb                   1/1     Running   2 (9m44s ago
    kube-system   etcd-k8s-master                            1/1     Running   5 (9m44s ago
    kube-system   kube-apiserver-k8s-master                  1/1     Running   6 (9m44s ago
    kube-system   kube-controller-manager-k8s-master         1/1     Running   6 (9m44s ago
    kube-system   kube-proxy-gtt6v                           1/1     Running   5 (9m44s ago
    kube-system   kube-proxy-snr8v                           1/1     Running   5 (12m ago)
    kube-system   kube-proxy-z5hrs                           1/1     Running   5 (14m ago)
    kube-system   kube-scheduler-k8s-master                  1/1     Running   6 (9m44s ag

3. Test

(1) Create a pod

    # add a new pod
    [root@k8s-master calico]# kubectl run nginx0 --image=nginx
    pod/nginx0 created
    [root@k8s-master calico]# kubectl get po -Aowide|grep nginx
    # view the logs
    [root@k8s-master calico]# kubectl logs nginx0
    Error from server (BadRequest): container "nginx0" in pod "nginx0" is waiting to start: trying and failing to pull image

(2) Delete the pod

    [root@k8s-master calico]# kubectl delete pod nginx0
    pod "nginx0" deleted
    [root@k8s-master calico]# kubectl get po -Aowide|grep nginx

VII. Deploy Metrics

1. Copy the certificate to all nodes

    # send the front-proxy CA certificate to node01
    [root@k8s-master calico]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt
    # send the front-proxy CA certificate to node02
    [root@k8s-master calico]# scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

2. Install metrics server

    [root@k8s-master ~]# ls components.yaml
    components.yaml
    [root@k8s-master ~]# mkdir pods
    [root@k8s-master ~]# mv components.yaml pods/
    [root@k8s-master ~]# cd pods/
    [root@k8s-master pods]# ls
    components.yaml
    [root@k8s-master pods]# cat components.yaml | wc -l
    202
    # create the metrics server resources
    [root@k8s-master pods]# kubectl create -f components.yaml
    # check that the metrics server pod is running in the kube-system namespace
    [root@k8s-master pods]# kubectl get po -A|grep metrics
    kube-system   metrics-server-79776b6d54-dmwk6   1/1   Running   0   2m26s

3. View node resource monitoring

    # show the system resource usage of the nodes
    [root@k8s-master pods]# kubectl top nodes
    NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    k8s-master   151m         7%     1099Mi          63%
    k8s-node01   40m          4%     467Mi           53%
    k8s-node02   39m          3%     483Mi           55%
    [root@k8s-master pods]# kubectl top pods -A
    NAMESPACE     NAME                                       CPU(cores)   MEMORY(bytes)
    kube-system   calico-kube-controllers-6d48795585-qt9b5   2m           37Mi
    kube-system   calico-node-2gx4m                          16m          86Mi
    kube-system   calico-node-bkjsj                          17m          86Mi
    kube-system   calico-node-tr6g6                          20m          98Mi
    kube-system   coredns-6554b8b87f-m5wnb                   1m           18Mi
    kube-system   coredns-6554b8b87f-zz9cb                   1m           25Mi
    kube-system   etcd-k8s-master                            18m          70Mi
    kube-system   kube-apiserver-k8s-master                  49m          308Mi
    kube-system   kube-controller-manager-k8s-master         14m          92Mi
    kube-system   kube-proxy-gtt6v                           1m           33Mi
    kube-system   kube-proxy-snr8v                           1m           34Mi
    kube-system   kube-proxy-z5hrs                           1m           34Mi
    kube-system   kube-scheduler-k8s-master                  3m           42Mi
    kube-system   metrics-server-79776b6d54-dmwk6            3m           15Mi

4. Deploy the dashboard

    [root@k8s-master pods]# cd ~/k8s-ha-install/
    [root@k8s-master k8s-ha-install]# ls
    bootstrap  CoreDNS  dashboard  metrics-server  README.md  calico  csi-hostpath  kubeadm-metrics-server  pki  snapshotter
    [root@k8s-master k8s-ha-install]# cd dashboard/
    [root@k8s-master dashboard]# ls
    dashboard-user.yaml  dashboard.yaml
    # create the dashboard resources
    [root@k8s-master dashboard]# kubectl create -f .
    [root@k8s-master dashboard]# kubectl get po -A|grep dashboard
    kubernetes-dashboard   dashboard-metrics-scraper-7b554c884f-7489m   1/1   Running             0   58s
    kubernetes-dashboard   kubernetes-dashboard-54b699784c-fsjrw        0/1   ContainerCreating   0   58s

    [root@k8s-master dashboard]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
    # line 32
    type: NodePort
    Edit cancelled, no changes made.
    # edit: opens the resource in a text editor
    # svc: selects a Service, here kubernetes-dashboard
    # -n: selects the namespace, here kubernetes-dashboard
    # The command drops you into a vim-style editor. Do not use the mouse wheel, it produces garbage input.
    # Search with "/", for example "/type", to find the field. If the type is already NodePort, skip this step.

    # find the access port: show the kubernetes-dashboard Service, including its port and service IP
    [root@k8s-master dashboard]# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard
    NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
    kubernetes-dashboard   NodePort   10.96.242.161   <none>        443:30754/TCP   4m7s
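The node port is the number between `:` and `/` in the PORT(S) column, and it can be extracted with awk rather than read off by eye. The sketch below parses a saved listing; `node_port_from_svc` is a hypothetical name and the sample text mirrors the Service line shown above.

```shell
#!/usr/bin/env bash
# Reads `kubectl get svc <name>` output on stdin and prints the node port,
# i.e. the middle field of the PORT(S) column ("443:30754/TCP" -> 30754).
# The header row is skipped.
node_port_from_svc() {
    awk 'NR > 1 { split($5, parts, "[:/]"); print parts[2] }'
}

sample='NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
kubernetes-dashboard   NodePort   10.96.242.161   <none>        443:30754/TCP   4m7s'

printf '%s\n' "$sample" | node_port_from_svc

# Non-interactive alternatives on a live cluster (not run here):
#   kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard \
#       -p '{"spec":{"type":"NodePort"}}'
#   kubectl get svc kubernetes-dashboard -n kubernetes-dashboard \
#       -o jsonpath='{.spec.ports[0].nodePort}'
```

The `kubectl patch` form sets the Service type without opening an editor, and the `jsonpath` query returns the port directly from the API.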

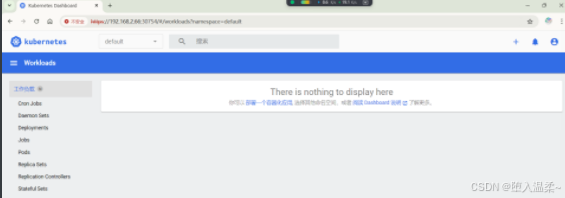

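5. Open in a browser: https://192.168.2.66:30754

Once the port is known, the dashboard is reachable at the master's IP plus that port. Use the port reported in the terminal, and access it over HTTPS.

6. Get a login token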

[root@k8s-master dashboard]# kubectl create token admin-user -n kube-system eyJhbGciOiJSUzI1NiIsImtpZCI6Il9ZSlNRQ2FxeTZ3QmcyTnZXc05XeDhyX0hoYWFBNkkxbWtXcGU3SWJ2X1kifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzI2MTE1NTQ2LCJpYXQiOjE3MjYxMTE5NDYsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiYzk0YzJkZDEtMzk2MS00ZTFkLWJjM2ItYjlmOWMyNTU0MmQ0In19LCJuYmYiOjE3MjYxMTE5NDYsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbi11c2VyIn0.XdbwTvblcoZK0O5ItN9n6wbfDJ0EbfOWOunbjlVO6GlaORTwC4sSN8HBx-WSB0ZvdFlbSCC7sq096Xm_9bctxFG79WdGqkzCi241khbpPOpYIeOVl1PufqFg9z2TeedmoKKnCOPpBzwFXW_bY48IbrbDW7HcgFwOVq5iD9dergB9nk3CbxNczc8yuvln1MJuKK29juOfdluNsseXGkvv22uQjby-Ku34Fo2cXh1sTlQLfZlVp0uPm_0p9jB2tqtiS-XMX1k7miG0hS9UC7ol7H-Xih6ZpUhgXjyBpW9O8SyRrMywUQi_n0ZnaPdY5G1NSuBO9vbWcfjhbVTz9_mAyA

7. Paste the token into the browser

(1) Enter the token generated in the terminal into the "Enter token" field

(2) You can now log in

8. Create a pod from the nginx image

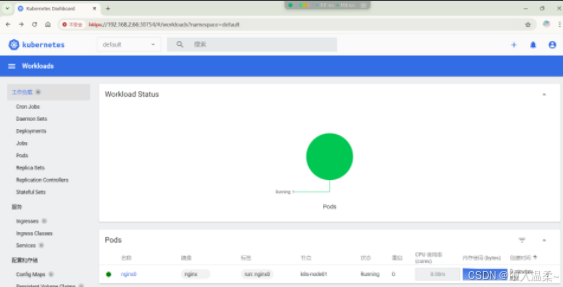

    [root@k8s-master dashboard]# kubectl run nginx0 --image=nginx
    pod/nginx0 created
    [root@k8s-master dashboard]# kubectl get po -Aowide|grep nginx
    default   nginx0   1/1   Running   0   3m20s   172.16.85.194   k8s-node01   <none>   <none>

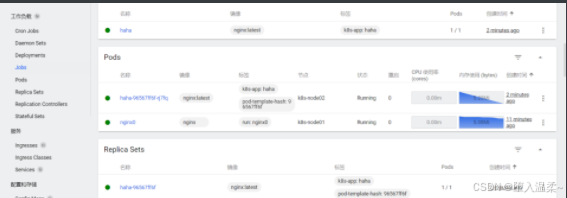

9.访问页面上就会显示出来

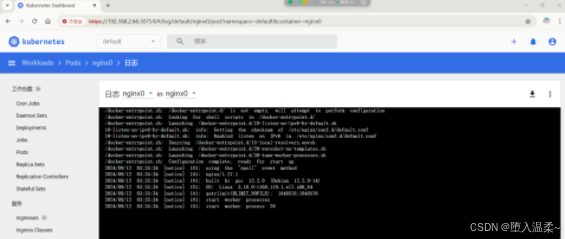

10.在访问页面中也可以查看日志

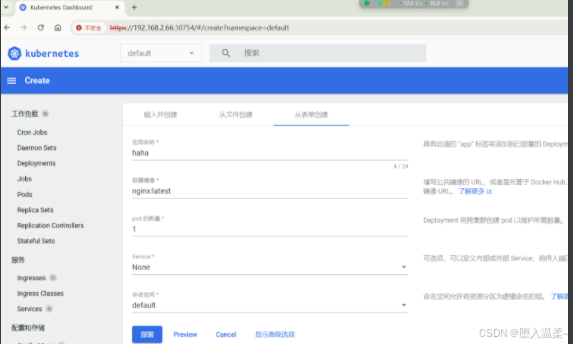

11.在访问页面中创建节点

12.在linux中可以查到

[root@k8s-master dashboard]# kubectl get po -Aowide|grep haha default haha-96567ff6f-rj7fq 1/1 Running 0 88s 172.16.58.196 k8s-node02 <none> <none>

VIII. kube-proxy

1. Switch to IPVS mode

    [root@k8s-master dashboard]# kubectl get pods -A|grep proxy
    kube-system   kube-proxy-gtt6v   1/1   Running   5 (3h18m ago)   23h
    kube-system   kube-proxy-snr8v   1/1   Running   5 (3h21m ago)   22h
    kube-system   kube-proxy-z5hrs   1/1   Running   5 (3h23m ago)   22h
    [root@k8s-master dashboard]# kubectl edit cm kube-proxy -n kube-system
    # line 54
    mode: ipvs

2. Restart the kube-proxy pods so they pick up the change

    [root@k8s-master ~]# kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system
    daemonset.apps/kube-proxy patched
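The patch works because it changes an annotation in the pod template: any change to the template makes the DaemonSet replace its pods, and the new pods read the updated ConfigMap. The escaped one-liner is hard to read, so the sketch below builds the same JSON in a helper; `restart_patch` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Build the patch body: a pod-template annotation named "date" whose value
# is a timestamp. The value defaults to the current Unix time and can be
# passed explicitly, which makes the output predictable.
restart_patch() {
    local stamp="${1:-$(date +%s)}"
    printf '{"spec":{"template":{"metadata":{"annotations":{"date":"%s"}}}}}\n' "$stamp"
}

restart_patch 1726111946

# On a live cluster (not run here):
#   kubectl patch daemonset kube-proxy -n kube-system -p "$(restart_patch)"
# or, without a hand-written patch:
#   kubectl rollout restart daemonset kube-proxy -n kube-system
```

`kubectl rollout restart` achieves the same effect by stamping its own restart annotation on the pod template.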

3. Test the proxy mode

    [root@k8s-master ~]# curl 127.0.0.1:10249/proxyMode
    ipvs

4. Check the service network

    [root@k8s-master ~]# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   23h
    [root@k8s-master ~]# kubectl get po -Aowide

5. Verify that resources can be created

    [root@k8s-master ~]# kubectl get po -A|wc -l
    19
    # create a test deployment
    [root@k8s-master ~]# kubectl create deploy cluster-test0 --image=registry.cn-beijing.aliyuncs.com/dotbalo/debug-tools -- sleep 3600
    deployment.apps/cluster-test0 created
    [root@k8s-master ~]# kubectl get po -A|wc -l
    20
    [root@k8s-master ~]# kubectl get po -A|grep cluster-test0
    default   cluster-test0-58689d5d5d-qr4mv   1/1   Running   0   23s

6. Enter the pod that was created

    [root@k8s-master ~]# kubectl exec -it cluster-test0-58689d5d5d-qr4mv -- bash
    (07:26 cluster-test0-58689d5d5d-qr4mv:/) ifconfig
    eth0      Link encap:Ethernet  HWaddr 82:74:40:7f:27:b1
              inet addr:172.16.58.201  Bcast:0.0.0.0  Mask:255.255.255.255
              inet6 addr: fe80::8074:40ff:fe7f:27b1/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1480  Metric:1
              RX packets:5 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:446 (446.0 B)  TX bytes:656 (656.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

    (07:26 cluster-test0-58689d5d5d-qr4mv:/) nslookup kubernetes
    Server:    10.96.0.10
    Address:   10.96.0.10#53

    Name:      kubernetes.default.svc.cluster.local
    Address:   10.96.0.1

    (07:27 cluster-test0-58689d5d5d-qr4mv:/) nslookup kube-dns.kube-system
    Server:    10.96.0.10
    Address:   10.96.0.10#53

    Name:      kube-dns.kube-system.svc.cluster.local
    Address:   10.96.0.10

    (07:27 cluster-test0-58689d5d5d-qr4mv:/) exit
    exit

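7. Access port 443 of the kubernetes service and port 53 of the DNS service

    [root@k8s-master ~]# curl -k https://10.96.0.1:443
    {
      "kind": "Status",
      "apiVersion": "v1",
      "metadata": {},
      "status": "Failure",
      "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
      "reason": "Forbidden",
      "details": {},
      "code": 403
    }
    [root@k8s-master ~]# curl http://10.96.0.10:53
    curl: (52) Empty reply from server

Both replies show that the service addresses are reachable: the API server answers (and rejects the anonymous request with 403), and the DNS port accepts the connection even though it does not speak HTTP.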

IX. Kubernetes command completion

1. Install command completion

    [root@k8s-master ~]# yum -y install bash-completion
    [root@k8s-master ~]# source <(kubectl completion bash)
    # try out completion
    # create a pod
    [root@k8s-master ~]# kubectl run nginx1 --image nginx
    pod/nginx1 created
    [root@k8s-master ~]# kubectl get po -A
    # delete the pod
    [root@k8s-master ~]# kubectl delete pod nginx1
    pod "nginx1" deleted
    # load completion automatically in every new shell
    [root@k8s-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

2. Basic Kubernetes commands

    # delete a pod
    [root@k8s-master ~]# kubectl delete pod cluster-test-64b7b9cbf-jjmmh
    pod "cluster-test-64b7b9cbf-jjmmh" deleted
    # a pod is still there: the Deployment that owns it creates a replacement
    [root@k8s-master ~]# kubectl get po -A|grep cluster-test
    default   cluster-test-64b7b9cbf-dnn2m     0/1   ContainerCreating   0   20s
    default   cluster-test0-58689d5d5d-qr4mv   1/1   Running             0   34m
    # delete the Deployment instead
    [root@k8s-master ~]# kubectl delete deployment cluster-test
    deployment.apps "cluster-test" deleted
    # now it is gone
    [root@k8s-master ~]# kubectl get po -A|grep cluster-test

3. Write a YAML file to create a pod

    [root@k8s-master ~]# vim pods/abc.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox-sleep
    spec:
      containers:
      - name: busybox
        image: busybox:1.28
        args:
        - sleep
        - "1000"

    [root@k8s-master ~]# cd pods/
    [root@k8s-master pods]# ls
    abc.yaml  components.yaml
    [root@k8s-master pods]# kubectl create -f abc.yaml
    pod/busybox-sleep created
    [root@k8s-master pods]# kubectl get po -A|grep busybox-sleep
    default   busybox-sleep   1/1   Running   0   3s
    [root@k8s-master pods]# kubectl delete pod busybox-sleep
    pod "busybox-sleep" deleted
    [root@k8s-master pods]# kubectl get po -A|grep busy

4. Write a JSON file

    [root@k8s-master ~]# vim pods/abc.json
    {
      "apiVersion": "v1",
      "kind": "Pod",
      "metadata": {
        "name": "busybox-sleep000"
      },
      "spec": {
        "containers": [
          {
            "name": "busybox000",
            "image": "busybox:1.28",
            "args": [
              "sleep",
              "1000"
            ]
          }
        ]
      }
    }