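[From Scratch] 2. Building AI Services on a Dell PowerEdge (Side Story)

The Dell PowerEdge compute server I requested back in February of this year has only just arrived. To be fair, before you showed up, the CPU and I were getting along fine. With multi-stage recall + llamafile + our in-house CPU compute cluster, it felt like we had narrowed the gap with NVIDIA-based solutions 😒. But since you arrived, everything has changed. All I can say is that having resources feels great… 😚

Honestly, many vendors now offer cloud compute platforms that you can use as soon as you pay, with 24-hour customer support, which should be the best option for a small company like ours. But customer data must not leave the premises, and the boss has a few ideas of his own (you think the server is just for your department? Won't you support the other departments? Isn't the company supposed to innovate? The boss wants every cent used to the fullest), so we had no choice but to build it locally.

Within a limited budget, we chose a Dell PowerEdge + NVIDIA RTX A6000 configuration, as shown in the figure below:

For internal use (development, testing, image generation, and so on) this is enough. Once the bare-metal machine arrived, we ran the hardware checks and acceptance tests first, as shown in the figure below: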

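After the Final Check, the operating system can be installed through DellEMC. Following the "never use the latest" principle, I chose Ubuntu Server 22.04.5 LTS. The installation is straightforward: just follow the installer.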

Update the system right after installation. The install wizard had already configured a mirror in China, so the update went smoothly.

sudo apt-get update && sudo apt-get upgrade -y && sudo apt-get dist-upgrade -y && sudo apt autoremove && sudo apt autoclean

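Once all security updates are installed, the next step is the NVIDIA driver.

Ubuntu Server has no GDM graphical desktop, so the installation differs from the Linux Mint machine I use at home.

1. Install the NVIDIA Driver

First, check whether the GPU is recognized, as shown below: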

pai@pai:~$ lspci | grep -i nvidia

18:00.0 VGA compatible controller: NVIDIA Corporation GA102GL [RTX A6000] (rev a1)

18:00.1 Audio device: NVIDIA Corporation GA102 High Definition Audio Controller (rev a1)

OK. The installation compiles code with gcc, so install gcc and make first, as shown below:

pai@pai:~$ sudo apt install gcc make

[sudo] password for pai:

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

cpp cpp-11 fontconfig-config fonts-dejavu-core gcc-11 gcc-11-base libasan6 libatomic1 libc-dev-bin libc-devtools libc6-dev libcc1-0 libcrypt-dev libdeflate0 libfontconfig1

libgcc-11-dev libgd3 libgomp1 libisl23 libitm1 libjbig0 libjpeg-turbo8 libjpeg8 liblsan0 libmpc3 libnsl-dev libquadmath0 libtiff5 libtirpc-dev libtsan0 libubsan1 libwebp7

libxpm4 linux-libc-dev manpages-dev rpcsvc-proto

Suggested packages:

cpp-doc gcc-11-locales gcc-multilib autoconf automake libtool flex bison gdb gcc-doc gcc-11-multilib gcc-11-doc glibc-doc libgd-tools make-doc

The following NEW packages will be installed:

cpp cpp-11 fontconfig-config fonts-dejavu-core gcc gcc-11 gcc-11-base libasan6 libatomic1 libc-dev-bin libc-devtools libc6-dev libcc1-0 libcrypt-dev libdeflate0 libfontconfig1

libgcc-11-dev libgd3 libgomp1 libisl23 libitm1 libjbig0 libjpeg-turbo8 libjpeg8 liblsan0 libmpc3 libnsl-dev libquadmath0 libtiff5 libtirpc-dev libtsan0 libubsan1 libwebp7

libxpm4 linux-libc-dev make manpages-dev rpcsvc-proto

0 upgraded, 38 newly installed, 0 to remove and 1 not upgraded.

Need to get 48.8 MB of archives.

After this operation, 153 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://cn.archive.ubuntu.com/ubuntu jammy-updates/main amd64 gcc-11-base amd64 11.4.0-1ubuntu1~22.04 [20.2 kB]

...

Next, search for NVIDIA drivers (the ubuntu-drivers devices command lists the driver versions Ubuntu offers for the device and marks the recommended one):

pai@pai:~$ ubuntu-drivers devices

ERROR:root:aplay command not found

== /sys/devices/pci0000:17/0000:17:02.0/0000:18:00.0 ==

modalias : pci:v000010DEd00002230sv00001028sd00001459bc03sc00i00

vendor : NVIDIA Corporation

model : GA102GL [RTX A6000]

driver : nvidia-driver-535-server - distro non-free

driver : nvidia-driver-545 - distro non-free

driver : nvidia-driver-470-server - distro non-free

driver : nvidia-driver-470 - distro non-free

driver : nvidia-driver-535 - distro non-free

driver : nvidia-driver-535-server-open - distro non-free

driver : nvidia-driver-550-open - distro non-free

driver : nvidia-driver-535-open - distro non-free

driver : nvidia-driver-545-open - distro non-free

driver : nvidia-driver-550 - distro non-free recommended

driver : xserver-xorg-video-nouveau - distro free builtin

Look for the entry marked "recommended" and install that version:

pai@pai:~$ sudo apt-get install nvidia-driver-550

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

...
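The "look for recommended" step can also be scripted. The following is a minimal sketch rather than the author's method: recommended_driver is a hypothetical helper name, and it assumes the output layout shown above (a "driver" line whose last word is "recommended").

```shell
# Print the package name from the line that ubuntu-drivers marks "recommended".
# Reads `ubuntu-drivers devices` output on stdin, so it can be tried on pasted text.
recommended_driver() {
    awk '$1 == "driver" && $NF == "recommended" { print $3; exit }'
}

# Usage on the real machine (shown for reference, not executed here):
#   pkg=$(ubuntu-drivers devices 2>/dev/null | recommended_driver)
#   sudo apt-get install "$pkg"
```

Reading from stdin keeps the helper independent of the hardware; on the server it simply sits behind the real command, as in the comment.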

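After the driver is installed, reboot the system (or log out and back in, though a reboot is the more reliable option) and nvidia-smi will show the GPU information: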

pai@pai:~$ nvidia-smi

Wed Oct 16 06:42:03 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.107.02 Driver Version: 550.107.02 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX A6000 Off | 00000000:18:00.0 Off | Off |

| 30% 39C P8 12W / 300W | 2MiB / 49140MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

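OK, with the GPU driver installed, next comes CUDA.

PS: nvidia-smi already reports CUDA 12.4, but that field is the highest CUDA version the installed driver supports, not a toolkit that was installed along with it. To get the best results, I chose to install the latest CUDA toolkit.

URL: https://developer.nvidia.com/cuda-downloads

After selecting the options for your system step by step, the CUDA installation instructions appear at the bottom of the page; just follow them.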

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-ubuntu2204.pin

sudo mv cuda-ubuntu2204.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/12.6.2/local_installers/cuda-repo-ubuntu2204-12-6-local_12.6.2-560.35.03-1_amd64.deb

sudo dpkg -i cuda-repo-ubuntu2204-12-6-local_12.6.2-560.35.03-1_amd64.deb

sudo cp /var/cuda-repo-ubuntu2204-12-6-local/cuda-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get -y install cuda-toolkit-12-6

PS: after running the commands above, nvidia-smi still reports CUDA 12.4, because the driver itself has not changed. Don't worry, the fix comes later.
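As a side note, the "CUDA Version" field in nvidia-smi describes what the driver supports, while the toolkit ships its own compiler, nvcc, which reports the toolkit release. A minimal sketch for checking the toolkit directly, assuming the deb packages placed it under /usr/local/cuda-12.6 (the usual location, but verify on your machine); toolkit_release is a hypothetical helper name:

```shell
# Extract "12.6" from the "release 12.6, V12.6.x" line of `nvcc --version` (read on stdin).
toolkit_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\),.*/\1/p'
}

# Assumed install prefix; override CUDA_HOME if the toolkit lives elsewhere.
CUDA_HOME=${CUDA_HOME:-/usr/local/cuda-12.6}

if [ -x "$CUDA_HOME/bin/nvcc" ]; then
    echo "toolkit release: $("$CUDA_HOME/bin/nvcc" --version | toolkit_release)"
else
    echo "nvcc not found under $CUDA_HOME/bin"
fi
```

If nvcc is present but not on the PATH, adding a line such as export PATH=/usr/local/cuda-12.6/bin:$PATH to ~/.bashrc is the usual remedy.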

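Next, install cuDNN, the deep learning acceleration library, as shown in the figure below:

URL: https://developer.nvidia.com/cudnn-downloads

Likewise, select your system details and follow the instructions on the page, as shown below: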

wget https://developer.download.nvidia.com/compute/cudnn/9.5.0/local_installers/cudnn-local-repo-ubuntu2204-9.5.0_1.0-1_amd64.deb

sudo dpkg -i cudnn-local-repo-ubuntu2204-9.5.0_1.0-1_amd64.deb

sudo cp /var/cudnn-local-repo-ubuntu2204-9.5.0/cudnn-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get -y install cudnn

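After installing CUDA and cuDNN, nvidia-smi shows no change in the GPU information. Searching for drivers again reveals a new third-party 560 driver (presumably provided by the NVIDIA repository added during the CUDA installation), now marked "recommended", as shown below: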

pai@pai:~$ ubuntu-drivers devices

ERROR:root:aplay command not found

== /sys/devices/pci0000:17/0000:17:02.0/0000:18:00.0 ==

modalias : pci:v000010DEd00002230sv00001028sd00001459bc03sc00i00

vendor : NVIDIA Corporation

model : GA102GL [RTX A6000]

driver : nvidia-driver-550 - distro non-free

driver : nvidia-driver-535-open - distro non-free

driver : nvidia-driver-470-server - distro non-free

driver : nvidia-driver-545 - distro non-free

driver : nvidia-driver-545-open - distro non-free

driver : nvidia-driver-560 - third-party non-free recommended

driver : nvidia-driver-535-server - distro non-free

driver : nvidia-driver-470 - distro non-free

driver : nvidia-driver-535-server-open - distro non-free

driver : nvidia-driver-535 - distro non-free

driver : nvidia-driver-550-open - distro non-free

driver : nvidia-driver-560-open - third-party non-free

driver : xserver-xorg-video-nouveau - distro free builtin

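Install the 560 driver at this point (for example with sudo apt-get install nvidia-driver-560) and reboot. The final result looks like this: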

pai@pai:~$ nvidia-smi

Wed Oct 16 07:50:07 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 560.35.03 Driver Version: 560.35.03 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX A6000 Off | 00000000:18:00.0 Off | Off |

| 30% 32C P8 12W / 300W | 2MiB / 49140MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

pai@pai:~$ ls /usr/lib/x86_64-linux-gnu/libcudnn*

/usr/lib/x86_64-linux-gnu/libcudnn_adv.so /usr/lib/x86_64-linux-gnu/libcudnn_engines_runtime_compiled_static_v9.a

/usr/lib/x86_64-linux-gnu/libcudnn_adv.so.9 /usr/lib/x86_64-linux-gnu/libcudnn_graph.so

/usr/lib/x86_64-linux-gnu/libcudnn_adv.so.9.5.0 /usr/lib/x86_64-linux-gnu/libcudnn_graph.so.9

/usr/lib/x86_64-linux-gnu/libcudnn_adv_static.a /usr/lib/x86_64-linux-gnu/libcudnn_graph.so.9.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_adv_static_v9.a /usr/lib/x86_64-linux-gnu/libcudnn_graph_static.a

/usr/lib/x86_64-linux-gnu/libcudnn_cnn.so /usr/lib/x86_64-linux-gnu/libcudnn_graph_static_v9.a

/usr/lib/x86_64-linux-gnu/libcudnn_cnn.so.9 /usr/lib/x86_64-linux-gnu/libcudnn_heuristic.so

/usr/lib/x86_64-linux-gnu/libcudnn_cnn.so.9.5.0 /usr/lib/x86_64-linux-gnu/libcudnn_heuristic.so.9

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_static.a /usr/lib/x86_64-linux-gnu/libcudnn_heuristic.so.9.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_cnn_static_v9.a /usr/lib/x86_64-linux-gnu/libcudnn_heuristic_static.a

/usr/lib/x86_64-linux-gnu/libcudnn_engines_precompiled.so /usr/lib/x86_64-linux-gnu/libcudnn_heuristic_static_v9.a

/usr/lib/x86_64-linux-gnu/libcudnn_engines_precompiled.so.9 /usr/lib/x86_64-linux-gnu/libcudnn_ops.so

/usr/lib/x86_64-linux-gnu/libcudnn_engines_precompiled.so.9.5.0 /usr/lib/x86_64-linux-gnu/libcudnn_ops.so.9

/usr/lib/x86_64-linux-gnu/libcudnn_engines_precompiled_static.a /usr/lib/x86_64-linux-gnu/libcudnn_ops.so.9.5.0

/usr/lib/x86_64-linux-gnu/libcudnn_engines_precompiled_static_v9.a /usr/lib/x86_64-linux-gnu/libcudnn_ops_static.a

/usr/lib/x86_64-linux-gnu/libcudnn_engines_runtime_compiled.so /usr/lib/x86_64-linux-gnu/libcudnn_ops_static_v9.a

/usr/lib/x86_64-linux-gnu/libcudnn_engines_runtime_compiled.so.9 /usr/lib/x86_64-linux-gnu/libcudnn.so

/usr/lib/x86_64-linux-gnu/libcudnn_engines_runtime_compiled.so.9.5.0 /usr/lib/x86_64-linux-gnu/libcudnn.so.9

/usr/lib/x86_64-linux-gnu/libcudnn_engines_runtime_compiled_static.a /usr/lib/x86_64-linux-gnu/libcudnn.so.9.5.0
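The listing above already shows the cuDNN version in the library file names. As a small sketch, assuming the same naming scheme (libcudnn.so.MAJOR.MINOR.PATCH), a hypothetical helper cudnn_version can pull that number out of a list of paths:

```shell
# Read library paths on stdin (one per line) and print the full version
# taken from the first "libcudnn.so.X.Y.Z" entry.
cudnn_version() {
    sed -n 's/.*libcudnn\.so\.\([0-9][0-9]*\.[0-9][0-9]*\.[0-9][0-9]*\)$/\1/p' | head -n 1
}

# Usage on the server (not executed here); ls prints one path per line when piped:
#   ls /usr/lib/x86_64-linux-gnu/libcudnn* | cudnn_version
```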

OK,一切准备就绪。那么还是老规矩通过 Ollama 实时推理,观察 GPU 和显存使用情况。

2. 部署 Ollama 应用

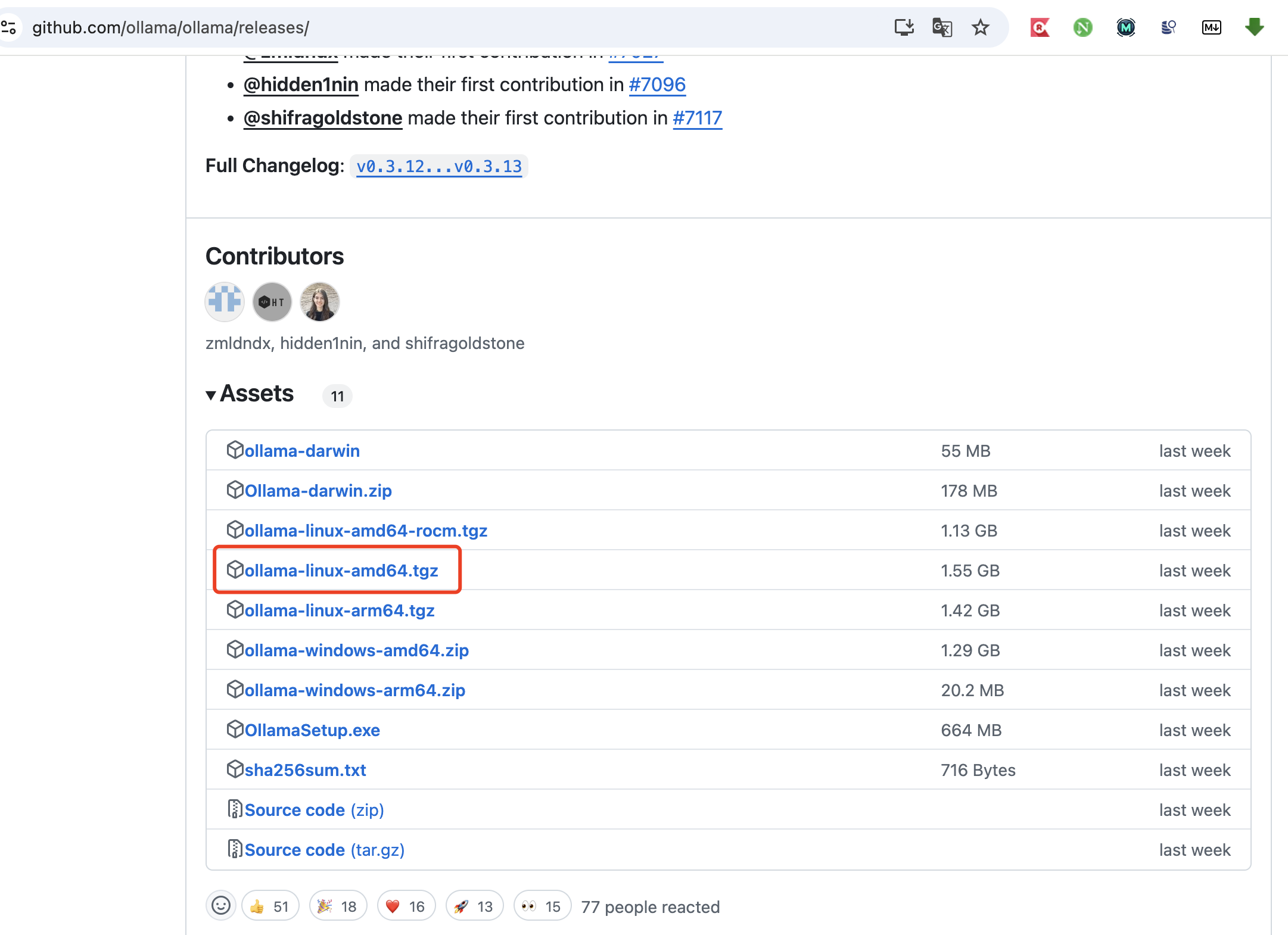

因为网络问题,Ollama 只能采用离线方式安装,如下图:

访问地址:https://github.com/ollama/ollama/releases/

Find ollama-linux-amd64.tgz under the v0.3.12 release, download it, and extract it with tar -zxvf. After extraction the ollama executable sits under ./bin; move it to /usr/local/bin, as shown below:

sudo mv ./bin/ollama /usr/local/bin/ollama

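With the offline install, however, Ollama is not set up to start in the background on its own, so for convenience I wrote a startup script: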

#!/bin/sh

nohup ollama serve > /home/pai/logs/ollama/start_run.log 2>&1 &
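An alternative to the nohup script is a systemd unit, which is roughly what Ollama's online installer sets up and what the manual install skips. The sketch below is an example under stated assumptions rather than the author's setup: write_ollama_unit is a hypothetical helper, and the binary path and User=pai are taken from this article's environment.

```shell
# Write a minimal systemd unit for Ollama to the path given as $1.
# ExecStart and User are assumptions based on this article's environment.
write_ollama_unit() {
    cat > "$1" <<'EOF'
[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=pai
Restart=always
RestartSec=3

[Install]
WantedBy=multi-user.target
EOF
}

# Installing it on the server (shown for reference, not executed here):
#   write_ollama_unit /tmp/ollama.service
#   sudo install -m 644 /tmp/ollama.service /etc/systemd/system/ollama.service
#   sudo systemctl daemon-reload
#   sudo systemctl enable --now ollama
```

With a unit in place, the service restarts after a crash or reboot and its logs go to the journal instead of a hand-managed log file.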

Next, download a model with ollama pull; here I chose qwen2.5:7b-instruct-q5_K_M. Once the download finishes, run it with ollama run. Finally, to watch GPU resource usage, install nvtop.
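The paragraph above maps to a handful of commands, listed in the comments below. For a number that can be logged instead of watched in nvtop, nvidia-smi can print memory figures as CSV; vram_percent is a hypothetical helper that turns one such line into a percentage.

```shell
# Commands described in the text (run on the server, not executed here):
#   ollama pull qwen2.5:7b-instruct-q5_K_M
#   ollama run qwen2.5:7b-instruct-q5_K_M
#   sudo apt install nvtop && nvtop

# Convert one "used, total" CSV line (values in MiB) into an integer percentage.
vram_percent() {
    awk -F', *' 'NF == 2 && $2 > 0 { printf "%d\n", ($1 * 100) / $2 }'
}

# Example on the server:
#   nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits | vram_percent
```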

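OK, let's see how it performs, as shown in the figure below:

It works reasonably well.

3. Deploy Stable Diffusion

Our UI engineer has been nagging me every day to set up an AI image-generation tool for him (he dreads revising the boss's mock-ups yet again). Now that the machine is here, the nagging has only intensified. We had previously bought him a laptop with a 3050 GPU to run Stable Diffusion (hereafter "SD") locally, but his workload is simply too heavy, and seeing him beg everyone for help day after day, I decided to prioritize deploying SD on the server for him.

Because the server runs Ubuntu Server, the one-click installer by 秋叶 is not an option (that is what the UI engineer used before). So this time I deployed directly from the GitHub repository "AUTOMATIC1111/stable-diffusion-webui".

First, cloning large GitHub repositories from within China is generally not recommended (for well-known reasons the connection often drops). If you really need to, check whether a mirror exists on Gitee first. However, the Gitee mirror of "AUTOMATIC1111/stable-diffusion-webui" has not been updated for nearly half a year, so GitHub it is.

To work around the network problem, edit the /etc/hosts file. Before that, resolve github.com to find an IP that is fast from within China. I used the domestic speed test in the Chinaz webmaster tools (https://tool.chinaz.com/), as shown in the figure below:

Pick the fastest node IP, then edit the hosts file, as shown below: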

127.0.0.1 localhost

127.0.1.1 pai

20.205.243.166 github.com
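Editing /etc/hosts by hand works, but a pinned IP can go stale, so it helps if the edit can be repeated safely. A minimal sketch, assuming a hypothetical helper set_hosts_entry that rewrites a hosts-format file so that exactly one line maps the given host:

```shell
# Set "<ip> <host>" in the hosts-format file $3, replacing any existing
# non-comment line that already names <host>. Runs in a subshell so the
# helper's variables do not leak into the caller.
set_hosts_entry() (
    ip=$1 host=$2 file=$3
    tmp=$(mktemp) || exit 1
    awk -v h="$host" '{
        keep = 1
        if ($1 !~ /^#/)
            for (i = 2; i <= NF; i++)
                if ($i == h) keep = 0
        if (keep) print
    }' "$file" > "$tmp"
    printf '%s %s\n' "$ip" "$host" >> "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
)

# Usage on the server (not executed here); work on a copy, then install it:
#   cp /etc/hosts /tmp/hosts.new
#   set_hosts_entry 20.205.243.166 github.com /tmp/hosts.new
#   sudo cp /tmp/hosts.new /etc/hosts
```

Working on a copy keeps a mistake from breaking name resolution while the file is half-written.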

After that, the clone worked fine, as shown below:

pai@pai:~/llm/sd$ git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

Cloning into 'stable-diffusion-webui'...

remote: Enumerating objects: 34605, done.

remote: Total 34605 (delta 0), reused 0 (delta 0), pack-reused 34605 (from 1)

Receiving objects: 100% (34605/34605), 35.31 MiB | 647.00 KiB/s, done.

Resolving deltas: 100% (24194/24194), done.

OK, first problem solved. Next, install the prerequisites as described in the official instructions, as shown below:

pai@pai:~/llm/sd/stable-diffusion-webui$ sudo apt install wget git python3 python3-venv libgl1 libglib2.0-0

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

...

Then run webui.sh to start the installation, as shown below:

pai@pai:~/llm/sd/stable-diffusion-webui$ ./webui.sh

################################################################

Install script for stable-diffusion + Web UI

Tested on Debian 11 (Bullseye), Fedora 34+ and openSUSE Leap 15.4 or newer.

################################################################

################################################################

Running on pai user

################################################################

################################################################

Repo already cloned, using it as install directory

################################################################

################################################################

Create and activate python venv

################################################################

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: pip in ./venv/lib/python3.10/site-packages (22.0.2)

Collecting pip

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/d4/55/90db48d85f7689ec6f81c0db0622d704306c5284850383c090e6c7195a5c/pip-24.2-py3-none-any.whl (1.8 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/1.8 MB 192.5 kB/s eta 0:00:00

Installing collected packages: pip

Attempting uninstall: pip

Found existing installation: pip 22.0.2

Uninstalling pip-22.0.2:

Successfully uninstalled pip-22.0.2

Successfully installed pip-24.2

################################################################

Launching launch.py...

################################################################

glibc version is 2.35

Cannot locate TCMalloc. Do you have tcmalloc or google-perftool installed on your system? (improves CPU memory usage)

Python 3.10.12 (main, Sep 11 2024, 15:47:36) [GCC 11.4.0]

Version: v1.10.1

Commit hash: 82a973c04367123ae98bd9abdf80d9eda9b910e2

Installing torch and torchvision

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple, https://download.pytorch.org/whl/cu121

When the installer reached PyTorch, the package turned out to be so large (2.2 GB) that the download frequently timed out, as shown below:

pai@pai:~/.pip$ "/home/pai/llm/sd/stable-diffusion-webui/venv/bin/python" -m pip install --index-url https://mirrors.aliyun.com/pypi/simple torch==2.1.2 torchvision==0.16.2 --extra-index-url https://download.pytorch.org/whl/cu121

Looking in indexes: https://mirrors.aliyun.com/pypi/simple, https://download.pytorch.org/whl/cu121

Collecting torch==2.1.2

Downloading https://download.pytorch.org/whl/cu121/torch-2.1.2%2Bcu121-cp310-cp310-linux_x86_64.whl (2200.7 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/2.2 GB 262.3 kB/s eta 2:18:20

ERROR: Exception:

...

TimeoutError: The read operation timed out

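I then tried "pip install -i" to temporarily use a mirror in China, but the file is so large that the download still took too long. In the end I downloaded it on my local machine with a multi-threaded downloader, uploaded it to the server, and continued the installation, as shown below: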

pai@pai:~/llm/sd/stable-diffusion-webui$ source venv/bin/activate

(venv)pai@pai:~/llm/sd/stable-diffusion-webui$ "/home/pai/llm/sd/stable-diffusion-webui/venv/bin/python" -m pip install torch-2.1.2+cu121-cp310-cp310-linux_x86_64.whl

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Processing ./torch-2.1.2+cu121-cp310-cp310-linux_x86_64.whl

Collecting filelock (from torch==2.1.2+cu121)

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/b9/f8/feced7779d755758a52d1f6635d990b8d98dc0a29fa568bbe0625f18fdf3/filelock-3.16.1-py3-none-any.whl (16 kB)

...

Installing collected packages: mpmath, typing-extensions, sympy, networkx, MarkupSafe, fsspec, filelock, triton, jinja2, torch

Successfully installed MarkupSafe-3.0.2 filelock-3.16.1 fsspec-2024.10.0 jinja2-3.1.4 mpmath-1.3.0 networkx-3.4.1 sympy-1.13.3 torch-2.1.2+cu121 triton-2.1.0 typing-extensions-4.12.2

...
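The download-elsewhere-then-upload workaround can be sketched as follows. This is an illustration, not the author's exact procedure: wheel_filename is a hypothetical helper, the server address is a placeholder, and curl stands in for whatever multi-threaded downloader was actually used. The file name matters because it carries the version (including the "+cu121" part), so the URL-encoded "%2B" is decoded back to "+".

```shell
# Derive the local file name from a wheel URL, decoding %2B back to "+".
wheel_filename() {
    basename "$1" | sed 's/%2[Bb]/+/g'
}

# Workflow sketch (shown for reference, not executed here):
#   url=https://download.pytorch.org/whl/cu121/torch-2.1.2%2Bcu121-cp310-cp310-linux_x86_64.whl
#   curl -L -C - -o "$(wheel_filename "$url")" "$url"    # -C - resumes a partial download
#   scp torch-2.1.2+cu121-cp310-cp310-linux_x86_64.whl pai@<server>:~/llm/sd/stable-diffusion-webui/
#   ./venv/bin/python -m pip install ./torch-2.1.2+cu121-cp310-cp310-linux_x86_64.whl
```

A resumable download is the main point: a dropped connection then costs minutes instead of a restart from zero.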

Good, second problem solved. Let's continue the installation:

(venv)pai@pai:~/llm/sd/stable-diffusion-webui$ ./webui.sh

...

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/12/90/3c9ff0512038035f59d279fddeb79f5f1eccd8859f06d6163c58798b9487/certifi-2024.8.30-py3-none-any.whl (167 kB)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in ./venv/lib/python3.10/site-packages (from sympy->torch==2.1.2) (1.3.0)

Installing collected packages: urllib3, pillow, numpy, idna, charset-normalizer, certifi, requests, torchvision

Successfully installed certifi-2024.8.30 charset-normalizer-3.4.0 idna-3.10 numpy-2.1.2 pillow-11.0.0 requests-2.32.3 torchvision-0.16.2+cu121 urllib3-2.2.3

Installing clip

Installing open_clip

Traceback (most recent call last):

File "/home/pai/llm/sd/stable-diffusion-webui/launch.py", line 48, in <module>

main()

File "/home/pai/llm/sd/stable-diffusion-webui/launch.py", line 39, in main

prepare_environment()

File "/home/pai/llm/sd/stable-diffusion-webui/modules/launch_utils.py", line 398, in prepare_environment

run_pip(f"install {openclip_package}", "open_clip")

File "/home/pai/llm/sd/stable-diffusion-webui/modules/launch_utils.py", line 144, in run_pip

return run(f'"{python}" -m pip {command} --prefer-binary{index_url_line}', desc=f"Installing {desc}", errdesc=f"Couldn't install {desc}", live=live)

File "/home/pai/llm/sd/stable-diffusion-webui/modules/launch_utils.py", line 116, in run

raise RuntimeError("\n".join(error_bits))

RuntimeError: Couldn't install open_clip.

Command: "/home/pai/llm/sd/stable-diffusion-webui/venv/bin/python3.10" -m pip install https://github.com/mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip --prefer-binary

Error code: 1

stdout: Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting https://github.com/mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

stderr: WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', RemoteDisconnected('Remote end closed connection without response'))': /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ConnectTimeoutError(<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7f468c3a1870>, 'Connection to github.com timed out. (connect timeout=15)')': /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ConnectTimeoutError(<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7f468c3a19f0>, 'Connection to github.com timed out. (connect timeout=15)')': /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

WARNING: Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ConnectTimeoutError(<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7f468c3a1ae0>, 'Connection to github.com timed out. (connect timeout=15)')': /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

WARNING: Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ConnectTimeoutError(<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7f468c3a1c60>, 'Connection to github.com timed out. (connect timeout=15)')': /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

ERROR: Could not install packages due to an OSError: HTTPSConnectionPool(host='github.com', port=443): Max retries exceeded with url: /mlfoundations/open_clip/archive/bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip (Caused by ConnectTimeoutError(<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7f468c3a1de0>, 'Connection to github.com timed out. (connect timeout=15)'))

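Uh… GitHub is unreachable again, and I no longer felt like fiddling with the hosts file. So, as with PyTorch, I downloaded the archive first and then installed it from the local file: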

(venv)pai@pai:~/software$ "/home/pai/llm/sd/stable-diffusion-webui/venv/bin/python3.10" -m pip install open_clip-bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Processing ./open_clip-bb6e834e9c70d9c27d0dc3ecedeebeaeb1ffad6b.zip

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

...

Created wheel for open_clip_torch: filename=open_clip_torch-2.7.0-py3-none-any.whl size=1442888 sha256=49a241efb7798cc9367df97ff51c870b9f28a25cae580295869b4ff6b172c247

Stored in directory: /home/pai/.cache/pip/wheels/1c/d1/e2/1ff5ef854ccdeddeb0e1695ca7fc1d17877fe9c4363f9d40d3

Successfully built open_clip_torch

Installing collected packages: sentencepiece, pyyaml, protobuf, packaging, huggingface-hub, open_clip_torch

Successfully installed huggingface-hub-0.26.0 open_clip_torch-2.7.0 packaging-24.1 protobuf-3.20.0 pyyaml-6.0.2 sentencepiece-0.2.0

Yes, everything finally installed. But rerunning webui.sh revealed a fourth problem, as shown below:

...

Cannot locate TCMalloc. Do you have tcmalloc or google-perftool installed on your system? (improves CPU memory usage)

...

It says TCMalloc cannot be located. I ran into this in an earlier project as well, and the fix is simple: install google-perftools.

sudo apt install --no-install-recommends google-perftools

...

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

libgoogle-perftools4 libtcmalloc-minimal4

Suggested packages:

libgoogle-perftools-dev

Recommended packages:

graphviz gv

The following NEW packages will be installed:

google-perftools libgoogle-perftools4 libtcmalloc-minimal4

...

Right after that came the fifth problem: "no module 'xformers'"

...

Launching Web UI with arguments:

The cache for model files in Transformers v4.22.0 has been updated. Migrating your old cache. This is a one-time only operation. You can interrupt this and resume the migration later on by calling `transformers.utils.move_cache()`.

0it [00:00, ?it/s]

/home/pai/llm/sd/stable-diffusion-webui/venv/lib/python3.10/site-packages/timm/models/layers/__init__.py:48: FutureWarning: Importing from timm.models.layers is deprecated, please import via timm.layers

warnings.warn(f"Importing from {__name__} is deprecated, please import via timm.layers", FutureWarning)

no module 'xformers'. Processing without...

no module 'xformers'. Processing without...

No module 'xformers'. Proceeding without it.

This happens because the xformers library is not installed in the venv, so let's install it:

(venv)pai@pai:~/llm/sd/stable-diffusion-webui$ pip install -i https://mirrors.aliyun.com/pypi/simple xformers

Looking in indexes: https://mirrors.aliyun.com/pypi/simple

Collecting xformers

Using cached https://mirrors.aliyun.com/pypi/packages/ca/24/3335df4d7c363188705be2808eb7e4bacfbfe23e3a4671c8f311236036d1/xformers-0.0.28.post1-cp310-cp310-manylinux_2_28_x86_64.whl (16.7 MB)

Requirement already satisfied: numpy in ./venv/lib/python3.10/site-packages (from xformers) (1.26.2)

Collecting torch==2.4.1 (from xformers)

Using cached https://mirrors.aliyun.com/pypi/packages/41/05/d540049b1832d1062510efc6829634b7fbef5394c757d8312414fb65a3cb/torch-2.4.1-cp310-cp310-manylinux1_x86_64.whl (797.1 MB)

...

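During installation a compatibility error appeared: the latest xformers (0.0.28.post1) pulls in torch 2.4.1, which conflicts with the torchvision already installed.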

...

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

torchvision 0.16.2+cu121 requires torch==2.1.2, but you have torch 2.4.1 which is incompatible.

...

In the end, downgrading torch and xformers to mutually compatible versions resolved the problem.

...

torch 2.1.2

torchvision 0.16.2+cu121

xformers 0.0.23.post1

...
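The version set listed above can be pinned so that pip does not pull a newer torch again. A minimal sketch using a pip constraints file; write_sd_constraints and the file name are hypothetical, and the versions are simply the ones from the listing above:

```shell
# Write the known-good version set from this article to the file given as $1.
write_sd_constraints() {
    cat > "$1" <<'EOF'
torch==2.1.2
torchvision==0.16.2
xformers==0.0.23.post1
EOF
}

# Usage inside the activated venv (not executed here):
#   write_sd_constraints sd-constraints.txt
#   pip install -c sd-constraints.txt xformers
#   pip list | grep -E '^(torch|torchvision|xformers) '
```

With the constraints in effect, pip has to pick an xformers release that accepts torch 2.1.2 or fail loudly, rather than silently upgrading torch.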

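After rerunning webui.sh it started normally, but to actually use xformers you need to add the launch argument "--xformers".

OK, everything was ready, but then I found that remote access did not work…

After some digging, the fix is to add two more launch arguments: "--listen", so the server binds to 0.0.0.0 instead of only localhost, and "--gradio-auth", which protects the now-exposed UI with a username:password pair.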

...

Launching Web UI with arguments: --xformers --listen --gradio-auth pai:pai@2017

/home/pai/llm/sd/stable-diffusion-webui/venv/lib/python3.10/site-packages/timm/models/layers/__init__.py:48: FutureWarning: Importing from timm.models.layers is deprecated, please import via timm.layers

warnings.warn(f"Importing from {__name__} is deprecated, please import via timm.layers", FutureWarning)

Loading weights [6ce0161689] from /home/pai/llm/sd/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.safetensors

Running on local URL: http://0.0.0.0:7860

To create a public link, set `share=True` in `launch()`.

...
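Rather than typing the arguments on every start, they can live in webui-user.sh, which webui.sh reads at startup and which supports a COMMANDLINE_ARGS variable. A small sketch, where webui_args, SD_USER and SD_PASS are hypothetical names and the credentials come from the environment instead of being written into the file:

```shell
# Build the argument string used in this article; $1 is the user, $2 the password.
webui_args() {
    printf '%s %s %s %s:%s\n' --xformers --listen --gradio-auth "$1" "$2"
}

# In webui-user.sh (not executed here); SD_USER and SD_PASS are set elsewhere:
#   export COMMANDLINE_ARGS="$(webui_args "$SD_USER" "$SD_PASS")"
```

Keeping the password out of the file also keeps it out of version control and out of pasted logs.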

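Good. After a normal start, the UI in the browser turns out to be entirely in English; switching to Chinese requires installing a Chinese localization extension.

Go to Extensions -> Available, uncheck "localization", then click the "Load from" button. Boom!! It immediately errors out: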

*** Error completing request

*** Arguments: ('https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui-extensions/master/index.json', ['ads', 'installed'], 'hide', 'or', 0) {}

Traceback (most recent call last):

File "/usr/lib/python3.10/urllib/request.py", line 1348, in do_open

h.request(req.get_method(), req.selector, req.data, headers,

File "/usr/lib/python3.10/http/client.py", line 1283, in request

self._send_request(method, url, body, headers, encode_chunked)

File "/usr/lib/python3.10/http/client.py", line 1329, in _send_request

self.endheaders(body, encode_chunked=encode_chunked)

File "/usr/lib/python3.10/http/client.py", line 1278, in endheaders

self._send_output(message_body, encode_chunked=encode_chunked)

File "/usr/lib/python3.10/http/client.py", line 1038, in _send_output

self.send(msg)

File "/usr/lib/python3.10/http/client.py", line 976, in send

self.connect()

File "/usr/lib/python3.10/http/client.py", line 1448, in connect

super().connect()

File "/usr/lib/python3.10/http/client.py", line 942, in connect

self.sock = self._create_connection(

File "/usr/lib/python3.10/socket.py", line 845, in create_connection

raise err

File "/usr/lib/python3.10/socket.py", line 833, in create_connection

sock.connect(sa)

ConnectionRefusedError: [Errno 111] Connection refused

...

正常,https://raw.githubusercontent.com 怎么可能访问得了。还是老老实实从外部下载吧。

插件地址:https://github.com/hanamizuki-ai/stable-diffusion-webui-localization-zh_Hans.git

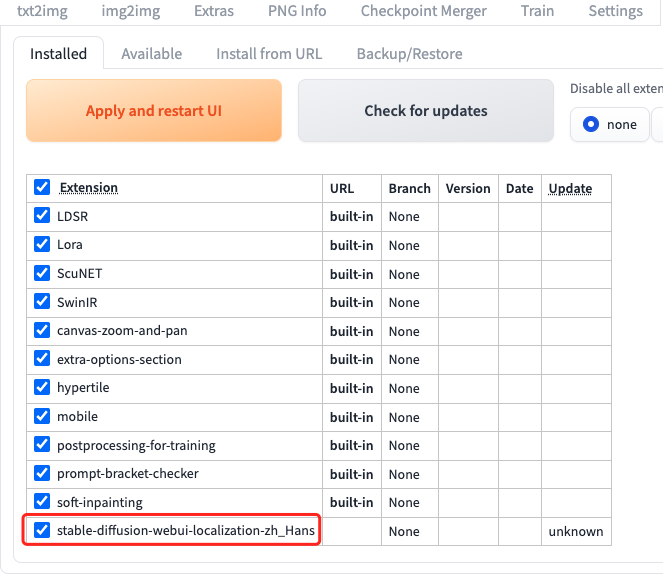

下载完成后解压并放到 extensions 文件夹。之后重启服务就能看到插件信息,如下图:

ll

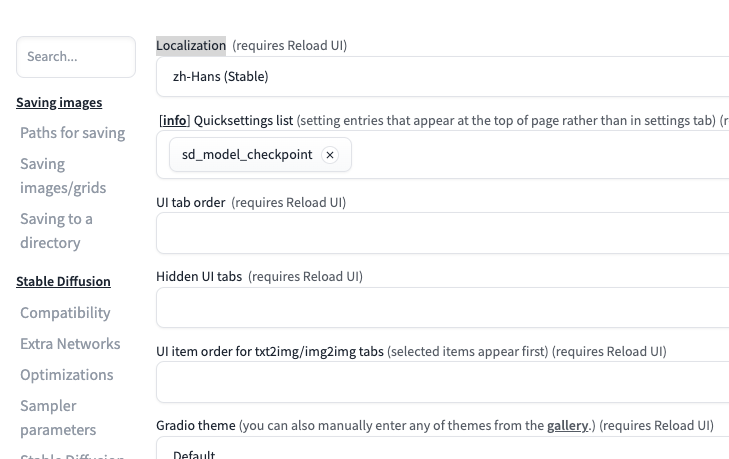

接着选择 Settings -> User interface,通过 Localization 可以选择界面语言,如下图:

之后点击“Apply settings”和“Reload UI”即可。

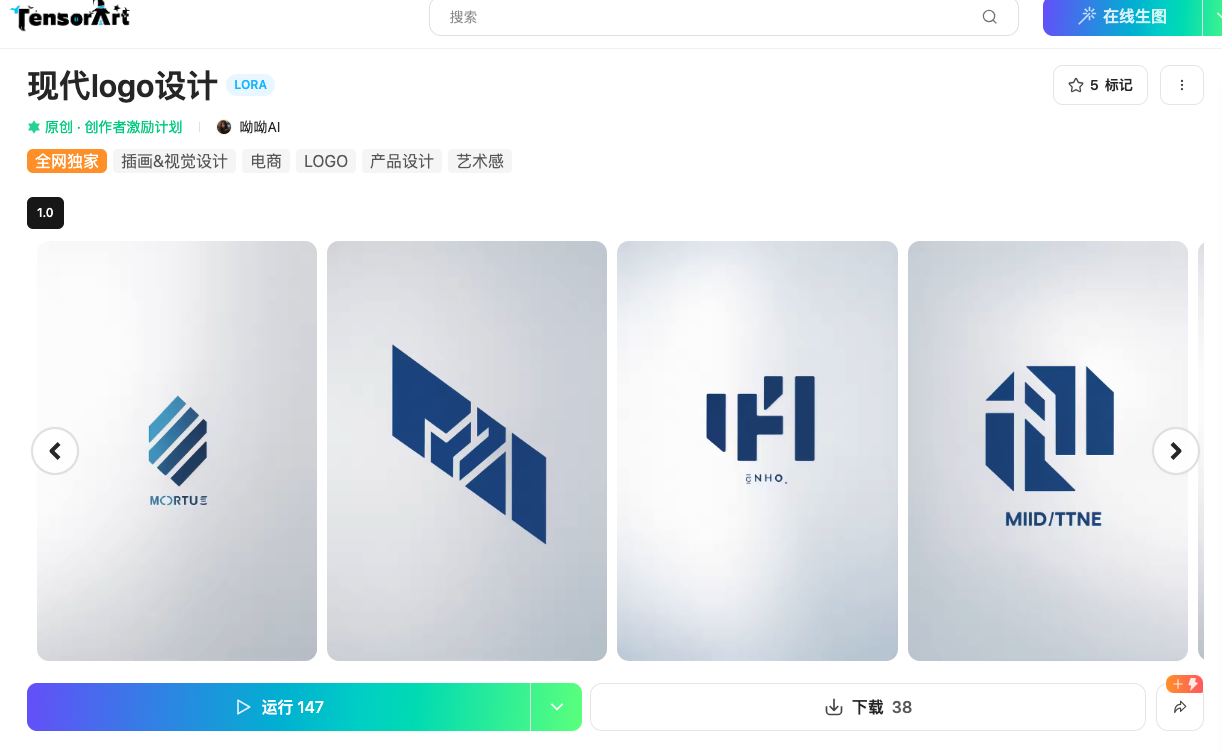

至此,SD 的部署就基本结束了。接下来我们还需要验证一下整个结果如何,我在吐司 TusiArt 下载了一个 logo 设计模型,如下图:

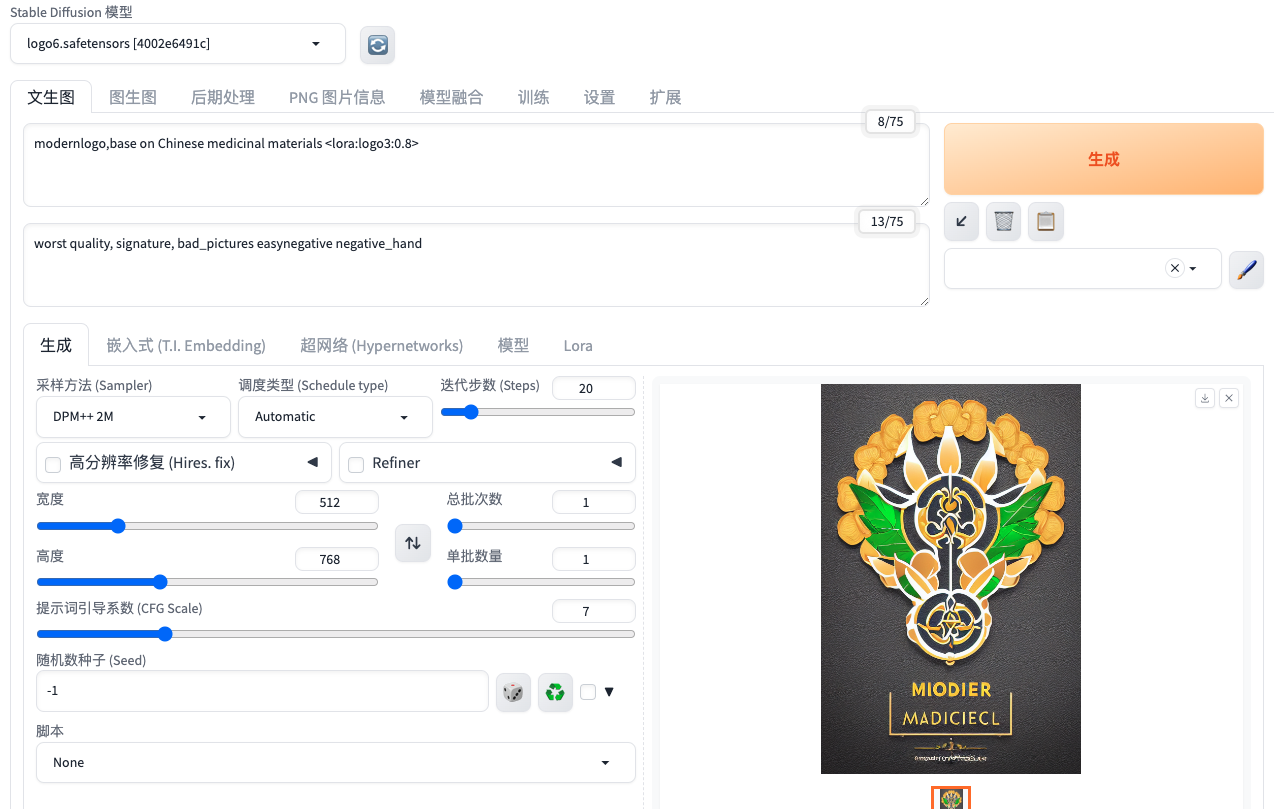

将下载后的 safetensors 文件上传到 SD 的对应目录,之后就可以试试文生图的效果了,如下图:

OK,效果还算可以,剩下的就让 UI 工程师自己迁移模型就可以了。

(未完待续…)