Deploying Ceph with Rook on K8S 1.28.2 (Lab)

Original article: Deploying Ceph with Rook on K8S 1.28.2 (Lab) | Yan Qianyi's Blog

Rook supports Kubernetes v1.22 or later.

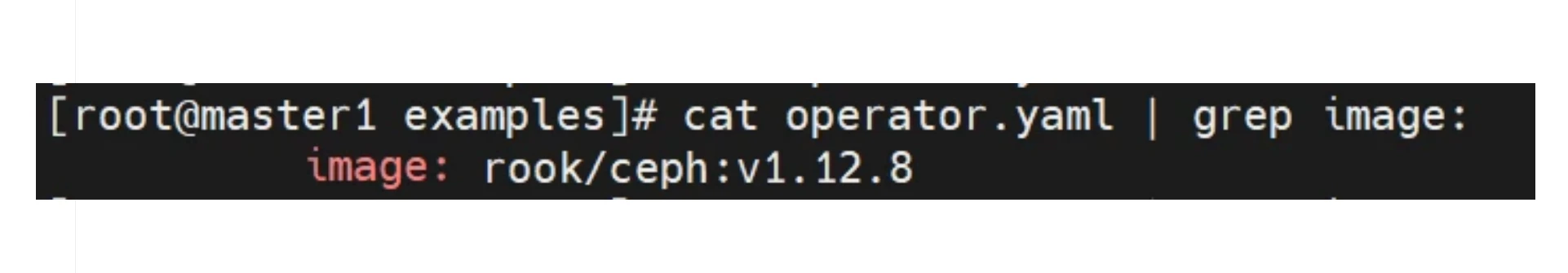

Rook version: 1.12.8

K8S version: 1.28.2

Ceph version deployed: Quincy

| Hostname | IP (NAT) | OS | Disk | New disk | Memory |
|---|---|---|---|---|---|
| master1 | 192.168.48.101 | CentOS 7.9 | 100G | 100G | 4G |
| master2 | 192.168.48.102 | CentOS 7.9 | 100G | None | 4G |
| master3 | 192.168.48.103 | CentOS 7.9 | 100G | None | 4G |
| node01 | 192.168.48.104 | CentOS 7.9 | 100G | 100G | 6G |
| node02 | 192.168.48.105 | CentOS 7.9 | 100G | 100G | 6G |

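I have five machines. A three-node Ceph cluster would normally run on three worker nodes. To keep testing simple, I deploy it only on master1, node01, and node02, so each of these three machines needs an additional physical disk.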

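Note: before you start, make sure the taint has been removed from the master node, because master1 will host an OSD.

[How to remove the taint]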
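The linked taint-removal instructions are not reproduced on this page. The sketch below assumes a kubeadm-built K8S 1.28 cluster, where control-plane nodes carry the `node-role.kubernetes.io/control-plane:NoSchedule` taint. It builds and runs the matching `kubectl taint` command. The helper names are hypothetical.

```python
import subprocess

# Taint kubeadm places on control-plane nodes in K8S 1.25+ (assumed default)
CONTROL_PLANE_TAINT = "node-role.kubernetes.io/control-plane:NoSchedule"


def untaint_command(node: str, taint: str = CONTROL_PLANE_TAINT) -> list[str]:
    """Build the kubectl argv that removes `taint` from `node`.

    The trailing "-" tells `kubectl taint` to remove the taint rather than add it.
    """
    return ["kubectl", "taint", "nodes", node, f"{taint}-"]


def untaint(node: str) -> None:
    """Run the command against the current kubeconfig context."""
    subprocess.run(untaint_command(node), check=True)
```

In this lab only master1 needs it: `untaint("master1")`. Afterwards, `kubectl describe node master1` should no longer list that taint.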

All of the following steps are performed on the master node.

Preparation

Clone the repository

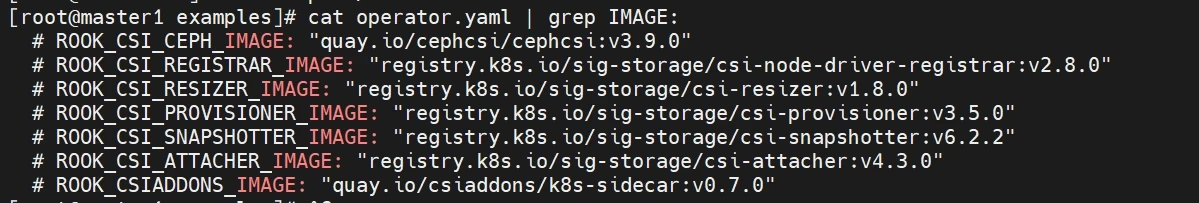
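The clone command itself was lost from the page. The sketch below assumes the official GitHub repository and the v1.12.8 tag used in this lab. It clones into /root/rook so the later paths (/root/rook/deploy/examples/...) line up.

```python
import subprocess
from pathlib import PurePosixPath

ROOK_REPO = "https://github.com/rook/rook.git"
ROOK_TAG = "v1.12.8"  # the Rook release used throughout this lab


def clone_command(dest: str = "/root/rook", tag: str = ROOK_TAG) -> list[str]:
    # --single-branch --branch <tag> checks out the tagged release without fetching every branch
    return ["git", "clone", "--single-branch", "--branch", tag, ROOK_REPO, dest]


def examples_dir(dest: str = "/root/rook") -> PurePosixPath:
    # crds.yaml, common.yaml, operator.yaml, cluster.yaml, etc. live under deploy/examples
    return PurePosixPath(dest) / "deploy" / "examples"


def clone(dest: str = "/root/rook") -> None:
    subprocess.run(clone_command(dest), check=True)
```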

Check the required images

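Almost all of these images are hosted on overseas registries. I worked around this by building a mirror registry with Alibaba Cloud and GitHub; the entries below point to images I built in my own private registry.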
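The post does not show the private registry address, so `registry.example.com/myns` below is a placeholder. This is a minimal sketch of the rewrite step. It maps each upstream image to the same name:tag under a flat private namespace, then substitutes those references in a manifest. Typical targets are the operator image in operator.yaml, the `ROOK_CSI_*_IMAGE` entries of its ConfigMap, and `spec.cephVersion.image` in cluster.yaml.

```python
# Placeholder for the private mirror; the real address is not given in this post.
MIRROR = "registry.example.com/myns"


def mirror_image(image: str, mirror_prefix: str = MIRROR) -> str:
    """Map e.g. 'quay.io/ceph/ceph:v17.2.6' to '<mirror_prefix>/ceph:v17.2.6'.

    Only the last path component (name:tag or name@digest) is kept, because a
    flat private namespace is assumed. Images that share a name:tag across
    different registries would collide.
    """
    last = image.split("/")[-1]
    return f"{mirror_prefix.rstrip('/')}/{last}"


def rewrite_manifest(text: str, images: list[str], mirror_prefix: str = MIRROR) -> str:
    """Replace every listed upstream image reference in `text` with its mirror."""
    # Longest references first, so a short reference never clobbers part of a longer one
    for image in sorted(images, key=len, reverse=True):
        text = text.replace(image, mirror_image(image, mirror_prefix))
    return text
```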

Enable automatic disk discovery (for later expansion)
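In Rook's operator.yaml this switch is the `ROOK_ENABLE_DISCOVERY_DAEMON` key of the `rook-ceph-operator-config` ConfigMap, which ships set to `"false"`. When it is enabled, the operator starts a rook-discover DaemonSet that reports newly attached disks. The sketch below flips the value in the file text (editing by hand works just as well). The helper names are hypothetical.

```python
import re
from pathlib import Path

# Matches the uncommented ConfigMap entry, e.g.  ROOK_ENABLE_DISCOVERY_DAEMON: "false"
_DISCOVERY = re.compile(
    r'^(\s*ROOK_ENABLE_DISCOVERY_DAEMON:\s*)"(?:true|false)"', re.MULTILINE
)


def enable_discovery(operator_yaml: str) -> str:
    """Return operator.yaml text with the discovery daemon switched on."""
    new_text, count = _DISCOVERY.subn(r'\1"true"', operator_yaml)
    if count == 0:
        raise ValueError("ROOK_ENABLE_DISCOVERY_DAEMON entry not found")
    return new_text


def patch_operator_file(path: str = "/root/rook/deploy/examples/operator.yaml") -> None:
    p = Path(path)
    p.write_text(enable_discovery(p.read_text()))
```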

Pulling the images in advance is recommended.

Install Rook and the Ceph cluster

Start the deployment

- Create the CRDs, common resources, and operator (crds.yaml, common.yaml, operator.yaml)

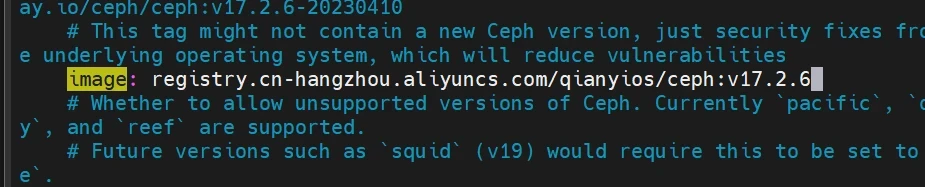

- Create the cluster (Ceph)

Modify the configuration: wait for the operator and discover containers to start, then configure the OSD nodes.

First check your disks with lsblk, then adjust the configuration file below to match your setup.

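The three nodes here are the three machines mentioned at the beginning; adjust them as needed. These must be the Kubernetes node names, which you can look up with kubectl get nodes.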
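The sketch below shows the `storage` section of cluster.yaml for this lab. It assumes the new 100G disk appears as `sdb` on master1, node01, and node02; that device name is an assumption, so confirm it with lsblk. The helper only builds the structure. The same keys go under `spec.storage` in cluster.yaml.

```python
import json


def storage_spec(node_devices: dict[str, list[str]]) -> dict:
    """Build the spec.storage section of a CephCluster.

    node_devices maps Kubernetes node names (as listed by `kubectl get nodes`)
    to raw device names reported by `lsblk`, e.g. {"node01": ["sdb"]}.
    """
    return {
        "useAllNodes": False,    # only the nodes listed below run OSDs
        "useAllDevices": False,  # only the listed devices are consumed
        "nodes": [
            {"name": node, "devices": [{"name": dev} for dev in devices]}
            for node, devices in node_devices.items()
        ],
    }


def render(spec: dict) -> str:
    # JSON is valid YAML, so the output can be compared key by key with cluster.yaml
    return json.dumps({"storage": spec}, indent=2)
```

For example: `print(render(storage_spec({"master1": ["sdb"], "node01": ["sdb"], "node02": ["sdb"]})))`.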

Deploy the cluster

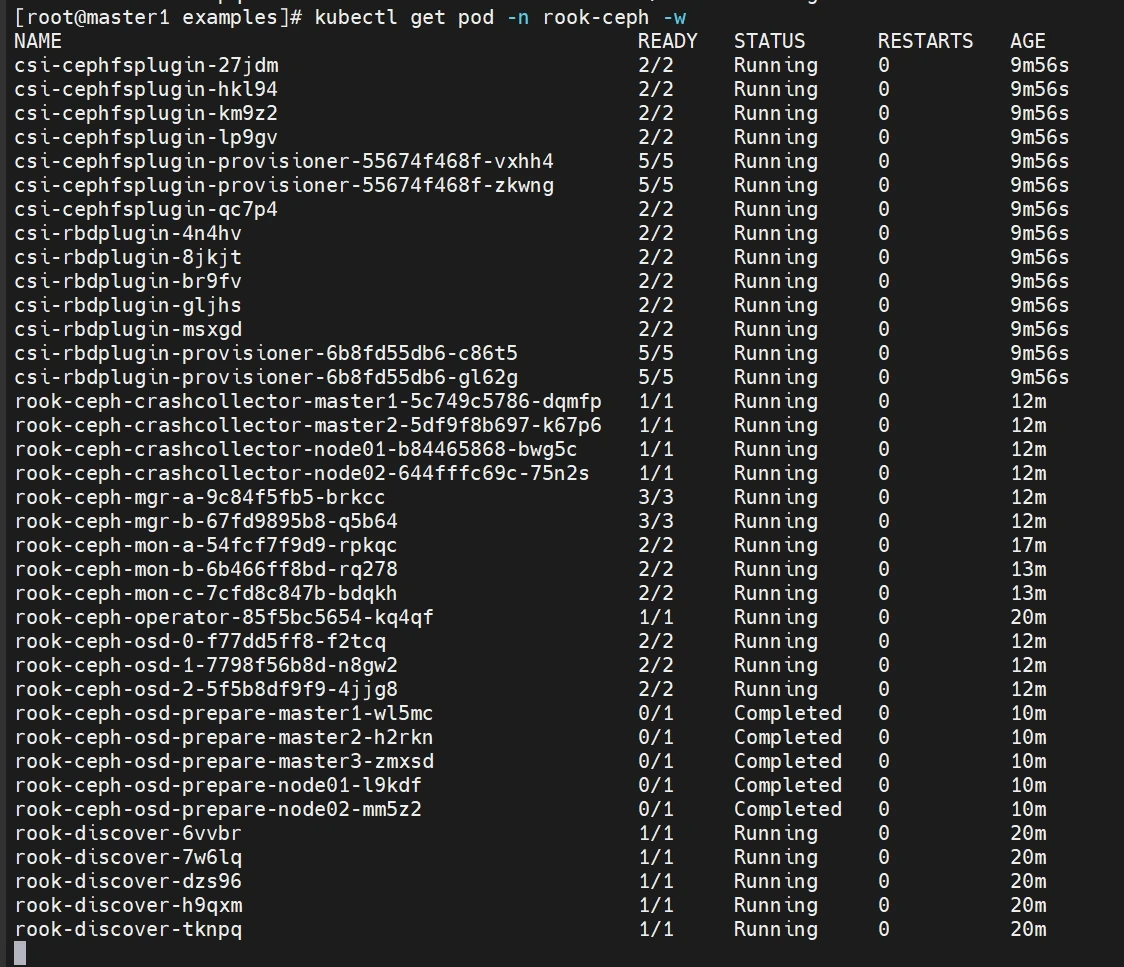

Check the status
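The deployment is finished when every pod in the rook-ceph namespace is Running with all containers ready. The exception is the rook-ceph-osd-prepare-* pods, which end as Completed. The sketch below scans the default table printed by `kubectl -n rook-ceph get pod` for stragglers. It parses human-readable output, so treat it as a convenience rather than a stable interface.

```python
def unhealthy_pods(get_pod_output: str) -> list[str]:
    """Names of pods that are not healthy in `kubectl -n rook-ceph get pod` output.

    A pod counts as healthy if its STATUS is Completed (e.g. rook-ceph-osd-prepare-*)
    or Running with every container ready (READY n/n).
    """
    lines = [line for line in get_pod_output.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    ready_idx, status_idx = header.index("READY"), header.index("STATUS")
    bad = []
    for line in lines[1:]:
        cols = line.split()
        status = cols[status_idx]
        if status == "Completed":
            continue
        ready, total = cols[ready_idx].split("/")
        if status != "Running" or ready != total:
            bad.append(cols[0])
    return bad
```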

Install the Ceph client tools
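The Ceph CLI is usually provided by Rook's toolbox: deploy/examples/toolbox.yaml creates the rook-ceph-tools Deployment. Once it has been applied (for example with `kubectl create -f toolbox.yaml`), ceph commands can run inside it non-interactively. Below is a sketch of a thin wrapper; the function names are hypothetical.

```python
import subprocess

NAMESPACE = "rook-ceph"
TOOLBOX = "deploy/rook-ceph-tools"  # Deployment created by deploy/examples/toolbox.yaml


def toolbox_cmd(*ceph_args: str) -> list[str]:
    """kubectl argv that runs `ceph <args>` inside the toolbox pod."""
    return ["kubectl", "-n", NAMESPACE, "exec", TOOLBOX, "--", "ceph", *ceph_args]


def ceph(*ceph_args: str) -> str:
    """Run a ceph command through the toolbox and return its stdout."""
    result = subprocess.run(
        toolbox_cmd(*ceph_args), check=True, capture_output=True, text=True
    )
    return result.stdout
```

Typical checks are `ceph("status")` and `ceph("osd", "tree")`.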

Expose the dashboard
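Rook ships deploy/examples/dashboard-external-https.yaml for this step. The dict below is a sketch modeled on that Service; compare its field values, especially the selector labels, against your copy. It exposes the mgr dashboard's HTTPS port 8443, which is the port used when the dashboard `ssl` option in cluster.yaml is left at `true`, as a NodePort.

```python
from typing import Optional


def dashboard_nodeport_service(node_port: Optional[int] = None) -> dict:
    """NodePort Service in front of the active mgr's HTTPS dashboard (port 8443)."""
    port = {"name": "dashboard", "port": 8443, "protocol": "TCP", "targetPort": 8443}
    if node_port is not None:
        # Default Kubernetes NodePort range; omit node_port to let Kubernetes pick one
        if not 30000 <= node_port <= 32767:
            raise ValueError("nodePort must be within 30000-32767")
        port["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": "rook-ceph-mgr-dashboard-external-https",
            "namespace": "rook-ceph",
        },
        "spec": {
            "type": "NodePort",
            "ports": [port],
            # Selector labels assumed from Rook's example file; verify them in your version
            "selector": {"app": "rook-ceph-mgr", "mgr_role": "active", "rook_cluster": "rook-ceph"},
        },
    }
```

After applying it, `kubectl -n rook-ceph get svc` shows the assigned port. The dashboard is then reachable at https://<node-ip>:<nodePort>.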

Get the dashboard password
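Rook stores the dashboard admin password base64-encoded under the `password` key of the rook-ceph-dashboard-password Secret; the login user is admin. The sketch below decodes it from the JSON printed by `kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o json`. The function name is hypothetical.

```python
import base64
import json


def dashboard_password(secret_json: str) -> str:
    """Decode the admin password from `kubectl get secret ... -o json` output."""
    secret = json.loads(secret_json)
    # Secret values under .data are always base64-encoded
    return base64.b64decode(secret["data"]["password"]).decode("utf-8")
```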

Access the dashboard

If the following warnings appear, fix them as described below; otherwise skip this part.

Clear the HEALTH_WARN warnings

- View the warning details

- AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED: mons are allowing insecure global_id reclaim

- MON_DISK_LOW: mons a,b,c are low on available space

Official solution: https://docs.ceph.com/en/latest/rados/operations/health-checks/

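- AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED: once all clients are up to date, disallow insecure reclaim with ceph config set mon auth_allow_insecure_global_id_reclaim false.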

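- MON_DISK_LOW: usage on the root partition is too high; free up space there. The mon data lives under Rook's dataDirHostPath (/var/lib/rook by default), which is usually on the root partition.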

Using Ceph storage

The three storage types

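| Storage type | Characteristics | Use cases | Typical devices |
|---|---|---|---|
| Block storage (RBD) | Fast; no shared access [ReadWriteOnce] | Virtual machine disks | Hard disks, RAID |
| File storage (CephFS) | Slower (requests pass through the operating system and are then converted to block storage); supports shared access [ReadWriteMany] | File sharing | FTP, NFS |
| Object storage (Object) | Combines the read/write performance of block storage with the sharing of file storage; the OS cannot access it directly, only through application-level APIs | Image and video storage | OSS |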

Block storage

Create the CephBlockPool and StorageClass

- File path: /root/rook/deploy/examples/csi/rbd/storageclass.yaml (the CephBlockPool and the StorageClass are both defined in this file)

- Brief walkthrough of the configuration file:
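In Rook's example file, the CephBlockPool `replicapool` uses `failureDomain: host` with `replicated.size: 3`. The StorageClass `rook-ceph-block` points at that pool through the `rook-ceph.rbd.csi.ceph.com` provisioner with `reclaimPolicy: Delete`; check these values against your copy. Two consequences are easy to check by hand. The sketch below uses hypothetical helper names, and its capacity figure is only approximate.

```python
def check_replicated_pool(replica_size: int, failure_domains: int) -> None:
    """With failureDomain: host, every replica must sit on a different host."""
    if replica_size > failure_domains:
        raise ValueError(
            f"size {replica_size} needs at least {replica_size} OSD hosts, "
            f"found {failure_domains}"
        )


def usable_capacity_gb(osd_sizes_gb: list[float], replica_size: int) -> float:
    """Rough usable capacity: raw capacity divided by the replica count.

    Ignores Ceph's nearfull/full ratios and metadata overhead, so the real figure is lower.
    """
    return sum(osd_sizes_gb) / replica_size
```

For this lab, three 100G OSDs on three hosts with size 3 give roughly 100G of usable space. Three hosts is also the minimum for that replica count.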

Apply the file to create the CephBlockPool and StorageClass

Check

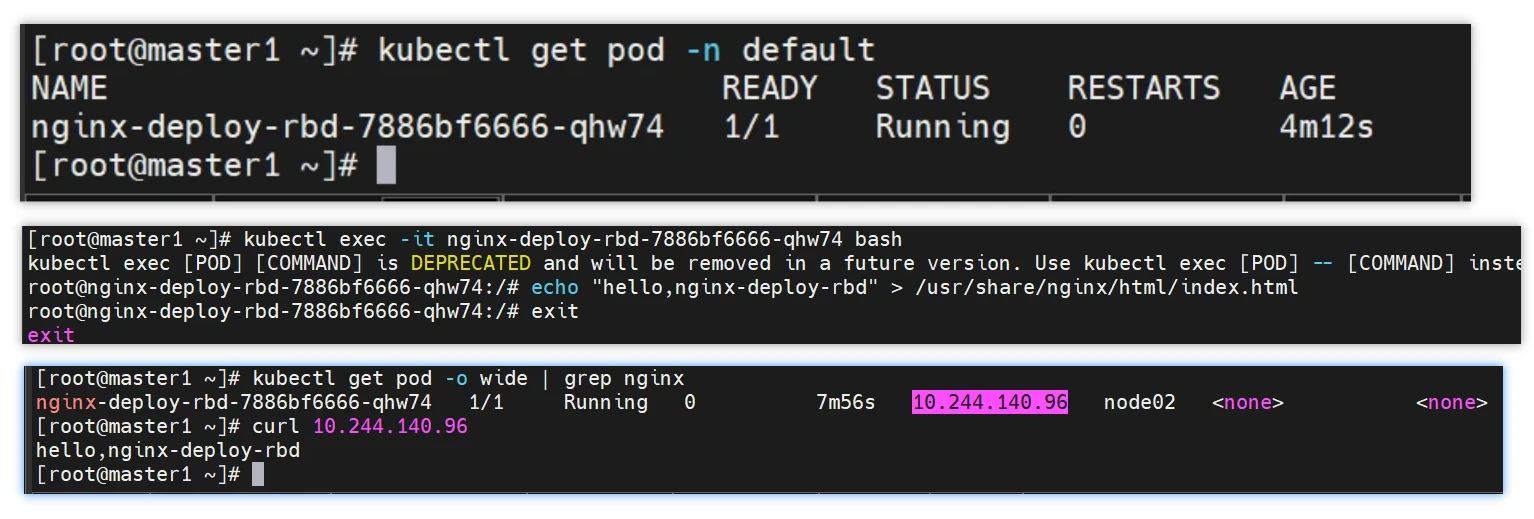

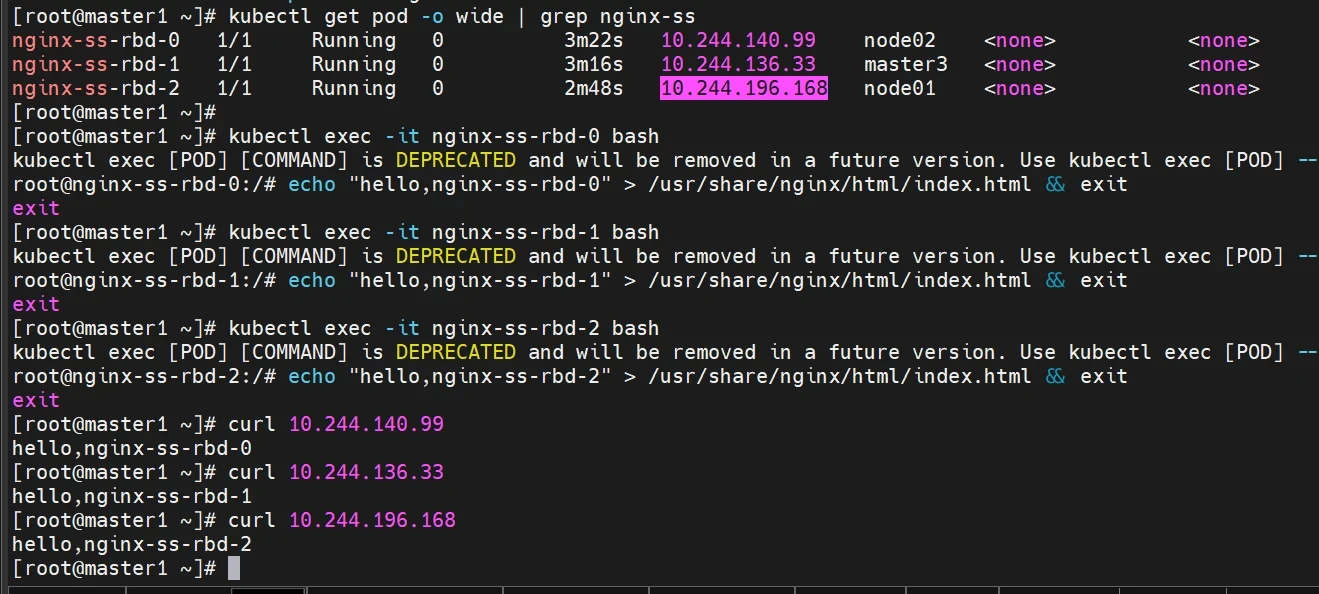

Block storage usage examples

- Deployment with a single replica + PersistentVolumeClaim

- StatefulSet with multiple replicas + volumeClaimTemplates
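Both patterns reduce to a claim against the `rook-ceph-block` StorageClass. A Deployment references one shared PVC. A StatefulSet lists claim templates, and the controller stamps out one PVC per replica. The sketch below builds both as plain dicts; the names passed in are hypothetical. Serialize the result with `json.dumps` before feeding it to `kubectl apply -f -`.

```python
def pvc(name: str, size: str, storage_class: str = "rook-ceph-block",
        access_mode: str = "ReadWriteOnce") -> dict:
    """A PersistentVolumeClaim; RBD block volumes are normally ReadWriteOnce."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def claim_template(name: str, size: str, storage_class: str = "rook-ceph-block") -> dict:
    """Entry for a StatefulSet's spec.volumeClaimTemplates (no apiVersion/kind)."""
    claim = pvc(name, size, storage_class)
    return {"metadata": claim["metadata"], "spec": claim["spec"]}


def claim_names(template: str, statefulset: str, replicas: int) -> list[str]:
    """PVC names the StatefulSet controller creates: <template>-<statefulset>-<ordinal>."""
    return [f"{template}-{statefulset}-{i}" for i in range(replicas)]
```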

Deploy

Shared file storage

Deploy the MDS service

Before you can create a CephFS file system, you must deploy the MDS service, which manages the file system's metadata.

- File path: /root/rook/deploy/examples/filesystem.yaml

Configuration file walkthrough
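Rook's filesystem.yaml defines a CephFilesystem named `myfs`. It has a replicated metadata pool, one data pool named `replicated`, and `metadataServer.activeCount: 1` with `activeStandby: true`. According to Rook's documentation, a named data pool becomes the Ceph pool `<fsName>-<poolName>`. Rook also starts twice `activeCount` MDS daemons; the extra ones are standbys, and `activeStandby` keeps them warm. The sketch below derives the pool names you should see in `ceph osd lspools` and the expected MDS pod count. The helper names are hypothetical.

```python
from typing import Optional


def cephfs_pools(fs_name: str, data_pools: list[Optional[str]]) -> list[str]:
    """Ceph pool names Rook creates for a CephFilesystem.

    Metadata pool: <fs>-metadata. Data pools: <fs>-<name> when the pool has a
    name, otherwise <fs>-data<index>.
    """
    names = [f"{fs_name}-metadata"]
    for i, pool in enumerate(data_pools):
        names.append(f"{fs_name}-{pool}" if pool else f"{fs_name}-data{i}")
    return names


def mds_pod_count(active_count: int) -> int:
    """Rook starts two MDS daemons per active MDS; the extra ones are standbys."""
    return 2 * active_count
```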

Configure storage (StorageClass)

Configuration file: /root/rook/deploy/examples/csi/cephfs/storageclass.yaml
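The main thing to get right in this file is that `fsName` and `pool` match the CephFilesystem created above: `myfs` and `myfs-replicated` in the default example. The sketch below builds a StorageClass equivalent to Rook's example. It assumes the operator and the cluster share the rook-ceph namespace, as in this lab. The secret names follow Rook's example file; verify them in your copy.

```python
def cephfs_storage_class(fs_name: str = "myfs", data_pool: str = "replicated",
                         namespace: str = "rook-ceph") -> dict:
    """StorageClass rook-cephfs; assumes operator and cluster share `namespace`."""
    params = {
        "clusterID": namespace,            # namespace of the CephCluster
        "fsName": fs_name,                 # must match the CephFilesystem name
        "pool": f"{fs_name}-{data_pool}",  # Ceph pool backing the volumes
    }
    # CSI secrets created by Rook (names assumed from the example file)
    for stage, secret in (("provisioner", "rook-csi-cephfs-provisioner"),
                          ("controller-expand", "rook-csi-cephfs-provisioner"),
                          ("node-stage", "rook-csi-cephfs-node")):
        params[f"csi.storage.k8s.io/{stage}-secret-name"] = secret
        params[f"csi.storage.k8s.io/{stage}-secret-namespace"] = namespace
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": "rook-cephfs"},
        # The driver name is prefixed with the operator namespace
        "provisioner": f"{namespace}.cephfs.csi.ceph.com",
        "parameters": params,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }
```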

Shared file storage usage example

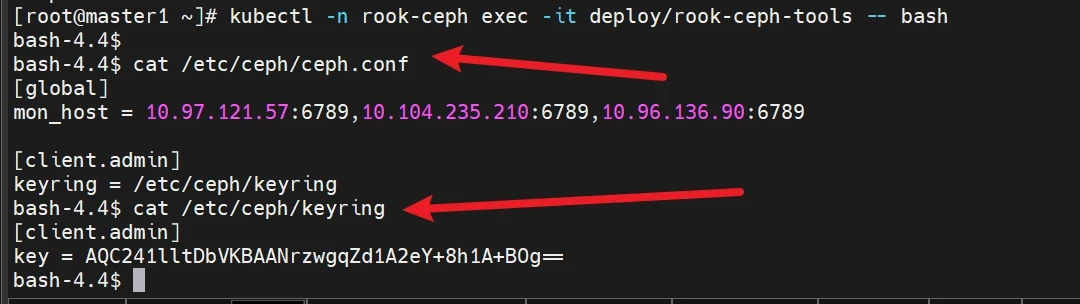
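What makes CephFS "shared" in practice is a single ReadWriteMany PVC mounted by several replicas, which may be scheduled on different nodes. The sketch below shows that shape. The workload name, image, and mount path are hypothetical placeholders.

```python
def shared_pvc(name: str, size: str, storage_class: str = "rook-cephfs") -> dict:
    """ReadWriteMany claim: CephFS volumes can be mounted by pods on several nodes."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def deployment_sharing_claim(name: str, image: str, replicas: int,
                             claim: str, mount_path: str) -> dict:
    """Deployment whose replicas all mount the same PVC at mount_path."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "volumeMounts": [{"name": "shared", "mountPath": mount_path}],
                    }],
                    "volumes": [{"name": "shared",
                                 "persistentVolumeClaim": {"claimName": claim}}],
                },
            },
        },
    }
```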

Running ceph commands directly on the K8S nodes

Sync the Ceph authentication files

Once the files are in place, you can run ceph commands directly.
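One way to do this assumes the toolbox is running and the host has a Quincy-compatible ceph-common package installed. Copy the ceph.conf and keyring that the toolbox generates under /etc/ceph into the host's /etc/ceph. The file locations inside the toolbox are assumed from Rook's toolbox script, so verify them in your version. The function names are hypothetical.

```python
import subprocess
from pathlib import Path

TOOLBOX_EXEC = ["kubectl", "-n", "rook-ceph", "exec", "deploy/rook-ceph-tools", "--"]
# Files the Rook toolbox writes at startup (assumed locations)
FILES = ("/etc/ceph/ceph.conf", "/etc/ceph/keyring")


def cat_cmd(path: str) -> list[str]:
    """kubectl argv that prints `path` from inside the toolbox pod."""
    return [*TOOLBOX_EXEC, "cat", path]


def sync_ceph_config(dest_dir: str = "/etc/ceph") -> list[Path]:
    """Copy ceph.conf and keyring from the toolbox into dest_dir on this host."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    written = []
    for src in FILES:
        out = subprocess.run(cat_cmd(src), check=True, capture_output=True, text=True).stdout
        target = dest / Path(src).name
        target.write_text(out)
        if target.name == "keyring":
            target.chmod(0o600)  # the keyring contains the admin secret
        written.append(target)
    return written
```

After `sync_ceph_config()` runs as root, `ceph -s` on the host should report the same status as in the toolbox.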

Delete the PVCs, StorageClasses, and the corresponding storage resources
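Order matters when tearing things down. First remove the workloads that use the volumes; the PVC protection finalizer keeps a PVC alive while pods still mount it. Then delete the PVCs, which with `reclaimPolicy: Delete` lets the CSI driver remove the backing RBD image or CephFS subvolume. After that, delete the StorageClasses, and finally the Ceph-side pool or filesystem resources. The sketch below only produces the kubectl commands in that order. The resource names are whatever you created. Note that `preserveFilesystemOnDelete` in filesystem.yaml controls whether the Ceph file system survives deletion of the CephFilesystem resource.

```python
def cleanup_commands(pvcs: list[str], storage_classes: list[str],
                     block_pools: tuple[str, ...] = (), filesystems: tuple[str, ...] = (),
                     app_namespace: str = "default",
                     rook_namespace: str = "rook-ceph") -> list[list[str]]:
    """kubectl commands that delete PVCs, then StorageClasses, then Ceph resources."""
    cmds = []
    # 1. PVCs (delete the pods/Deployments/StatefulSets using them beforehand)
    for claim in pvcs:
        cmds.append(["kubectl", "-n", app_namespace, "delete", "pvc", claim])
    # 2. StorageClasses are cluster-scoped, so no namespace flag
    for sc in storage_classes:
        cmds.append(["kubectl", "delete", "storageclass", sc])
    # 3. Ceph-side resources managed by the Rook operator
    for pool in block_pools:
        cmds.append(["kubectl", "-n", rook_namespace, "delete", "cephblockpool", pool])
    for fs in filesystems:
        cmds.append(["kubectl", "-n", rook_namespace, "delete", "cephfilesystem", fs])
    return cmds
```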

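Disclaimer

All articles on Qianyi Blog are notes I have carefully compiled from classroom learning and self-study.

Mistakes are inevitable.

If you spot an error, please let me know in the comments below or send me a private message!

Thank you all for your warm support!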