Series 1: Deploying Kubernetes (1.24-1.30) on CentOS 8.6

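Daily Zen

"Lotus-like blossoms on the branch tips, red calyxes opening in the mountains. The hut by the stream is silent and empty; one after another they bloom and fall." This is "Xinyi Wu" (Magnolia Hollow), a poem by Wang Wei. It describes magnolia blossoms opening and falling on their own in a secluded valley, plainly, with neither the joy of birth nor the sorrow of death. Insentient yet possessed of a nature, the magnolia comes from the natural world and returns to it. It needs no praise, and no tears of sympathy when it withers; it carries the beauty of its life to the fullest. In Buddhist eyes all beings are equal, with no high or low; each individual is free and self-sufficient, its own nature complete as it is. The Sutra on the Divination of the Effect of Good and Evil Actions (占察善恶业报经) says: "The Dharma body of the Tathagata is not empty in its own nature; it has true substance and is endowed with immeasurable pure merit. From beginningless time it has been naturally complete, neither cultivated nor made; it is likewise fully present in the bodies of all sentient beings, unchanging and undiffering, neither increasing nor decreasing." A person who can perceive this innate endowment, which neither increases nor decreases, can find richness and completeness in the world. We often feel that distant scenery is veiled in mist or dust, and that the closer we walk the hazier it becomes; that the thoughts in our mind are hemmed in by range upon range of mountains, and that the more anxious we are to escape the maze the less we can tell the direction. This is because we cling too much to thinking and neglect our own nature.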

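Why I wrote this

Deployment guides for k8s on the web are numerous and inconsistent, and k8s is harder to get started with than Docker. When I was learning k8s and trying to stand up a complete cluster, I went through all kinds of online documents and still could not get it to work. The deployment step alone scares many people off, and they end up abandoning k8s altogether. I wrote this article so that more k8s learners can study together and get a cluster deployed more smoothly.

Note: any step that does not name a specific node must be run on all nodes.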

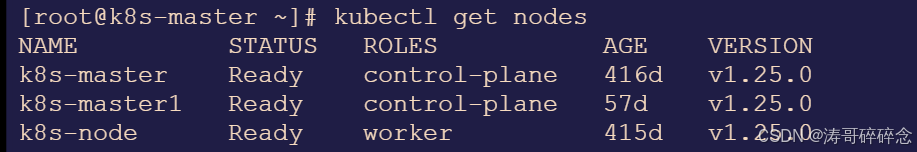

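Deployment layout: two masters, one worker

Most tutorials out there deploy one master node and two worker nodes, and few cover a highly available layout. I also started with one master and two workers, but in real use the single master turned out to be unstable and often took the whole cluster down. This article therefore deploys two masters and one worker.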

| Server | Node name | k8s node role |
| --- | --- | --- |
| 192.168.11.85 | k8s-master | control-plane |
| 192.168.11.86 | k8s-master1 | control-plane |
| 192.168.11.87 | k8s-node | worker |

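1. Initialize the machines

1.1 Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

1.2 Set the hostname

- Control-plane (master) node; run the same command with the matching name on the other control-plane nodes

# hostnamectl set-hostname k8s-master

- Worker nodes: set the hostname the same way, using the name that matches each node

# hostnamectl set-hostname k8s-node

# hostnamectl set-hostname k8s-node1

# hostnamectl set-hostname k8s-node2

1.3 Disable swap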

vim /etc/fstab    // comment out the swap mount by adding # at the start of the swap line

# /dev/mapper/centos-swap swap swap defaults 0 0
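The manual fstab edit above could also be scripted. The following is a minimal sketch, not part of the original procedure: `comment_out_swap` is a hypothetical helper name, and it assumes an fstab-style file in which swap entries have `swap` as a whitespace-separated field. It keeps a `.bak` copy next to the file.

```shell
#!/usr/bin/env bash
# Sketch only: comment_out_swap is a hypothetical helper, not a system tool.
comment_out_swap() {
  local fstab="$1"
  # For every line that is not already a comment and that has "swap"
  # as a whitespace-delimited field, prefix the line with '#'.
  # The original file is preserved as <file>.bak.
  sed -i.bak -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}

# Intended use on a node (as root), assuming the default fstab location:
#   swapoff -a                     # turn swap off for the running system
#   comment_out_swap /etc/fstab    # keep it off after the next boot
```

Running the helper twice is harmless, because lines that already start with `#` are skipped.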

# Reboot the server for the change to take effect

reboot now

1.4 Set kernel parameters

# modprobe br_netfilter

# echo "modprobe br_netfilter" >> /etc/profile

# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# sysctl -p /etc/sysctl.d/k8s.conf

The module is no longer loaded after a reboot. To load it automatically at boot, create /etc/rc.sysinit with the following script:

# vim /etc/rc.sysinit

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules; do

[ -x "$file" ] && "$file"

done

# vim /etc/sysconfig/modules/br_netfilter.modules    // create this file under /etc/sysconfig/modules/

modprobe br_netfilter

# chmod 755 /etc/sysconfig/modules/br_netfilter.modules    // make it executable

1.5 Disable the firewall

# systemctl stop firewalld; systemctl disable firewalld

1.6 Configure the yum repos

Back up the base repo files, then switch to the Aliyun mirror

# mkdir /root/repo.bak

# cd /etc/yum.repos.d/

# mv * /root/repo.bak/

# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

# yum makecache

# yum -y install yum-utils

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.7 Install base packages

# yum -y install yum-utils openssh-clients device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

2. Install containerd

2.1 Install containerd

# yum install containerd.io-1.6.6 -y

2.2 Configure containerd

# mkdir -p /etc/containerd

# containerd config default > /etc/containerd/config.toml    // generate the default containerd config file

# vim /etc/containerd/config.toml    // edit the config file

Change SystemdCgroup = false to SystemdCgroup = true

Change sandbox_image = "k8s.gcr.io/pause:3.6" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"

2.3 Enable containerd at boot and start it
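Before starting the service, the two config.toml edits described in 2.2 could be applied without opening an editor. This is a rough sketch under assumptions: `patch_containerd_config` is a hypothetical helper name, and it assumes the file was generated by `containerd config default` so that both keys appear in the form shown in 2.2.

```shell
#!/usr/bin/env bash
# Sketch only: patch_containerd_config is a hypothetical helper name.
patch_containerd_config() {
  local cfg="$1"
  # Second argument is optional; the default is the image used in this article.
  local pause_image="${2:-registry.aliyuncs.com/google_containers/pause:3.7}"
  # 1) switch runc to the systemd cgroup driver
  # 2) point the sandbox (pause) image at the given repository
  # The original file is preserved as <file>.bak.
  sed -i.bak \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e "s#sandbox_image = \".*\"#sandbox_image = \"${pause_image}\"#" \
    "$cfg"
}

# Intended use (as root):
#   patch_containerd_config /etc/containerd/config.toml
```

It is still worth opening the file afterwards to confirm that both lines changed.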

# systemctl enable containerd --now

2.3 Edit /etc/crictl.yaml

# cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# systemctl restart containerd

2.4 Configure containerd registry mirrors

# vim /etc/containerd/config.toml

Change config_path = "" to config_path = "/etc/containerd/certs.d"

# mkdir /etc/containerd/certs.d/docker.io/ -p

# vim /etc/containerd/certs.d/docker.io/hosts.toml

[host."https://vh3bm52y.mirror.aliyuncs.com"]

capabilities = ["pull"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull"]

# systemctl restart containerd

3. Install Docker

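3.1 Install Docker

Note: Docker is installed as well. It does not conflict with containerd, and it is needed to build images from a Dockerfile.

# yum install docker-ce -y

# systemctl enable docker --now

3.2 Configure Docker registry mirrors

# vim /etc/docker/daemon.json    // configure Docker registry mirrors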

{

"registry-mirrors":["https://vh3bm52y.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"]

}

# systemctl daemon-reload

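# systemctl restart docker

4. Install the Kubernetes components

4.1 Configure the repo for the Kubernetes packages (each Kubernetes version needs its own repo)

- Version 1.25 (Aliyun mirror)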

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

For the repo addresses of other versions, see the official "Installing kubeadm | Kubernetes" page.

- Version 1.30

# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

- Version 1.29

# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

- Other versions follow the same pattern.
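Since the 1.29 and 1.30 repo files differ only in the version segment of the URL, the file can be generated from a single parameter. The following is a minimal sketch; `render_k8s_repo` is a hypothetical helper name, and it assumes the pkgs.k8s.io URL pattern shown above also holds for the minor version you pass in.

```shell
#!/usr/bin/env bash
# Sketch only: render_k8s_repo is a hypothetical helper; it just prints text.
render_k8s_repo() {
  local minor="$1"   # Kubernetes minor version, e.g. 1.29 or 1.30
  cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v${minor}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}

# Intended use (as root); this overwrites the existing repo file:
#   render_k8s_repo 1.30 > /etc/yum.repos.d/kubernetes.repo
```

Printing to standard output first makes it easy to review the result before writing it to /etc/yum.repos.d/.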

4.2 Install the Kubernetes bootstrap tools

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

4.3 Set the container runtime endpoint

# crictl config runtime-endpoint unix:///run/containerd/containerd.sock

4.4 Initialize the cluster with kubeadm (run on the control-plane node)

# vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3

...

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.11.85 # IP of the control-plane node

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///run/containerd/containerd.sock # endpoint of the containerd runtime

  imagePullPolicy: IfNotPresent

  name: k8s-master # hostname of the control-plane node

  taints: null

---

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers # pull images from the Aliyun registry

kind: ClusterConfiguration

kubernetesVersion: 1.30.0 # Kubernetes version

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16 # Pod network CIDR

  serviceSubnet: 10.96.0.0/12 # Service network CIDR

scheduler: {}

# Append the following at the end of the file (include the --- lines when copying)

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

4.4 Edit /etc/sysconfig/kubelet

# vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

4.5 Initialize the cluster from kubeadm.yaml (run on the control-plane node)

kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

4.6 Set up the kubectl config file (run on the control-plane node). Copying admin.conf to $HOME/.kube/config gives kubectl the credentials it needs to manage the cluster.

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes

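5. Install the Kubernetes network plugin: Calico (run on the control-plane node)

Upload calico.yaml to the control-plane node (k8s-master) and install the Calico network plugin from it. It takes a few minutes for everything to become Ready.

kubectl apply -f calico.yaml

Note: the manifest can be downloaded from https://docs.projectcalico.org/manifests/calico.yaml

6. Once the control-plane node is up, add the worker node

Note: all of the earlier steps that do not name a specific node must be completed on the worker node before continuing with the steps below.

6.1 Confirm that all of the steps above have succeeded on the worker node, except those marked as control-plane only

On k8s-master, print the join command: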

[root@k8s-master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

Join k8s-node to the cluster:

[root@k8s-node ~]# kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

6.2 Optionally set the node's ROLES to worker, as follows

[root@k8s-master ~]# kubectl label node k8s-node node-role.kubernetes.io/worker=worker

6.3 Check the nodes

kubectl get nodes    // run on the master to view the cluster node status

7. Add the k8s-master1 control-plane node

Note: all of the earlier steps that do not name a specific node must be completed on k8s-master1 before continuing with the steps below.

7.1 On the current (only) master node, run the following command to get the certificate key

# kubeadm init phase upload-certs --upload-certs

I1109 14:34:00.836965 5988 version.go:255] remote version is much newer: v1.25.3; falling back to: stable-1.22

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ecf2abbfdf3a7bc45ddb2de75152ec12889971098d69939b98e4451b53aa3033

7.2 On the current (only) master node, run the following command to get the token

On k8s-master, print the join command:

[root@k8s-master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

7.3 Combine the key and the token into one command

kubeadm join 192.168.11.85:6443 --token xxxxxxxxx --discovery-token-ca-cert-hash xxxxxxx --control-plane --certificate-key xxxxxxx

Notes:

- Do not use --experimental-control-plane; it causes an error.

- Be sure to add --control-plane --certificate-key; otherwise the node joins as a worker rather than a master.

- The node being joined must not already have a cluster deployed on it; if it does, run kubeadm reset there and then join.
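The combination in 7.3 is plain string concatenation, so it can be sketched as a small function. `build_control_plane_join` is a hypothetical helper name; it only assembles text and does not call kubeadm itself.

```shell
#!/usr/bin/env bash
# Sketch only: build_control_plane_join is a hypothetical helper name.
build_control_plane_join() {
  local join_cmd="$1"   # output of: kubeadm token create --print-join-command
  local cert_key="$2"   # key printed by: kubeadm init phase upload-certs --upload-certs
  # Strip trailing whitespace from the join command before appending flags.
  join_cmd="${join_cmd%"${join_cmd##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Intended use on k8s-master, assuming the certificate key is the last
# line of the upload-certs output, as in the sample output in 7.1:
#   join_cmd=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   build_control_plane_join "$join_cmd" "$cert_key"
```

The printed line is what gets run on k8s-master1 in the next step.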

7.4 Run the join command assembled in 7.3 on k8s-master1. On success it prints the following:

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

7.5 Troubleshooting

7.5.1 The first attempt to join the cluster fails with the following error:

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

error execution phase preflight:

One or more conditions for hosting a new control plane instance is not satisfied.

unable to add a new control plane instance a cluster that doesn't have a stable controlPlaneEndpoint address

Please ensure that:

* The cluster has a stable controlPlaneEndpoint address.

* The certificates that must be shared among control plane instances are provided.

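To see the stack trace of this error execute with --v=5 or higher

7.5.2 Solution

Inspect the kubeadm-config ConfigMap:

kubectl -n kube-system get cm kubeadm-config -oyaml

It has no controlPlaneEndpoint entry.

Add controlPlaneEndpoint. kubeadm expects this to be a stable address for the control plane (in multi-master clusters this is usually a load balancer or virtual IP); this article sets it to the IP of the node being added.

kubectl -n kube-system edit cm kubeadm-config

It goes roughly here: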

kind: ClusterConfiguration

kubernetesVersion: v1.25.3

controlPlaneEndpoint: 192.168.11.86 # IP address of the node being added as a master
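To confirm whether the field is present without reading the whole ConfigMap, the YAML can be filtered on standard input. This is a minimal sketch: `get_control_plane_endpoint` is a hypothetical helper name, and it relies on simple line matching rather than a real YAML parser, so it assumes the key sits on a single line as shown above.

```shell
#!/usr/bin/env bash
# Sketch only: get_control_plane_endpoint is a hypothetical helper name.
# Reads YAML text on stdin and prints the first controlPlaneEndpoint value,
# or nothing if the key is absent.
get_control_plane_endpoint() {
  sed -n -E 's/^[[:space:]]*controlPlaneEndpoint:[[:space:]]*"?([^"#[:space:]]+)"?.*$/\1/p' \
    | head -n 1
}

# Intended use on k8s-master:
#   kubectl -n kube-system get cm kubeadm-config -o yaml | get_control_plane_endpoint
# Empty output means the field still has to be added.
```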

Then run the kubeadm join command again on the node being added as a master (k8s-master1).

7.6 After a successful join, the cluster shows 2 master nodes and 1 worker node
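The expected node counts can be checked from the `kubectl get nodes` listing. The sketch below is an illustration, not part of the original procedure: `count_nodes_by_role` is a hypothetical helper name, and it assumes the default column layout in which the third column is ROLES.

```shell
#!/usr/bin/env bash
# Sketch only: count_nodes_by_role is a hypothetical helper name.
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes carry the given role in the ROLES column (assumed to be column 3).
count_nodes_by_role() {
  local role="$1"
  awk -v role="$role" '
    {
      # ROLES may hold several comma-separated roles.
      n = split($3, roles, ",")
      for (i = 1; i <= n; i++) if (roles[i] == role) { c++; break }
    }
    END { print c + 0 }
  '
}

# Intended use on a master node:
#   kubectl get nodes --no-headers | count_nodes_by_role control-plane
#   kubectl get nodes --no-headers | count_nodes_by_role worker
```

The worker count only reflects nodes that were labeled as in step 6.2; unlabeled workers show `<none>` in the ROLES column.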

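Afterword

Deploying k8s really is complicated, and differences in OS version or dependency versions can each cause problems. The steps here were written against my current operating system. If you run into odd issues during installation, leave a comment below and we can work through them together. The article may also contain mistakes; corrections are very welcome, and thank you in advance.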