【Kubernetes】kubectl top pod 异常?

目录

- 前言

- 一、表象

- 二、解决方法

- 1、导入镜像包

- 2、编辑yaml文件

- 3、解决问题

- 三、优化改造

- 1.修改配置文件

- 2.检查api-server服务是否正常

- 3.测试验证

- 总结

前言

各位老铁大家好,好久不见,卑微涛目前从事kubernetes相关容器工作,感兴趣的小伙伴相互交流一下鸭~

一、表象

使用kubeadm、二进制方式安装的K8S,想查看集群中,node节点/pod的CUP、内存等信息,无法查看

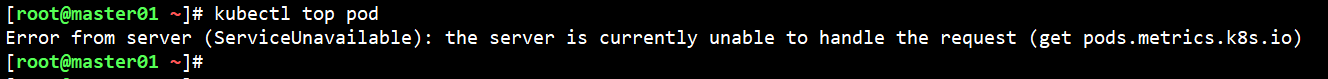

报错:Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)

原因是:缺少metrics-server这个pod

二、解决方法

下面咱们手把手解决这个问题

1、导入镜像包

导入下列两个镜像包

镜像包链接:【若链接失效请私聊卑微涛】

链接:https://pan.baidu.com/s/1qo6QTqF9xSEfeN9OS9BjdQ

提取码:gjx4

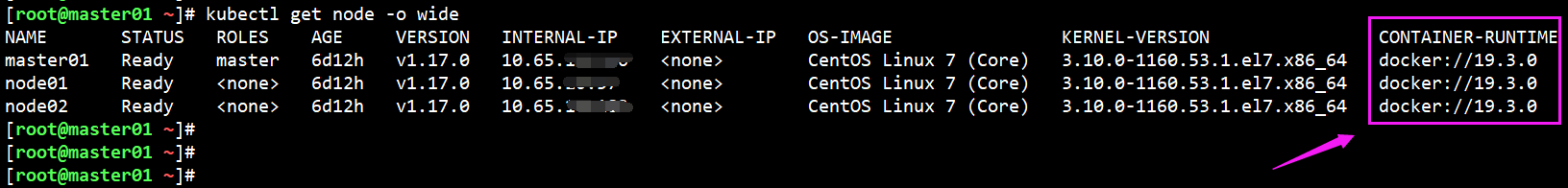

#如果k8s是 docker 作为 容器运行时

docker image load -i addon.tar.gz

docker image load -i metrics-server-amd64-0-3-6.tar.gz

#如果k8s是 container 作为 容器运行时

ctr -n=k8s.io images import addon.tar.gz

ctr -n=k8s.io images import metrics-server-amd64-0-3-6.tar.gz

#==================================================================

#查看k8s是哪个作为 容器运行时

kubectl get node -o wide

2、编辑yaml文件

注意修改对应的 images:xxx 镜像名称,其余配置无需修改

# cat metrics.yaml #这个yaml文件在镜像包对应的目录中有,大家下载也行

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: metrics-server:system:auth-delegator

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:auth-delegator

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: metrics-server-auth-reader

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: metrics-server

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: system:metrics-server

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups:

- ""

resources:

- pods

- nodes

- nodes/stats

- namespaces

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

resources:

- deployments

verbs:

- get

- list

- update

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:metrics-server

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:metrics-server

subjects:

- kind: ServiceAccount

name: metrics-server

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: metrics-server-config

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: EnsureExists

data:

NannyConfiguration: |-

apiVersion: nannyconfig/v1alpha1

kind: NannyConfiguration

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: metrics-server

namespace: kube-system

labels:

k8s-app: metrics-server

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

version: v0.3.6

spec:

selector:

matchLabels:

k8s-app: metrics-server

version: v0.3.6

template:

metadata:

name: metrics-server

labels:

k8s-app: metrics-server

version: v0.3.6

spec:

priorityClassName: system-cluster-critical

serviceAccountName: metrics-server

containers:

- name: metrics-server

image: k8s.gcr.io/metrics-server-amd64:v0.3.6 #这里的镜像名称,根据导入的镜像包修改

imagePullPolicy: IfNotPresent

command:

- /metrics-server

- --metric-resolution=30s

- --kubelet-preferred-address-types=InternalIP

- --kubelet-insecure-tls

ports:

- containerPort: 443

name: https

protocol: TCP

- name: metrics-server-nanny

image: k8s.gcr.io/addon-resizer:1.8.4 #这里的镜像名称,根据导入的镜像包修改

imagePullPolicy: IfNotPresent

resources:

limits:

cpu: 100m

memory: 300Mi

requests:

cpu: 5m

memory: 50Mi

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: metrics-server-config-volume

mountPath: /etc/config

command:

- /pod_nanny

- --config-dir=/etc/config

- --cpu=300m

- --extra-cpu=20m

- --memory=200Mi

- --extra-memory=10Mi

- --threshold=5

- --deployment=metrics-server

- --container=metrics-server

- --poll-period=300000

- --estimator=exponential

- --minClusterSize=2

volumes:

- name: metrics-server-config-volume

configMap:

name: metrics-server-config

nodeSelector:

galaxy-app: kce-monitor

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

apiVersion: v1

kind: Service

metadata:

name: metrics-server

namespace: kube-system

labels:

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "Metrics-server"

spec:

selector:

k8s-app: metrics-server

ports:

- port: 443

protocol: TCP

targetPort: https

---

apiVersion: apiregistration.k8s.io/v1

kind: APIService

metadata:

name: v1beta1.metrics.k8s.io

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

service:

name: metrics-server

namespace: kube-system

group: metrics.k8s.io

version: v1beta1

insecureSkipTLSVerify: true

groupPriorityMinimum: 100

versionPriority: 100

kubectl apply -f metrics.yaml #运行这个yaml文件

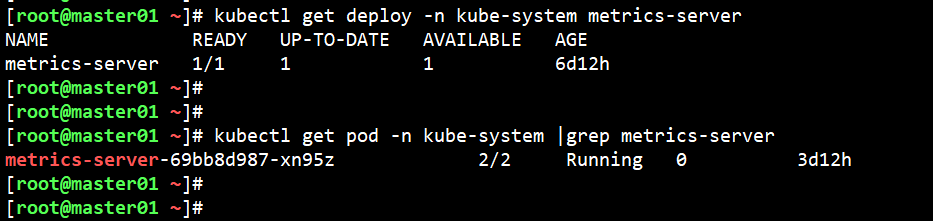

3、解决问题

查看对应的pod是否正常运行

kubectl get deploy -n kube-system metrics-server

kubectl get pod -n kube-system |grep metrics-server

等待30秒,即可正常使用了

三、优化改造

作为一名"老运维"工程师(好吧,就2年😉),优化+高可用是我们必须考虑的,有一次演练把这个pod删后,虽然重新拉起了,但kubectl top pod 又异常了,我们需要再做这么一个操作

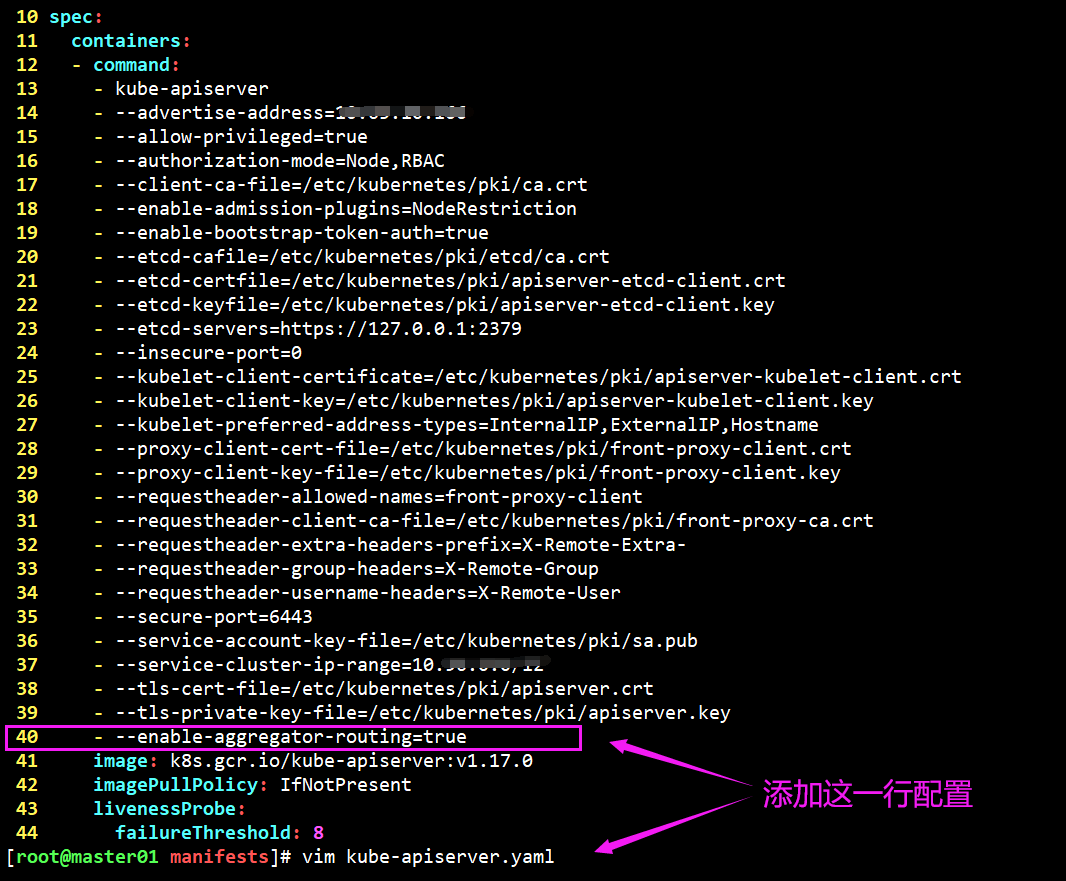

1.修改配置文件

# 修改每个 API Server 的 kube-apiserver.yaml 配置开启 Aggregator Routing:修改 manifests 配置后 API Server 会自动重启生效。

$ cat /etc/kubernetes/manifests/kube-apiserver.yaml

spec:

containers:

- command:

- --enable-aggregator-routing=true #增加这一行配置

注意:

注意:/etc/kubernetes/manifests这个目录下的文件由kubelet服务检测,是静态pod,修改完即可,不需要再kubectl apply -f 运行,会自动重新建立对应的pod

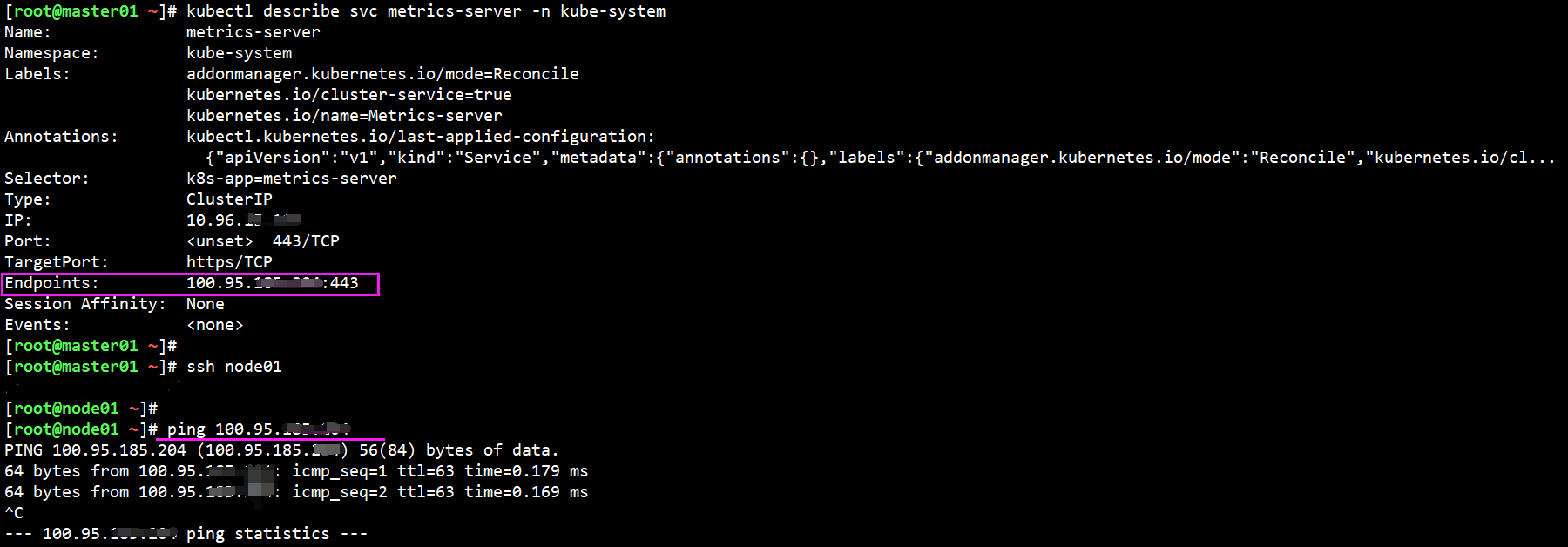

2.检查api-server服务是否正常

$ kubectl describe svc metrics-server -n kube-system

# 在其他几个节点ping一下Endpoints的地址

ping IP

telnet IP 443

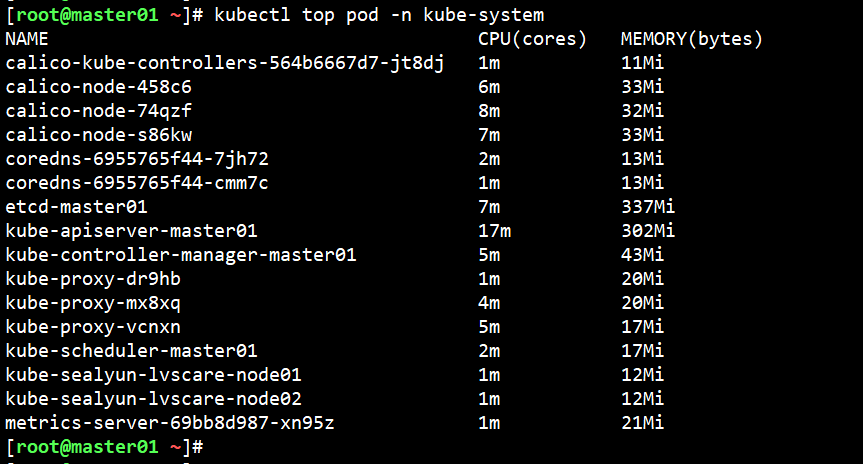

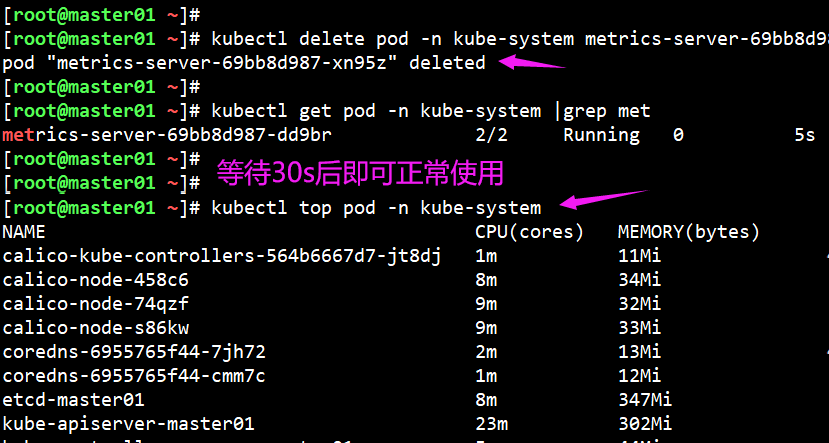

3.测试验证

我们再测试一下,把metrics-server对应的pod删除后,是否能正常使用 kubectl top 指令

大功告成!

总结

"失踪"的一年多时间里,经历了很多,从数据库 --> 大数据 --> 容器,每一次改变,逼着自己跳出舒适圈,

感谢这一路的挫折,让我变得更Strong!

后续不断输出 容器&Kubernetes 相关博客

我是卑微涛,咱们下一篇文章再见了🤞