Rockchip RV1126 Model Deployment (Complete Deployment Workflow)

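Table of Contents

- 1. Chip Overview

- 2. Deployment Workflow Overview

- 3. Development Environment Setup (RKNN-Toolkit)

- 3.1 Installation and Testing

- 3.2 Example Code Walkthrough

- 4. Development Environment Setup (RKNN-NPU)

- 4.1 Source Structure

- 4.2 Building the Source

- 4.3 Source Code Walkthrough

- 4.4 Running on the Board

- 5. Quantization Algorithms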

1. Chip Overview

- Environment overview

PC OS: Ubuntu 18.04 LTS, 64-bit

Chip and OS: RV1126, 32-bit Linux. The chip's basic specifications are shown in the figure below.

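- RK toolchain

Toolchain download: https://github.com/rockchip-linux/rknn-toolkit

RKNN-Toolkit version: V1.7.3

The figure below shows the README of the repository linked above. Each chip platform uses its own toolchain, and the RK1808/RK1806/RK3399Pro/RV1109/RV1126 series use RKNN-Toolkit: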

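2. Deployment Workflow Overview

The basic workflow is: 1. convert the model; 2. port the inference code to C++; 3. run it on the RV1126.

- On the PC, install the Python toolchain (rknn-toolkit) and convert the model (.onnx, .pt, .ckpt) to an .rknn model. This step verifies that your trained model can be exported, that its operators are supported, that it can be quantized, and that its predictions meet expectations.

- On the PC, set up the C toolchain (rknpu) and rewrite the Python project as a C++ inference project. Compile it into an executable with the 32-bit ARM cross-toolchain. Ubuntu's native g++ cannot be used because it produces x86_64 binaries.

- Copy the resulting RKNN model, the executable, and the required Rockchip shared libraries (.so) to the board, where they can be run directly.

- Evaluate quantization accuracy and performance on the board.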

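3. Development Environment Setup (RKNN-Toolkit)

Using the official documentation as a reference, this section walks through the core RKNN functions in the Python example code. The code is organized as follows: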

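3.1 Installation and Testing

- Install the base dependencies

```bash
cd ~/rknn-toolkit-master/
cd packages   # see the directory screenshot above
pip install -r requirements-cpu.txt
```

Either the CPU or the GPU requirements file works. If downloads are slow, try another mirror or install the packages one by one.

- Install RKNN-Toolkit

Download the package rknn-toolkit-v1.7.3-packages.zip, extract it to the root directory, and install it as follows. The cp36 tag means the wheel requires Python 3.6.

```bash
cd rknn-toolkit-v1.7.3-packages/packages
pip install rknn_toolkit-1.7.3-cp36-cp36m-linux_x86_64.whl
```

Then test the installation. If nothing is printed, the installation succeeded, as shown in the figure below.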
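The installation check can also be scripted. The sketch below assumes the package is importable as `rknn.api`, which is the import used by the example in Section 3.2. `is_importable` is a hypothetical helper and is not part of RKNN-Toolkit.

```python
import importlib.util


def is_importable(module_name: str) -> bool:
    """Return True if `module_name` can be located on the current Python path."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # find_spec raises this when a parent package (e.g. "rknn") is missing
        return False


if __name__ == "__main__":
    # rknn-toolkit installs the "rknn" package; the RKNN class lives in rknn.api
    if is_importable("rknn.api"):
        from rknn.api import RKNN  # noqa: F401  (a clean import prints nothing)
        print("rknn-toolkit is installed")
    else:
        print("rknn-toolkit not found; check that the wheel matches your Python (cp36)")
```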

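- Running the example in the PC simulator

Test example:

examples/onnx/yolov5/test.py

Model to convert: yolov5s.onnx

Converted model: yolov5s.rknn

Visualized result: the example runs successfully in the PC simulator and produces the expected output.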

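3.2 Example Code Walkthrough

- Core API functions

Create the RKNN object

```python
rknn = RKNN()
```

The constructor's parameters control whether log messages are printed during model conversion. These logs help with troubleshooting.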

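Configure RKNN parameters

```python
# Configure pre-processing parameters
rknn.config()
```

Before building an RKNN model, you must configure parameters such as channel means, channel order, and quantization type. All of these are set through the config interface, as follows: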
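The following pure-Python sketch shows the per-pixel effect of `mean_values`, `std_values` and `reorder_channel`. It assumes each channel is normalized as (x - mean) / std, and it applies the channel reorder first; that ordering is an assumption of this sketch. `normalize_pixel` is a hypothetical helper and not an RKNN-Toolkit function. With mean 0 and std 255, which are the values used in the YOLOv5 example below, the input is simply scaled into [0, 1].

```python
from typing import List, Sequence


def normalize_pixel(pixel: Sequence[float],
                    mean: Sequence[float],
                    std: Sequence[float],
                    reorder: str = "0 1 2") -> List[float]:
    """Reorder one pixel's channels, then apply (x - mean) / std per channel.

    `reorder` mimics the config() string format, e.g. "2 1 0" swaps BGR <-> RGB.
    """
    order = [int(i) for i in reorder.split()]
    reordered = [pixel[i] for i in order]
    return [(x - m) / s for x, m, s in zip(reordered, mean, std)]


# mean 0 / std 255 is a plain scale into [0, 1]
print(normalize_pixel([255, 0, 51], mean=[0, 0, 0], std=[255, 255, 255]))
# → [1.0, 0.0, 0.2]
print(normalize_pixel([255, 0, 51], mean=[0, 0, 0], std=[255, 255, 255], reorder="2 1 0"))
# → [0.2, 0.0, 1.0]
```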

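Load the model

```python
ret = rknn.load_onnx(model='model_path')
```

The loading function depends on the source framework. For an ONNX model, note the outputs parameter: it lets you take the output of specific nodes in the model instead of the default outputs. Details are shown in the figure below: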

Build the model

```python
ret = rknn.build()
```

Export the RKNN model

```python
ret = rknn.export_rknn(RKNN_MODEL)
```

This interface exports the RKNN model file that is used for deployment.

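Load an RKNN model

```python
ret = rknn.load_rknn(path='./yolov5s.rknn')
```

Initialize the runtime environment

```python
ret = rknn.init_runtime()
```

This call initializes the runtime environment and specifies the target platform. When no target is given, as in the example below, the model runs in the PC simulator. The relevant parameters are as follows: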

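Model inference

```python
outputs = rknn.inference(inputs=[img])
```

Before running inference, you must build or load an RKNN model and initialize the runtime.

Model performance evaluation

```python
rknn.eval_perf(inputs=[image], is_print=True)
```

Performance evaluation is an essential step when deploying to a board. Details are as follows: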

Memory usage

```python
memory_detail = rknn.eval_memory()
```
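`eval_perf` reports timing as measured by the toolkit. Sometimes you only need an end-to-end wall-clock number for an arbitrary inference callable, such as a lambda wrapping `rknn.inference`. For that case, a generic timing sketch like the one below can be used. It measures host-side latency only and does not reproduce `eval_perf`. The `benchmark` name and its warm-up and repeat defaults are hypothetical choices.

```python
import time
from typing import Callable, Dict


def benchmark(run_once: Callable[[], object],
              warmup: int = 3, repeat: int = 20) -> Dict[str, float]:
    """Call `run_once` repeatedly and return min/mean latency in milliseconds."""
    if repeat <= 0:
        raise ValueError("repeat must be positive")
    for _ in range(warmup):            # warm-up runs are excluded from the stats
        run_once()
    samples = []
    for _ in range(repeat):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"min_ms": min(samples), "mean_ms": sum(samples) / len(samples)}


if __name__ == "__main__":
    # Stand-in workload; with RKNN this could be: lambda: rknn.inference(inputs=[img])
    stats = benchmark(lambda: sum(range(10000)))
    print("min %.3f ms, mean %.3f ms" % (stats["min_ms"], stats["mean_ms"]))
```

Reporting the minimum alongside the mean helps separate the steady-state cost from occasional scheduling noise.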

- Example walkthrough

On the PC, use the configured Python environment to convert the ONNX model to an RKNN model:

```python
import os
import numpy as np
import cv2
from rknn.api import RKNN

ONNX_MODEL = 'yolov5s.onnx'
RKNN_MODEL = 'yolov5s.rknn'
IMG_PATH = './bus.jpg'
DATASET = './dataset.txt'

QUANTIZE_ON = True

BOX_THRESH = 0.5
NMS_THRESH = 0.6
IMG_SIZE = (640, 640)  # (width, height), such as (1280, 736)

CLASSES = ("person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat", "traffic light",
           "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant",
           "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard",
           "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle",
           "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli",
           "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed", "diningtable", "toilet",
           "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink",
           "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush")


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def xywh2xyxy(x):
    # Convert [x, y, w, h] to [x1, y1, x2, y2]
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y


def process(input, mask, anchors):
    # input: (grid_h, grid_w, 3, 5 + num_classes) for one output scale
    anchors = [anchors[i] for i in mask]
    grid_h, grid_w = map(int, input.shape[0:2])

    box_confidence = sigmoid(input[..., 4])
    box_confidence = np.expand_dims(box_confidence, axis=-1)

    box_class_probs = sigmoid(input[..., 5:])

    box_xy = sigmoid(input[..., :2]) * 2 - 0.5

    col = np.tile(np.arange(0, grid_w), grid_h).reshape(-1, grid_w)
    row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_w)
    col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    grid = np.concatenate((col, row), axis=-1)
    box_xy += grid
    # Grid units -> pixels: x uses the horizontal stride, y the vertical stride
    box_xy *= (int(IMG_SIZE[0] / grid_w), int(IMG_SIZE[1] / grid_h))

    box_wh = pow(sigmoid(input[..., 2:4]) * 2, 2)
    box_wh = box_wh * anchors

    box = np.concatenate((box_xy, box_wh), axis=-1)

    return box, box_confidence, box_class_probs


def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with box threshold. It's a bit different with origin yolov5 post process!

    # Arguments
        boxes: ndarray, boxes of objects.
        box_confidences: ndarray, confidences of objects.
        box_class_probs: ndarray, class_probs of objects.

    # Returns
        boxes: ndarray, filtered boxes.
        classes: ndarray, classes for boxes.
        scores: ndarray, scores for boxes.
    """
    boxes = boxes.reshape(-1, 4)
    box_confidences = box_confidences.reshape(-1)
    box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])

    _box_pos = np.where(box_confidences >= BOX_THRESH)
    boxes = boxes[_box_pos]
    box_confidences = box_confidences[_box_pos]
    box_class_probs = box_class_probs[_box_pos]

    class_max_score = np.max(box_class_probs, axis=-1)
    classes = np.argmax(box_class_probs, axis=-1)
    _class_pos = np.where(class_max_score * box_confidences >= BOX_THRESH)

    boxes = boxes[_class_pos]
    classes = classes[_class_pos]
    scores = (class_max_score * box_confidences)[_class_pos]

    return boxes, classes, scores


def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Arguments
        boxes: ndarray, boxes of objects.
        scores: ndarray, scores of objects.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep


def yolov5_post_process(input_data):
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    # Class-wise NMS
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]

        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores


def draw(image, boxes, scores, classes):
    """Draw the boxes on the image.

    # Argument:
        image: original image.
        boxes: ndarray, boxes of objects in [x1, y1, x2, y2] order.
        classes: ndarray, classes of objects.
        scores: ndarray, scores of objects.
    """
    for box, score, cl in zip(boxes, scores, classes):
        left, top, right, bottom = box
        print('class: {}, score: {}'.format(CLASSES[cl], score))
        print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(left, top, right, bottom))
        left = int(left)
        top = int(top)
        right = int(right)
        bottom = int(bottom)

        cv2.rectangle(image, (left, top), (right, bottom), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (left, top - 6),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.6, (0, 0, 255), 2)


def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
    # Resize and pad image while meeting stride-multiple constraints
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    # Scale ratio (new / old)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding

    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)


if __name__ == '__main__':

    # Create the RKNN object
    rknn = RKNN()

    if not os.path.exists(ONNX_MODEL):
        print('model not exist')
        exit(-1)

    # Pre-processing config: the model's runtime parameters
    print('--> Config model')
    rknn.config(reorder_channel='0 1 2',          # input channel order
                mean_values=[[0, 0, 0]],          # per-channel mean
                std_values=[[255, 255, 255]],     # per-channel std (divisor)
                optimization_level=3,             # enable all optimizations
                target_platform='rv1126',         # platform the RKNN model will run on
                output_optimize=1,                # not described in the official documentation
                quantize_input_node=QUANTIZE_ON)  # whether to quantize the input node
    print('done')

    # Load the ONNX model to be converted
    print('--> Loading model')
    ret = rknn.load_onnx(model=ONNX_MODEL)
    if ret != 0:
        print('Load yolov5 failed!')
        exit(ret)
    print('done')

    # Build the RKNN model
    # do_quantization: whether to quantize
    # dataset: input data used for quantization calibration
    print('--> Building model')
    ret = rknn.build(do_quantization=QUANTIZE_ON, dataset=DATASET)
    if ret != 0:
        print('Build yolov5 failed!')
        exit(ret)
    print('done')

    # Export the model as .rknn
    print('--> Export RKNN model')
    ret = rknn.export_rknn(RKNN_MODEL)
    if ret != 0:
        print('Export yolov5 rknn failed!')
        exit(ret)
    print('done')

    # ============================================================
    # Once conversion succeeds, the code below runs the model
    # and evaluates its performance
    # ============================================================

    # Initialize the runtime environment (no target: PC simulator)
    print('--> Init runtime environment')
    ret = rknn.init_runtime()
    # ret = rknn.init_runtime('rk1808', device_id='1808')
    if ret != 0:
        print('Init runtime environment failed')
        exit(ret)
    print('done')

    # Set inputs
    img = cv2.imread(IMG_PATH)
    img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE[1], IMG_SIZE[0]))
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

    # Inference
    print('--> Running model')
    outputs = rknn.inference(inputs=[img])

    # Post process: reshape each output to (3, 5 + num_classes, H, W),
    # then transpose to (H, W, 3, 5 + num_classes)
    input0_data = outputs[0]
    input1_data = outputs[1]
    input2_data = outputs[2]

    input0_data = input0_data.reshape([3, -1] + list(input0_data.shape[-2:]))
    input1_data = input1_data.reshape([3, -1] + list(input1_data.shape[-2:]))
    input2_data = input2_data.reshape([3, -1] + list(input2_data.shape[-2:]))

    input_data = list()
    input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

    boxes, classes, scores = yolov5_post_process(input_data)

    img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
    if boxes is not None:
        draw(img_1, boxes, scores, classes)
    # cv2.imshow("post process result", img_1)
    # cv2.waitKeyEx(0)

    # Performance evaluation
    rknn.eval_perf(inputs=[img], is_print=True)

    rknn.release()
```
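The example draws its boxes on the letterboxed 640×640 image. To draw them on the original picture instead, the boxes must be mapped back using the `ratio` and `(dw, dh)` values that `letterbox` returns: subtract the padding, divide by the resize ratio, and clip to the original size. The sketch below assumes boxes in `[x1, y1, x2, y2]` order, as produced by `xywh2xyxy`. `scale_boxes_back` is a hypothetical helper, written in plain Python for clarity.

```python
from typing import List, Sequence, Tuple


def _clip(v: float, hi: float) -> float:
    """Clamp v into [0, hi]."""
    return min(max(v, 0.0), hi)


def scale_boxes_back(boxes: Sequence[Sequence[float]],
                     ratio: Tuple[float, float],
                     pad: Tuple[float, float],
                     orig_shape: Tuple[int, int]) -> List[List[float]]:
    """Undo letterbox(): remove padding, divide by the resize ratio, clip.

    boxes:      [[x1, y1, x2, y2], ...] in letterboxed-image pixels
    ratio:      (rw, rh) as returned by letterbox()
    pad:        (dw, dh) as returned by letterbox(), padding on each side
    orig_shape: (height, width) of the original image
    """
    h, w = orig_shape
    rw, rh = ratio
    dw, dh = pad
    return [[_clip((x1 - dw) / rw, w), _clip((y1 - dh) / rh, h),
             _clip((x2 - dw) / rw, w), _clip((y2 - dh) / rh, h)]
            for x1, y1, x2, y2 in boxes]


# A 1280x720 image letterboxed to 640x640: r = 0.5, dw = 0, dh = 140
print(scale_boxes_back([[100, 200, 300, 400]], (0.5, 0.5), (0.0, 140.0), (720, 1280)))
# → [[200.0, 120.0, 600.0, 520.0]]
```

In the example's `__main__`, this would be called as `scale_boxes_back(boxes, ratio, (dw, dh), original_img.shape[:2])`, where `original_img` is a hypothetical copy of the image read before `letterbox`.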

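4. Development Environment Setup (RKNN-NPU)

In the steps above, we converted the ONNX model to an RKNN model on the PC and confirmed that it produces the expected output. Only after that does board-side validation make sense. Next, we run the model on the actual chip (e.g. the RV1126), completing the full deployment flow from PC to chip.

4.1 Source Structure

This project provides drivers, examples, and related code for the Rockchip NPU. Its source structure is shown in the figure below.

Supported chips: RK1808/RK1806, RV1109/RV1126

Host platform: Ubuntu 18.04

Source structure: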

4.2 Building the Source


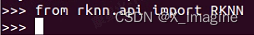

- Download the ARM cross-compiler

Download: https://releases.linaro.org/components/toolchain/binaries/6.4-2017.08/arm-linux-gnueabihf/

Compiler type: 32-bit

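Cross-compiler: extract the toolchain to a fixed path. Projects compiled with it on Ubuntu can then run on the RV1126 board.

arm-none-eabi-gcc: the GNU ARM cross-compiler for 32-bit ARM MCUs (bare-metal), such as ARM7, ARM9, and Cortex-M/R chips.

aarch64-linux-gnu-gcc: Linaro's GCC-based ARM cross-compiler for ARMv8 (64-bit) targets. It can build bare-metal programs, U-Boot, the Linux kernel, file systems, and applications.

arm-linux-gnueabihf-gcc: Linaro's GCC-based cross-compiler for 32-bit ARM Linux with the hard-float ABI. This is the one used for the RV1126 in build.sh below.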

- CMakeLists.txt

Pay attention to library versions. The RV1126 runs a 32-bit system, so every dependency library must also be built as a 32-bit library with the toolchain above.

```cmake
cmake_minimum_required(VERSION 3.4.1)

project(rknn_yolov5_demo_linux)

set(CMAKE_SYSTEM_NAME Linux)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -s -O3")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -s -O3")

if (CMAKE_C_COMPILER MATCHES "aarch64")
  set(LIB_ARCH lib64)
else()
  set(LIB_ARCH lib)
endif()

# rga
set(RGA_DIR ${CMAKE_SOURCE_DIR}/../3rdparty/rga)
include_directories(${RGA_DIR}/include)

# drm
set(DRM_DIR ${CMAKE_SOURCE_DIR}/../3rdparty/drm)
include_directories(${DRM_DIR}/include)
include_directories(${DRM_DIR}/include/libdrm)

include_directories(${CMAKE_SOURCE_DIR}/include)

# rknn api
set(RKNN_API_PATH ${CMAKE_SOURCE_DIR}/../../librknn_api)
include_directories(${RKNN_API_PATH}/include)
set(RKNN_API_LIB ${RKNN_API_PATH}/${LIB_ARCH}/librknn_api.so)

# stb
include_directories(${CMAKE_SOURCE_DIR}/../3rdparty/)

set(CMAKE_INSTALL_RPATH "lib")

add_executable(rknn_yolov5_demo
  src/drm_func.c
  src/rga_func.c
  src/postprocess.cc
  src/main.cc
)

target_link_libraries(rknn_yolov5_demo
  ${RKNN_API_LIB}
  dl
)

# install target and libraries
set(CMAKE_INSTALL_PREFIX ${CMAKE_SOURCE_DIR}/install/rknn_yolov5_demo)
install(TARGETS rknn_yolov5_demo DESTINATION ./)
install(DIRECTORY model DESTINATION ./)
install(PROGRAMS ${RKNN_API_LIB} DESTINATION lib)
```

- Configure build.sh

```bash
#!/bin/bash
set -e

# for rk1808 aarch64
# GCC_COMPILER=${RK1808_TOOL_CHAIN}/bin/aarch64-linux-gnu

# for rk1806 armhf
# GCC_COMPILER=~/opts/gcc-linaro-6.3.1-2017.05-x86_64_arm-linux-gnueabihf/bin/arm-linux-gnueabihf

# for rv1109/rv1126 armhf
# Added by the author: path to the cross-compiler (RV1109_TOOL_CHAIN)
RV1109_TOOL_CHAIN='/home/ll/Mount/kxh_2023/RV1126/gcc-linaro-6.4.1-2017.08-i686_arm-linux-gnueabihf'
GCC_COMPILER=${RV1109_TOOL_CHAIN}/bin/arm-linux-gnueabihf

ROOT_PWD=$( cd "$( dirname $0 )" && cd -P "$( dirname "$SOURCE" )" && pwd )

# build rockx
BUILD_DIR=${ROOT_PWD}/build

if [[ ! -d "${BUILD_DIR}" ]]; then
  mkdir -p ${BUILD_DIR}
fi

cd ${BUILD_DIR}
cmake .. \
    -DCMAKE_C_COMPILER=${GCC_COMPILER}-gcc \
    -DCMAKE_CXX_COMPILER=${GCC_COMPILER}-g++
make -j4
make install
cd -

# cp run_rk180x.sh install/rknn_yolov5_demo/
cp run_rv1109_rv1126.sh install/rknn_yolov5_demo/
```

Compared with the original build.sh, the only change needed is to add the cross-compiler path (RV1109_TOOL_CHAIN). The program can then be built.

```bash
cd ~/RV1126/rknpu-master/rknpu-master/rknn/rknn_api/examples/rknn_yolov5_demo
./build.sh
```

The build output is placed in the install directory. Copy it to the RV1126 board and run it there.
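The comments in build.sh and the `LIB_ARCH` test in CMakeLists.txt together encode a simple mapping: each target chip has a cross-compiler prefix and a library directory. The Python sketch below spells that mapping out for reference. The table entries come from the build.sh comments. `toolchain_for` and the example toolchain path are hypothetical.

```python
from typing import Tuple

# Target chip -> GNU triplet, as listed in the build.sh comments
TRIPLETS = {
    "rk1808": "aarch64-linux-gnu",      # 64-bit ARM
    "rk1806": "arm-linux-gnueabihf",    # 32-bit ARM, hard-float ABI
    "rv1109": "arm-linux-gnueabihf",
    "rv1126": "arm-linux-gnueabihf",
}


def toolchain_for(chip: str, toolchain_root: str) -> Tuple[str, str]:
    """Return (C compiler path, librknn_api library dir) for a target chip."""
    triplet = TRIPLETS[chip.lower()]    # KeyError for unsupported chips
    compiler = "%s/bin/%s-gcc" % (toolchain_root.rstrip("/"), triplet)
    # Mirrors CMakeLists.txt: compilers matching "aarch64" use lib64, others lib
    lib_arch = "lib64" if "aarch64" in compiler else "lib"
    return compiler, lib_arch


print(toolchain_for("RV1126", "/opt/gcc-linaro-6.4.1-2017.08-x86_64_arm-linux-gnueabihf"))
```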

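4.3 Source Code Walkthrough

- Core API functions

Initialize the model

```cpp
// Read the binary RKNN model
int model_data_size = 0;
unsigned char* model_data = load_model(model_name, &model_data_size);
// Create the rknn_context object
rknn_context ctx;
ret = rknn_init(&ctx, model_data, model_data_size, 0);
```

rknn_init creates the rknn_context object, loads the RKNN model, and performs any initialization requested through its flag argument (the last parameter, 0 here).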

rknn_query

```cpp
// Query interface
// Example: query the SDK version information
rknn_sdk_version version;
ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));
// Query the number of inputs and outputs
rknn_input_output_num io_num;
ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
if (ret < 0) {
  printf("rknn_query error ret=%d\n", ret);
  return -1;
}
printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);
```

rknn_query retrieves information such as the model's inputs and outputs, run time, and SDK version.

The supported query commands are listed below:

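Set the model input

```cpp
// Describe the input
rknn_input inputs[1];
memset(inputs, 0, sizeof(inputs));
inputs[0].index = 0;
inputs[0].type = RKNN_TENSOR_UINT8;
inputs[0].size = width * height * channel;
inputs[0].fmt = RKNN_TENSOR_NHWC;
inputs[0].pass_through = 0;
inputs[0].buf = resize_buf;  // preprocessed image data (see the demo's main function)
// Set the input data
rknn_inputs_set(ctx, io_num.n_input, inputs);
```

rknn_inputs_set sets the model's input data. It supports multiple inputs. Each input is an rknn_input struct, which the caller must fill in before passing it.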
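The `size` field must equal the byte size of the input buffer. For a UINT8 NHWC image, that is width × height × channel. The demo's main function reads `width` and `height` from `input_attrs[0].dims`, using different indices depending on the layout. The Python sketch below mirrors that indexing. It assumes, as the demo's code implies for the RKNPU 1.x API, that `dims` lists dimensions innermost-first: `[W, H, C, N]` for NCHW and `[C, W, H, N]` for NHWC. The function name is hypothetical, and Python is used only to show the arithmetic.

```python
from typing import Sequence, Tuple


def input_geometry(dims: Sequence[int], fmt: str,
                   channel: int = 3) -> Tuple[int, int, int]:
    """Return (width, height, uint8 buffer size) the way the C demo derives them.

    Assumption: dims are stored innermost-first, so
    NCHW -> [W, H, C, N] and NHWC -> [C, W, H, N].
    """
    if fmt == "NCHW":
        width, height = dims[0], dims[1]
    elif fmt == "NHWC":
        width, height = dims[1], dims[2]
    else:
        raise ValueError("unsupported format: %s" % fmt)
    # RKNN_TENSOR_UINT8 input: one byte per element
    return width, height, width * height * channel


print(input_geometry([640, 640, 3, 1], "NCHW"))   # → (640, 640, 1228800)
print(input_geometry([3, 416, 320, 1], "NHWC"))   # → (416, 320, 399360)
```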

Model inference

```cpp
ret = rknn_run(ctx, NULL);
```

rknn_run performs one inference pass. Input data must be set with rknn_inputs_set before calling it.

Get the inference results

```cpp
rknn_output outputs[io_num.n_output];
memset(outputs, 0, sizeof(outputs));
for (int i = 0; i < io_num.n_output; i++) {
  outputs[i].want_float = 0;  // whether to convert the output data to float
}
ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);
```

rknn_outputs_get retrieves the model's output data and can fetch several outputs in one call. Each output is an rknn_output struct, and each one must be created and configured before the call.
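With `want_float = 0`, each `outputs[i].buf` holds raw quantized bytes. Post-processing must therefore convert them back to real values using the `scale` and `zp` fields of the output's `rknn_tensor_attr`; the demo below collects these into `out_scales` and `out_zps`. Assuming the asymmetric uint8 scheme described in Section 5, the conversion is `(q - zp) * scale`. The Python sketch below applies it to a raw byte buffer. The helper name and the byte values are illustrative only.

```python
from typing import List


def dequantize_u8(buf: bytes, zp: int, scale: float) -> List[float]:
    """Convert raw uint8 output bytes to floats: real = (q - zp) * scale."""
    return [(q - zp) * scale for q in buf]  # iterating over bytes yields ints 0..255


raw = bytes([0, 128, 255])  # hypothetical output bytes
print(dequantize_u8(raw, zp=128, scale=0.5))  # → [-64.0, 0.0, 63.5]
```

Keeping outputs as uint8 and dequantizing only the values that post-processing actually needs avoids converting the whole tensor to float.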

Release the outputs

```cpp
ret = rknn_outputs_release(ctx, io_num.n_output, outputs);
```

rknn_outputs_release frees the resources associated with the outputs obtained from rknn_outputs_get.

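- Example walkthrough

To keep the overall flow clear, only the main function is analyzed here. The basic steps are:

a. Initialize the model

b. Get the number of inputs and outputs

c. Read the image

d. Set the input data

e. Run inference

f. Get the inference results

g. Post-process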

h. Release resources

```cpp
/*-------------------------------------------
                  Main Functions
-------------------------------------------*/
int main(int argc, char** argv)
{
  int            status     = 0;
  char*          model_name = NULL;
  rknn_context   ctx;
  void*          drm_buf = NULL;
  int            drm_fd  = -1;
  int            buf_fd  = -1;  // converted from buffer handle
  unsigned int   handle;
  size_t         actual_size = 0;
  int            img_width   = 0;
  int            img_height  = 0;
  int            img_channel = 0;
  rga_context    rga_ctx;
  drm_context    drm_ctx;
  const float    nms_threshold      = NMS_THRESH;
  const float    box_conf_threshold = BOX_THRESH;
  struct timeval start_time, stop_time;
  int            ret;
  memset(&rga_ctx, 0, sizeof(rga_context));
  memset(&drm_ctx, 0, sizeof(drm_context));

  if (argc != 3) {
    printf("Usage: %s <rknn model> <bmp> \n", argv[0]);
    return -1;
  }

  printf("post process config: box_conf_threshold = %.2f, nms_threshold = %.2f\n", box_conf_threshold, nms_threshold);

  model_name       = (char*)argv[1];
  char* image_name = argv[2];

  if (strstr(image_name, ".jpg") != NULL || strstr(image_name, ".png") != NULL) {
    printf("Error: read %s failed! only support .bmp format image\n", image_name);
    return -1;
  }

  /* Create the neural network */
  // 1. Load the binary RKNN model
  // 2. Initialize the rknn_context object
  printf("Loading model...\n");
  int            model_data_size = 0;
  unsigned char* model_data      = load_model(model_name, &model_data_size);
  ret = rknn_init(&ctx, model_data, model_data_size, 0);
  if (ret < 0) {
    printf("rknn_init error ret=%d\n", ret);
    return -1;
  }

  // 3. Query the SDK version
  rknn_sdk_version version;
  ret = rknn_query(ctx, RKNN_QUERY_SDK_VERSION, &version, sizeof(rknn_sdk_version));
  if (ret < 0) {
    printf("rknn_query error ret=%d\n", ret);
    return -1;
  }
  printf("sdk version: %s driver version: %s\n", version.api_version, version.drv_version);

  // 4. Query the number of model inputs and outputs
  rknn_input_output_num io_num;
  ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
  if (ret < 0) {
    printf("rknn_query error ret=%d\n", ret);
    return -1;
  }
  printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

  // 5. Query the input attributes: size, shape, name, ...
  rknn_tensor_attr input_attrs[io_num.n_input];
  memset(input_attrs, 0, sizeof(input_attrs));
  for (int i = 0; i < io_num.n_input; i++) {
    input_attrs[i].index = i;
    ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));
    if (ret < 0) {
      printf("rknn_query error ret=%d\n", ret);
      return -1;
    }
    dump_tensor_attr(&(input_attrs[i]));
  }

  // 6. Query the output attributes: size, shape, name, quantization parameters, ...
  rknn_tensor_attr output_attrs[io_num.n_output];
  memset(output_attrs, 0, sizeof(output_attrs));
  for (int i = 0; i < io_num.n_output; i++) {
    output_attrs[i].index = i;
    ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));
    if (ret < 0) {
      printf("rknn_query error ret=%d\n", ret);
      return -1;
    }
    dump_tensor_attr(&(output_attrs[i]));
    if (output_attrs[i].qnt_type != RKNN_TENSOR_QNT_AFFINE_ASYMMETRIC || output_attrs[i].type != RKNN_TENSOR_UINT8) {
      fprintf(stderr,
              "The Demo required for a Affine asymmetric u8 quantized rknn model, but output quant type is %s, output "
              "data type is %s\n",
              get_qnt_type_string(output_attrs[i].qnt_type), get_type_string(output_attrs[i].type));
      return -1;
    }
  }

  int channel = 3;
  int width   = 0;
  int height  = 0;
  if (input_attrs[0].fmt == RKNN_TENSOR_NCHW) {
    printf("model is NCHW input fmt\n");
    width  = input_attrs[0].dims[0];
    height = input_attrs[0].dims[1];
  } else {
    printf("model is NHWC input fmt\n");
    width  = input_attrs[0].dims[1];
    height = input_attrs[0].dims[2];
  }

  printf("model input height=%d, width=%d, channel=%d\n", height, width, channel);

  // 7. Load the image (OpenCV could be used instead)
  CImg<unsigned char> img(image_name);
  unsigned char*      input_data = NULL;
  input_data = load_image(image_name, &img_height, &img_width, &img_channel, &input_attrs[0]);
  if (!input_data) {
    return -1;
  }

  // 8. Describe the input tensor
  rknn_input inputs[1];
  memset(inputs, 0, sizeof(inputs));
  inputs[0].index        = 0;
  inputs[0].type         = RKNN_TENSOR_UINT8;
  inputs[0].size         = width * height * channel;
  inputs[0].fmt          = RKNN_TENSOR_NHWC;
  inputs[0].pass_through = 0;

  // DRM alloc buffer
  drm_fd  = drm_init(&drm_ctx);
  drm_buf = drm_buf_alloc(&drm_ctx, drm_fd, img_width, img_height, channel * 8, &buf_fd, &handle, &actual_size);
  memcpy(drm_buf, input_data, img_width * img_height * channel);
  void* resize_buf = malloc(height * width * channel);

  // Init the RGA context and resize the image to the model input size
  RGA_init(&rga_ctx);
  img_resize_slow(&rga_ctx, drm_buf, img_width, img_height, resize_buf, width, height);
  inputs[0].buf = resize_buf;
  gettimeofday(&start_time, NULL);
  // 9. Set the input data
  rknn_inputs_set(ctx, io_num.n_input, inputs);

  rknn_output outputs[io_num.n_output];
  memset(outputs, 0, sizeof(outputs));
  for (int i = 0; i < io_num.n_output; i++) {
    outputs[i].want_float = 0;  // keep raw uint8 outputs; they are dequantized in post_process
  }

  // 10. Run inference once
  ret = rknn_run(ctx, NULL);
  if (ret < 0) {
    printf("rknn_run error ret=%d\n", ret);
    return -1;
  }
  // 11. Get the inference results
  ret = rknn_outputs_get(ctx, io_num.n_output, outputs, NULL);
  if (ret < 0) {
    printf("rknn_outputs_get error ret=%d\n", ret);
    return -1;
  }
  gettimeofday(&stop_time, NULL);
  printf("once run use %f ms\n", (__get_us(stop_time) - __get_us(start_time)) / 1000);

  // 12. Post-process the outputs
  float scale_w = (float)width / img_width;
  float scale_h = (float)height / img_height;

  detect_result_group_t detect_result_group;
  // 12.1 Collect each output's quantization parameters for dequantization
  std::vector<float>    out_scales;
  std::vector<uint32_t> out_zps;
  for (int i = 0; i < io_num.n_output; ++i) {
    out_scales.push_back(output_attrs[i].scale);
    out_zps.push_back(output_attrs[i].zp);
  }
  // 12.2 Run post-processing
  post_process((uint8_t*)outputs[0].buf, (uint8_t*)outputs[1].buf, (uint8_t*)outputs[2].buf, height, width,
               box_conf_threshold, nms_threshold, scale_w, scale_h, out_zps, out_scales, &detect_result_group);

  // 12.3 Draw the detections
  char text[256];
  const unsigned char blue[]  = {0, 0, 255};
  const unsigned char white[] = {255, 255, 255};
  for (int i = 0; i < detect_result_group.count; i++) {
    detect_result_t* det_result = &(detect_result_group.results[i]);
    snprintf(text, sizeof(text), "%s %.2f", det_result->name, det_result->prop);
    printf("%s @ (%d %d %d %d) %f\n", det_result->name, det_result->box.left, det_result->box.top,
           det_result->box.right, det_result->box.bottom, det_result->prop);
    int x1 = det_result->box.left;
    int y1 = det_result->box.top;
    int x2 = det_result->box.right;
    int y2 = det_result->box.bottom;
    // draw box
    img.draw_rectangle(x1, y1, x2, y2, blue, 1, ~0U);
    img.draw_text(x1, y1 - 12, text, white);
  }
  img.save("./out.bmp");

  // 13. Release the output data
  ret = rknn_outputs_release(ctx, io_num.n_output, outputs);

  // 14. Destroy the rknn_context object and free the other resources
  ret = rknn_destroy(ctx);
  drm_buf_destroy(&drm_ctx, drm_fd, buf_fd, handle, drm_buf, actual_size);
  drm_deinit(&drm_ctx, drm_fd);
  RGA_deinit(&rga_ctx);
  if (model_data) {
    free(model_data);
  }

  if (resize_buf) {
    free(resize_buf);
  }
  stbi_image_free(input_data);

  return 0;
}
```
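main() passes `scale_w = width / img_width` and `scale_h = height / img_height` to `post_process`, because the demo resizes the image directly to the model input size without letterboxing. To map a box from model-input pixels back to the original image, clamp it to the input size and then divide by those scales. The Python sketch below shows that arithmetic. The helper name is hypothetical, and it is not a transcription of the demo's `postprocess.cc`.

```python
from typing import Tuple


def box_to_image(box: Tuple[float, float, float, float],
                 model_w: int, model_h: int,
                 img_w: int, img_h: int) -> Tuple[int, int, int, int]:
    """Map (x1, y1, x2, y2) from model-input pixels to original-image pixels."""
    scale_w = model_w / img_w          # same definition as in main()
    scale_h = model_h / img_h

    def clamp(v: float, hi: int) -> float:
        return min(max(v, 0.0), hi)

    x1, y1, x2, y2 = box
    return (int(clamp(x1, model_w) / scale_w), int(clamp(y1, model_h) / scale_h),
            int(clamp(x2, model_w) / scale_w), int(clamp(y2, model_h) / scale_h))


# 640x640 model input, 1280x320 source image: scale_w = 0.5, scale_h = 2.0
print(box_to_image((100, 200, 700, 400), 640, 640, 1280, 320))  # → (200, 100, 1280, 200)
```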

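4.4 Running on the Board

- Push the install directory to the board: adb push install /userdata/

- On the board, change into the pushed rknn_yolov5_demo directory and point the dynamic loader at the bundled libraries: export LD_LIBRARY_PATH=./lib

- Run the demo: ./rknn_yolov5_demo <model_path> <image_path> (the image must be a .bmp file, since the demo rejects .jpg and .png)

Alternatively, create a shell script to run it. The script below calls a customized build of the demo (yolov5_rknn_demo) that takes --model_path, --data_path and --save_path options, unlike the positional arguments above: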

```bash
#!/bin/bash

set -e  # exit as soon as any command fails; without it, errors are reported but the script keeps running

# input image folder
data_path="image_dir/"

# output folder
savepath="result/"
mkdir -p "$savepath"

export LD_LIBRARY_PATH=/tmp/lib

# run the program on every image in the input folder
for dir in $(ls "$data_path")
do
    echo "$dir"
    ./model/yolov5_rknn_demo --model_path=./model/yolov5.rknn --data_path="${data_path}${dir}" --save_path="$savepath"
done
```

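5. Quantization Algorithms

- Overview

Purpose of quantization: a quantized model stores its weights at lower precision (e.g. int8/uint8/int16). The deployed model therefore needs less storage and runs inference faster. Deep learning frameworks, however, usually train and save models in floating point, which makes quantization a crucial step in model conversion.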

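RKNN Toolkit supports quantized models in two main forms:

- Post-training static quantization

With this approach, RKNN Toolkit loads the user's trained floating-point model. It then estimates the range (minimum, maximum) of all floating-point data in the model, using the quantization method specified through the config interface and a user-supplied calibration dataset (a small subset of the training or validation data, about 100 to 500 images). RKNN Toolkit currently supports three quantization methods.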
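Range estimation can be pictured as tracking the minimum and maximum of each tensor across every calibration sample. The Python sketch below assumes a plain global min/max per tensor; the toolkit's actual statistics may be more elaborate. The tensor name and activation values are made up for illustration.

```python
from typing import Dict, Iterable, List, Tuple


def calibrate_ranges(samples: Iterable[Dict[str, List[float]]]) -> Dict[str, Tuple[float, float]]:
    """Track the global (min, max) of every named tensor across calibration samples."""
    ranges: Dict[str, Tuple[float, float]] = {}
    for activations in samples:              # one dict per calibration image
        for name, values in activations.items():
            lo, hi = min(values), max(values)
            if name in ranges:
                old_lo, old_hi = ranges[name]
                lo, hi = min(lo, old_lo), max(hi, old_hi)
            ranges[name] = (lo, hi)
    return ranges


calib = [
    {"conv1": [-1.5, 0.2, 3.0]},   # made-up activations from image 1
    {"conv1": [-0.5, 4.5, 1.0]},   # made-up activations from image 2
]
print(calibrate_ranges(calib))  # → {'conv1': (-1.5, 4.5)}
```

This also shows why the calibration set should be representative: a range that is too narrow saturates real inputs, and a single outlier can stretch the range and waste quantization levels.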

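Method 1: asymmetric_quantized-u8 (the default quantization method)

This is the post-training quantization algorithm supported by TensorFlow and recommended by Google. According to the paper "Quantizing deep convolutional networks for efficient inference: A whitepaper", this scheme loses the least accuracy. The formula is:

$$
\text{quant} = \operatorname{round}\left(\frac{\text{float\_num}}{\text{scale}}\right) + \text{zero\_point}
$$

$$
\text{quant} = \min\bigl(\max(\text{quant},\ \text{range\_min}),\ \text{range\_max}\bigr)
$$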

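Here quant is the quantized value, float_num is the floating-point value before quantization, scale is the scaling factor (float32), and zero_point is the quantized value that corresponds to the real value 0 (int32). Finally, quant is saturated to [range_min, range_max]. Because the target type is uint8, range_max = 255 and range_min = 0.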
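Here is the same scheme in code: quant = round(float_num / scale) + zero_point, saturated to [0, 255]. The excerpt above does not specify how scale and zero_point are derived from the calibrated (min, max). This sketch assumes the common derivation from the cited whitepaper: extend the range to include 0, set scale = (max - min) / 255, and set zero_point = round(-min / scale). The function names are hypothetical.

```python
from typing import Tuple


def affine_params(rmin: float, rmax: float) -> Tuple[float, int]:
    """Derive (scale, zero_point) for asymmetric uint8 from a float range."""
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)   # the range must contain 0
    scale = (rmax - rmin) / 255.0
    if scale == 0.0:                              # degenerate all-zero tensor
        return 1.0, 0
    zero_point = int(round(-rmin / scale))
    return scale, min(max(zero_point, 0), 255)


def quantize(x: float, scale: float, zero_point: int) -> int:
    """quant = round(x / scale) + zero_point, saturated to [0, 255]."""
    q = int(round(x / scale)) + zero_point
    return min(max(q, 0), 255)


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Approximate real value: (q - zero_point) * scale."""
    return (q - zero_point) * scale


scale, zp = affine_params(-1.0, 3.0)
print(round(scale, 6), zp)                  # → 0.015686 64
for x in (-1.0, 0.0, 1.0, 5.0):             # 5.0 is outside the range and saturates to 255
    q = quantize(x, scale, zp)
    print(x, q, round(dequantize(q, scale, zp), 4))
```

Inside the calibrated range the round-trip error is at most scale / 2. Values outside the range are clipped, which is why a representative calibration set matters.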

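Method 2: dynamic_fixed_point-i8

Method 3: dynamic_fixed_point-i16

dynamic_fixed_point-i16 uses the same quantization formula as dynamic_fixed_point-i8, except that its bit width bw is 16, so the saturation range becomes [-32768, 32767]. The NPUs of the RK3399Pro and RK1808 include a 300 GOPS int16 compute unit. This method is worth considering for models whose accuracy drops significantly when quantized to 8 bits.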
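The dynamic fixed-point formula is not reproduced in this section. For illustration, the sketch below assumes the standard dynamic fixed-point scheme: each tensor gets a fractional length fl, values are quantized as round(x · 2^fl), and the result is saturated to the signed bw-bit range (bw = 8 or 16). The rule for choosing fl (the largest fl at which the tensor's maximum magnitude still fits) and the helper names are assumptions, not taken from the RKNN documentation.

```python
import math
from typing import Sequence


def choose_fl(values: Sequence[float], bw: int) -> int:
    """Largest fractional length such that max |x| fits in a signed bw-bit integer."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return bw - 1
    int_bits = math.floor(math.log2(max_abs)) + 1   # integer bits, excluding the sign bit
    return bw - 1 - int_bits


def quantize_dfp(x: float, fl: int, bw: int) -> int:
    """quant = round(x * 2**fl), saturated to [-2**(bw-1), 2**(bw-1) - 1]."""
    q = int(round(x * 2.0 ** fl))
    lo, hi = -(2 ** (bw - 1)), 2 ** (bw - 1) - 1
    return min(max(q, lo), hi)


weights = [-1.7, 0.3, 2.9]                 # made-up tensor values
for bw in (8, 16):                         # i8 vs i16: same formula, different bit width
    fl = choose_fl(weights, bw)
    q = [quantize_dfp(w, fl, bw) for w in weights]
    recon = [v / 2.0 ** fl for v in q]     # dequantize: q / 2**fl
    print("bw=%d fl=%d q=%s recon=%s" % (bw, fl, q, recon))
```

Going from 8 to 16 bits adds 8 fractional bits here, which shrinks the rounding step by a factor of 256. That matches the motivation for dynamic_fixed_point-i16 given above.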

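- Quantization-aware training

Quantization-aware training produces a model with quantized weights. RKNN Toolkit currently supports models obtained through quantization-aware training in TensorFlow and PyTorch. For the technical details of quantization-aware training, see:

TensorFlow: https://www.tensorflow.org/model_optimization/guide/quantization/training

PyTorch: https://pytorch.org/blog/introduction-to-quantization-on-pytorch/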