Pytorch梯度下降——up主:刘二大人《PyTorch深度学习实践》

教程: https://www.bilibili.com/video/BV1Y7411d7Ys?p=2&vd_source=715b347a0d6cb8aa3822e5a102f366fe

数据集:

x

d

a

t

a

=

[

1.0

,

2.0

,

3.0

]

y

d

a

t

a

=

[

2.0

,

4.0

,

6.0

]

x_{data} = [1.0, 2.0, 3.0] \\y_{data} = [2.0, 4.0, 6.0]

xdata=[1.0,2.0,3.0]ydata=[2.0,4.0,6.0]

参数:

w

l

i

s

t

=

[

0.0

,

4.0

,

0.1

]

b

l

i

s

t

=

[

−

2.0

,

2.1

,

0.1

]

w_{list} = [0.0, 4.0, 0.1]\\b_{list} = [-2.0, 2.1, 0.1]

wlist=[0.0,4.0,0.1]blist=[−2.0,2.1,0.1]

模型:

y

=

w

∗

x

y = w*x

y=w∗x

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

x_data = [1.0, 2.0, 3.0]

y_data = [2.0, 4.0, 6.0]

epoch_log = []

cost_log = []

#随机化参数

w = 1.0

def forward(x):

return x * w

#定义损失函数

def loss(xs, ys):

cost = 0

for x,y in zip(xs, ys):

y_pred = forward(x)

cost += (y_pred - y) **2

return cost / len(xs)

def gradient(xs, ys):

grad = 0

for x, y in zip(xs, ys):

grad += 2 * x * (x * w - y)

return grad / len(xs)

for epoch in range(100):

cost_val = loss(x_data, y_data)

cost_log.append(cost_val)

grad_val = gradient(x_data, y_data)

epoch_log.append(epoch)

w -= 0.01 * grad_val

print('Epoch:', epoch, 'w =', w, 'loss =', cost_val)

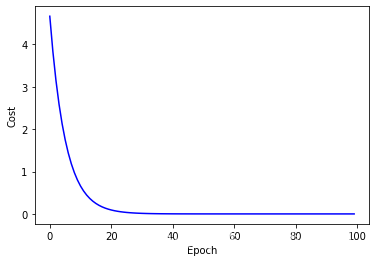

plt.figure()

plt.plot(epoch_log, cost_log, c='b')

plt.xlabel('Epoch')

plt.ylabel('Cost')

plt.show()