Coursera上Golang专项课程3:Concurrency in Go 学习笔记(完结)

Concurrency in Go

本文是 Concurrency in Go 这门课的学习笔记,如有侵权,请联系删除。

文章目录

- Concurrency in Go

- MODULE 1: Why Use Concurrency?

- Learning Objectives

- M1.1.1 - Parallel Execution

- M1.1.2 - Von Neumann Bottleneck

- M1.1.3 - Power Wall

- M1.2.1 - Concurrent vs Parallel

- M1.2.2 - Hiding Latency

- Module 1 Quiz

- Peer-graded Assignment: Module 1 Activity

- MODULE 2: CONCURRENCY BASICS

- Learning Objectives

- M2.1.1- Processes

- M2.1.2 - Scheduling

- M2.1.3 - Threads and Goroutines

- M2.2.1 - Interleavings

- M2.2.2 - Race Conditions

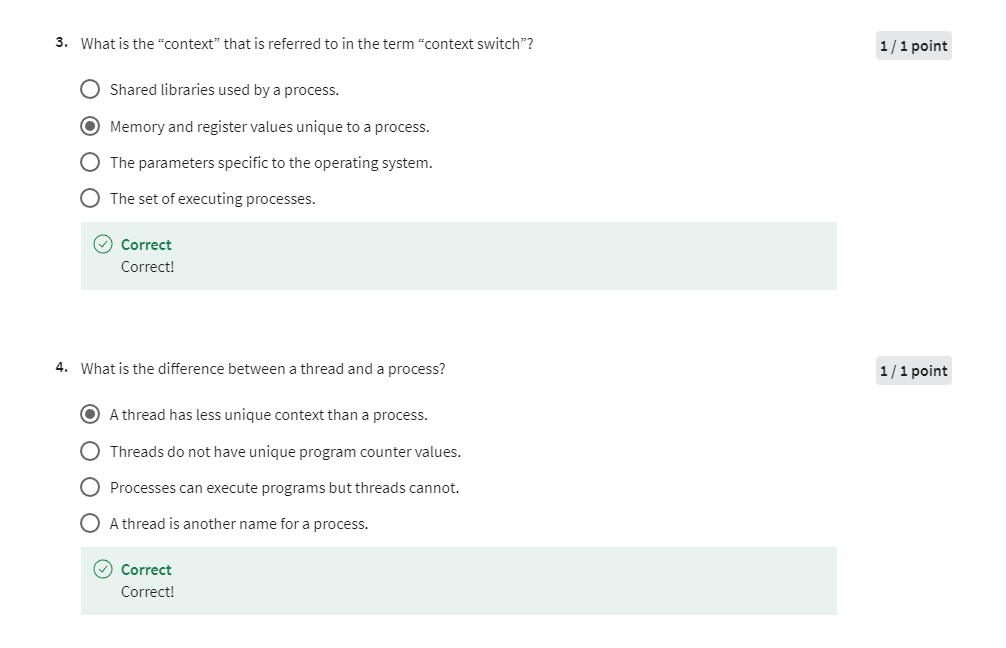

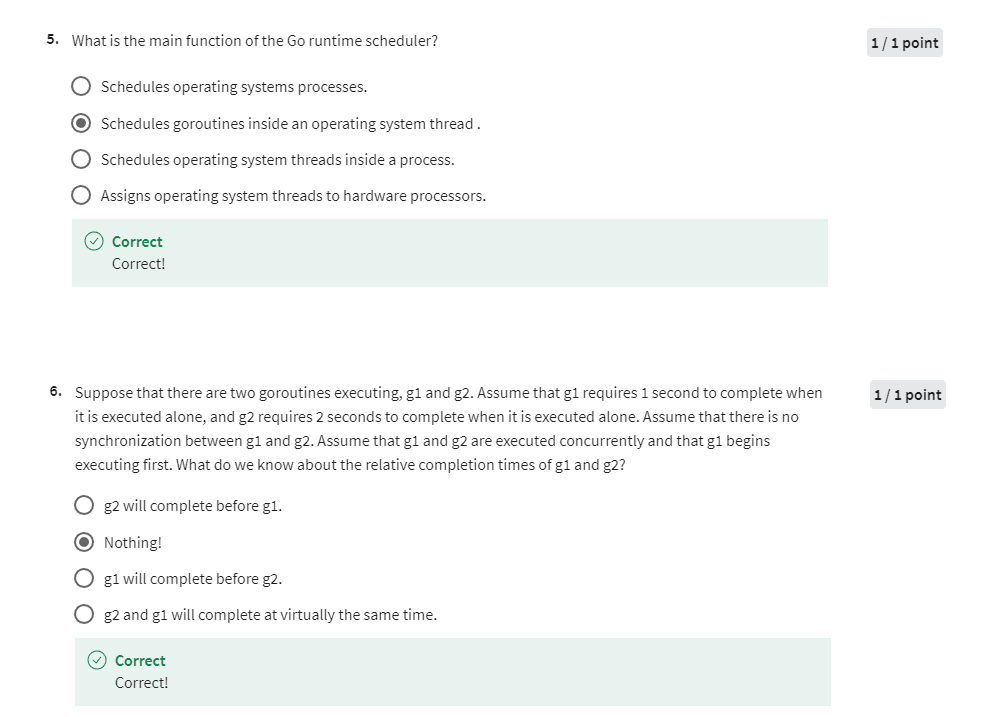

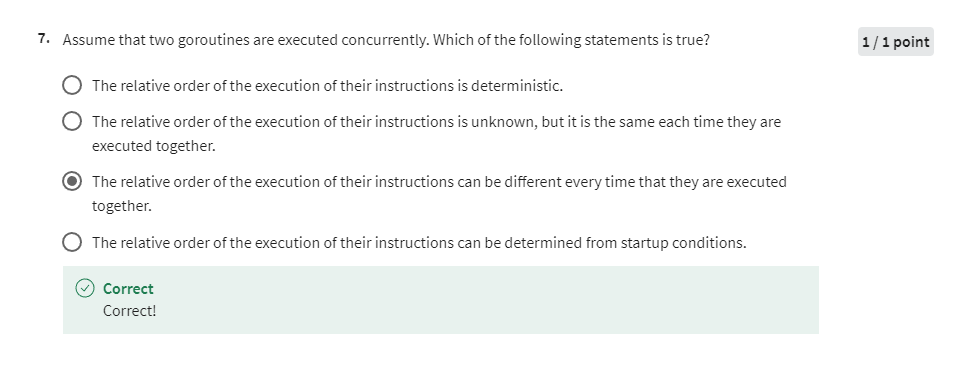

- Module 2 Quiz

- Peer-graded Assignment: Module 2 Activity

- MODULE 3: THREADS IN GO

- Learning Objectives

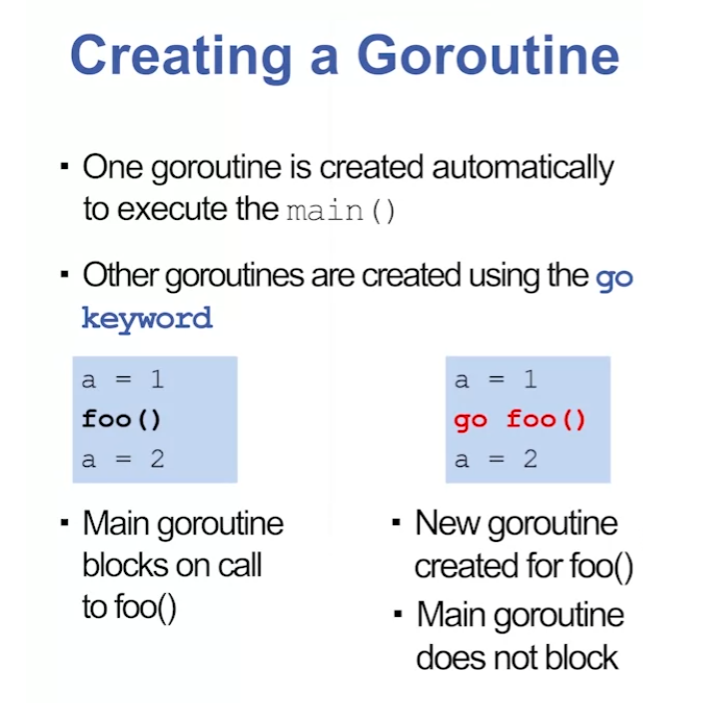

- M3.1.1 - Goroutines

- M3.1.2 - Exiting Goroutines

- M3.2.1 - Basic Synchronization

- M3.2.2 - Wait Groups

- M3.3.1 - Communication

- M3.3.2 - Blocking on Channels

- M3.3.3 - Buffered Channels

- Module 3 Quiz

- Peer-graded Assignment: Module 3 Activity

- MODULE 4: SYNCHRONIZED COMMUNICATION

- Learning Objectives

- M4.1.1 - Blocking on Channels

- M4.1.2 - Select

- M4.2.1 - Mutual Exclusion

- M4.2.2 - Mutex

- M4.2.3 - Mutex Methods

- M4.3.1 - Once Synchronization

- M4.3.2 - Deadlock

- M4.3.3 - Dining Philosophers

- Module 4 Quiz

- Peer-graded Assignment: Module 4 Activity

- 后记

MODULE 1: Why Use Concurrency?

This course introduces the concept of concurrency in Go. The first module sets the stage by reviewing the physical factors that can restrict microprocessor performance increases in the future.

Learning Objectives

- Identify basic terms and concepts related to concurrency.

- Identify hardware limitations that affect the design of future architectures.

- Explain Moore’s Law and how it affects the future of microprocessor design.

M1.1.1 - Parallel Execution

Parallel Execution

并行执行指的是同时执行多个任务或进程。在 Go 中,可以利用 goroutine 和 channel 来实现并行执行。Goroutine 是由 Go 运行时管理的轻量级线程,它们允许函数并发执行。Channel 提供了 goroutine 之间同步和交换数据的通信机制。

要在 Go 中利用并行执行,可以启动多个 goroutine 来并发执行任务。这样可以利用现代 CPU 的多核架构,提高程序的效率。

以下是如何在 Go 中实现并行执行的简要概述:

- Goroutine(协程):Goroutine 是可以与其他 goroutine 并发执行的函数。可以使用

go关键字后跟函数调用来创建新的 goroutine。

func main() {

// 同时启动两个 goroutine

go 任务1()

go 任务2()

// 等待 goroutine 完成

time.Sleep(time.Second)

}

func 任务1() {

// 任务1 的实现

}

func 任务2() {

// 任务2 的实现

}

- Channel(通道):Channel 用于实现 goroutine 之间的通信和同步。它们允许 goroutine 发送和接收值以协调它们的执行。

func main() {

// 创建用于通信的通道

ch := make(chan int)

// 启动一个 goroutine 执行任务

go 任务(ch)

// 从通道接收数据

结果 := <-ch

fmt.Println("结果:", 结果)

}

func 任务(ch chan int) {

// 执行任务

// 通过通道发送结果

ch <- 42

}

通过利用 goroutine 和 channel,可以轻松实现 Go 中的并行执行,实现任务的高效并发处理。

M1.1.2 - Von Neumann Bottleneck

冯·诺依曼瓶颈(Von Neumann Bottleneck)是指计算机体系结构中的一种瓶颈,源于传统冯·诺依曼计算机体系结构的设计。这一瓶颈主要是指在传统计算机体系结构中,CPU(中央处理器)和内存之间的数据传输速度相对较慢,导致CPU在处理数据时需要等待内存的读写操作完成,从而限制了整个计算机系统的性能表现。

具体来说,冯·诺依曼计算机体系结构包括一个中央处理器(CPU)、一个内存(Memory)、一个输入/输出(I/O)系统和一个外部存储器(Storage)。在这种体系结构中,CPU 通过总线与内存进行数据交换。然而,由于内存的读写速度相对较慢,CPU 在执行指令时经常需要等待内存的读写操作完成,导致 CPU 的处理速度受限。

冯·诺依曼瓶颈对计算机系统的性能产生了负面影响,尤其是在处理大规模数据和复杂计算任务时。为了解决这一问题,人们提出了许多优化策略和技术,如高速缓存(Cache)、指令级并行(Instruction-Level Parallelism)、多核处理器(Multi-Core Processor)等,以提高 CPU 和内存之间的数据传输速度,从而缓解冯·诺依曼瓶颈带来的性能瓶颈。

The “Von Neumann Bottleneck” refers to the limitation imposed by the sequential nature of traditional von Neumann architecture, which slows down overall system performance. This bottleneck arises due to the architectural design of traditional computers, which separate memory and processing units and require data to be transferred between them through a single communication pathway. As a result, the speed at which data can be processed is constrained by the rate at which data can be moved between the memory and processing units, leading to a bottleneck in system performance.

The von Neumann architecture, proposed by John von Neumann in the 1940s, consists of a central processing unit (CPU) that executes instructions fetched from memory. The CPU and memory are connected by a bus, which serves as the communication pathway for data transfer between the two components. While this architecture has been highly successful and widely used in conventional computers, it suffers from the Von Neumann Bottleneck due to the sequential processing of instructions and data transfer operations.

The Von Neumann Bottleneck becomes increasingly problematic as the speed of CPUs continues to outpace the rate of improvement in memory access times and data transfer speeds. This limitation hinders the overall performance of computing systems, particularly in applications requiring high-speed data processing and large-scale data analytics.

To mitigate the Von Neumann Bottleneck, various approaches have been proposed, including:

-

Caching techniques: Introducing cache memory closer to the CPU to reduce memory access times and improve data transfer speeds by storing frequently accessed data and instructions.

-

Parallel computing: Leveraging parallel processing architectures and techniques to perform multiple tasks simultaneously, thereby reducing the reliance on sequential data processing and mitigating the bottleneck.

-

Advanced memory architectures: Exploring new memory technologies, such as non-volatile memory (NVM) and high-bandwidth memory (HBM), to improve memory access speeds and data transfer rates, thereby alleviating the bottleneck.

-

Hardware accelerators: Offloading specific computational tasks to specialized hardware accelerators or coprocessors designed to perform those tasks more efficiently, thus reducing the burden on the CPU and memory subsystem.

By adopting these approaches and exploring new architectural paradigms, researchers and engineers aim to overcome the limitations imposed by the Von Neumann Bottleneck and unlock new levels of performance and efficiency in computing systems.

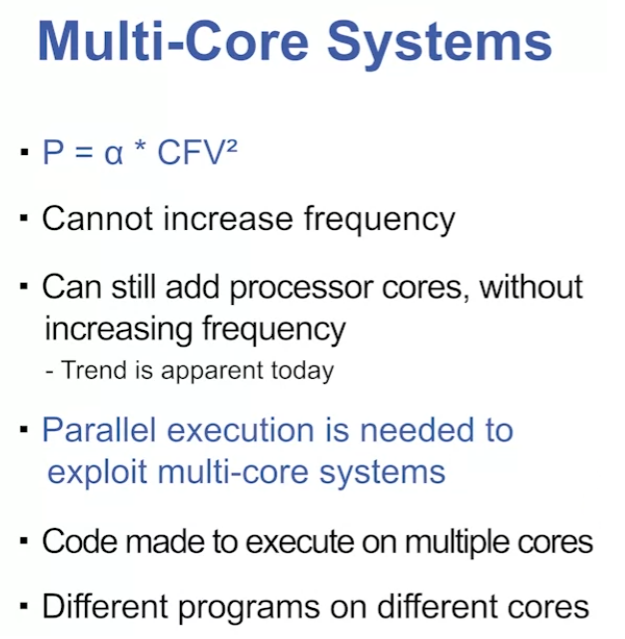

M1.1.3 - Power Wall

Power Wall(功耗墙)是指在计算机芯片设计中,由于功耗和散热问题而导致处理器性能提升受到限制的现象。随着芯片技术的不断进步,处理器的晶体管数量和工作频率不断增加,导致芯片的功耗也呈指数级增长。由于功耗增长导致散热问题变得愈加严重,处理器在工作时需要消耗更多的电力并产生更多的热量,这就形成了“功耗墙”。

功耗墙对计算机系统的影响主要体现在以下几个方面:

-

散热困难:随着芯片功耗的增加,散热问题变得越来越困难。传统的散热方法已经无法满足处理器的散热需求,需要采用更加复杂和昂贵的散热技术来应对高功耗。

-

能源消耗增加:处理器的高功耗导致计算机系统的能源消耗大幅增加,不仅增加了运行成本,也对环境造成了一定的压力。

-

性能提升受限:由于功耗墙的存在,处理器的性能提升受到限制。即使芯片制造技术不断进步,但处理器的功耗和散热问题依然是制约性能提升的重要因素。

为了应对功耗墙带来的挑战,计算机芯片设计者采取了一系列的措施,如优化芯片架构、采用低功耗工艺、引入动态电压调整技术等,以降低处理器的功耗和热量产生,从而延缓功耗墙的影响。同时,也在不断探索新的计算机体系结构和散热技术,以寻找突破功耗墙的解决方案,推动处理器性能的持续提升。

The “Power Wall” refers to the phenomenon in computer chip design where the performance improvement of processors is constrained due to power consumption and heat dissipation issues. As chip technology advances, the number of transistors and operating frequencies of processors increase, leading to exponential growth in chip power consumption. This increase in power consumption exacerbates heat dissipation problems, causing processors to consume more electricity and generate more heat during operation, thus forming the “Power Wall.”

The impact of the Power Wall on computer systems is mainly reflected in the following aspects:

-

Difficulties in heat dissipation: With the increasing power consumption of chips, heat dissipation becomes more challenging. Traditional cooling methods are insufficient to meet the heat dissipation requirements of processors, necessitating the use of more complex and expensive cooling technologies to cope with high power consumption.

-

Increased energy consumption: The high power consumption of processors leads to a significant increase in the energy consumption of computer systems, not only increasing operating costs but also exerting pressure on the environment.

-

Limited performance improvement: Due to the existence of the Power Wall, the performance improvement of processors is constrained. Even as chip manufacturing technology advances, power consumption and heat dissipation issues remain significant factors limiting performance improvements.

To address the challenges posed by the Power Wall, computer chip designers have taken a series of measures, such as optimizing chip architecture, adopting low-power processes, and introducing dynamic voltage adjustment technologies to reduce processor power consumption and heat generation, thereby delaying the impact of the Power Wall. Meanwhile, they continue to explore new computer architectures and cooling technologies to find solutions to break through the Power Wall and drive continuous performance improvement in processors.

Moore’s Law

Moore’s Law(摩尔定律)是指英特尔创始人之一戈登·摩尔在1965年提出的一个观点,该观点预言了集成电路的发展趋势。摩尔的观点是,集成电路上可容纳的晶体管数量每隔18至24个月会翻倍,而成本则保持不变,这导致了性能的指数级增长。摩尔定律为计算机科学和工业的发展指明了方向,推动了半导体技术的不断进步,以及计算设备的不断提升。

然而,随着技术的进步和晶体管尺寸的不断缩小,摩尔定律的有效性逐渐受到挑战。在微纳米尺度下,量子效应和物理限制导致晶体管的性能提升遇到了瓶颈,使得摩尔定律在近年来变得越来越难以实现。尽管如此,摩尔定律仍然被视为一种指导原则,激励着科学家和工程师不断寻求创新,以应对新的技术挑战,推动技术的发展和进步。

Dennard Scaling

Dennard Scaling(丹纳德缩放)是指1967年,罗伯特·丹纳德提出的一种芯片性能和功耗之间的关系模型。根据这个模型,当芯片尺寸减小,晶体管数量增加时,电源电压和晶体管尺寸会按比例缩小,从而使得晶体管的电流密度保持不变,同时功率密度也保持不变。这样,芯片的性能随着尺寸的缩小而提高,但功耗仍然保持稳定。

Dennard Scaling在过去几十年中对半导体工业的发展产生了深远影响,推动了芯片性能的不断提升。然而,随着芯片尺寸的进一步缩小和集成度的提高,Dennard Scaling的效应逐渐减弱。这是因为在微纳米尺度下,量子效应和晶体管尺寸的物理限制导致电源电压无法继续降低,从而使得功耗密度不再保持稳定。

随着技术的发展,Dennard Scaling的失效导致了摩尔定律的终结,即芯片性能不再以指数级增长。为了应对这一挑战,半导体行业开始探索新的技术和架构,如多核处理器、异构计算、新型材料和器件结构等,以继续推动芯片性能和能效的提升。

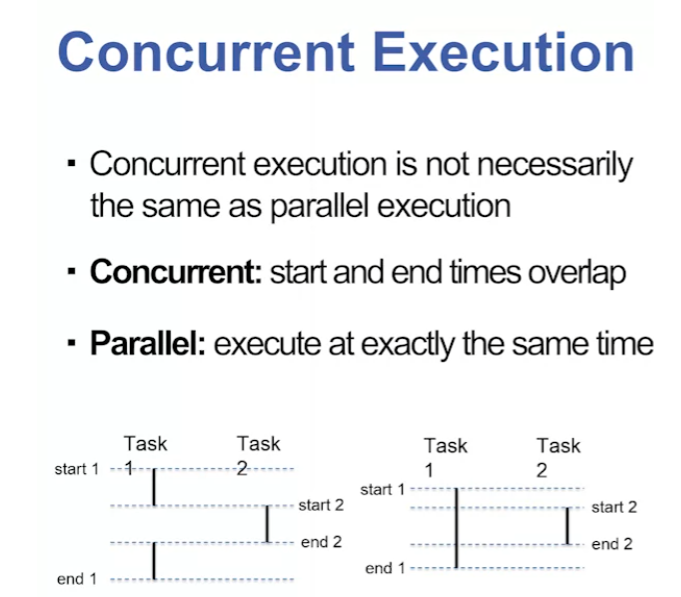

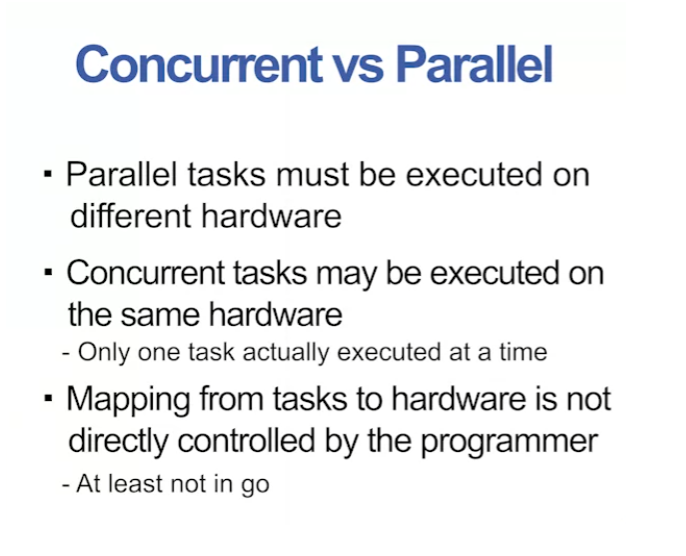

M1.2.1 - Concurrent vs Parallel

“Concurrent” 和 “Parallel” 都涉及同时处理多个任务,但它们之间有一些微妙的区别。

-

Concurrent(并发):并发指的是在同一时间段内执行多个任务,但不一定同时执行。在并发中,多个任务可能以交替的方式执行,通过时间片轮转或事件驱动的方式来切换执行。在单核处理器系统中,通过时间分片的方式实现并发。在多核系统中,不同的任务可以同时在不同的核心上执行,实现真正的并行。

-

Parallel(并行):并行指的是在同一时刻同时执行多个任务。在并行处理中,多个任务在不同的处理单元上同时执行,例如多个 CPU 核心或者多个计算机节点。并行处理通常需要硬件支持,例如多核处理器或者分布式系统。

因此,虽然并发和并行都涉及同时处理多个任务,但并发更侧重于任务的组织和调度,而并行更侧重于同时执行任务。在实际应用中,通常会将并发和并行结合使用,以提高系统的性能和效率。

“Concurrent” and “Parallel” both involve handling multiple tasks at the same time, but there are subtle differences between them.

-

Concurrent: Concurrency refers to executing multiple tasks within the same time frame, but not necessarily simultaneously. In concurrency, multiple tasks may be executed in an interleaved manner, switching execution through time slicing or event-driven mechanisms. In single-core processor systems, concurrency is achieved through time sharing. In multi-core systems, different tasks can execute simultaneously on different cores, achieving true parallelism.

-

Parallel: Parallelism refers to executing multiple tasks simultaneously at the same moment. In parallel processing, multiple tasks execute concurrently on separate processing units, such as multiple CPU cores or distributed computing nodes. Parallel processing typically requires hardware support, such as multi-core processors or distributed systems.

Thus, while concurrency and parallelism both involve handling multiple tasks concurrently, concurrency is more about organizing and scheduling tasks, while parallelism is about executing tasks simultaneously. In practical applications, concurrency and parallelism are often combined to improve system performance and efficiency.

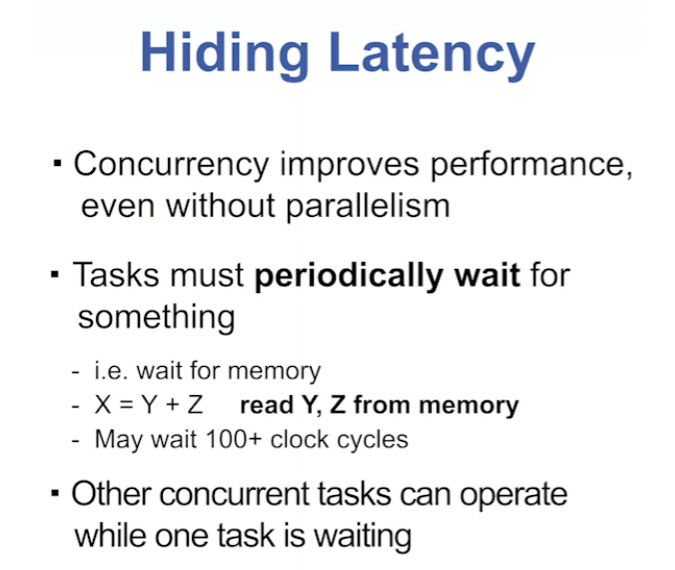

M1.2.2 - Hiding Latency

Hiding Latency of Concurrency

并发的隐藏延迟是指在处理多个任务时,尽可能地减少或隐藏由于任务等待资源或IO操作而导致的延迟。这种延迟通常是通过在执行等待期间执行其他任务来隐藏的,从而最大限度地利用系统资源。通过并发地执行多个任务,并在一个任务等待资源时切换到执行另一个任务,可以降低整体等待时间,提高系统的吞吐量和响应速度。隐藏延迟的目标是使系统在面对资源瓶颈或IO限制时仍能保持高效运行,提高系统的性能和用户体验。

Hiding the latency of concurrency refers to minimizing or masking the delays caused by tasks waiting for resources or I/O operations while processing multiple tasks concurrently. This latency is often concealed by executing other tasks during the waiting period, thus maximizing the utilization of system resources. By concurrently executing multiple tasks and switching to execute another task while one is waiting for resources, overall waiting times can be reduced, leading to improved system throughput and responsiveness. The goal of hiding latency is to ensure efficient operation of the system even in the face of resource bottlenecks or I/O constraints, enhancing system performance and user experience.

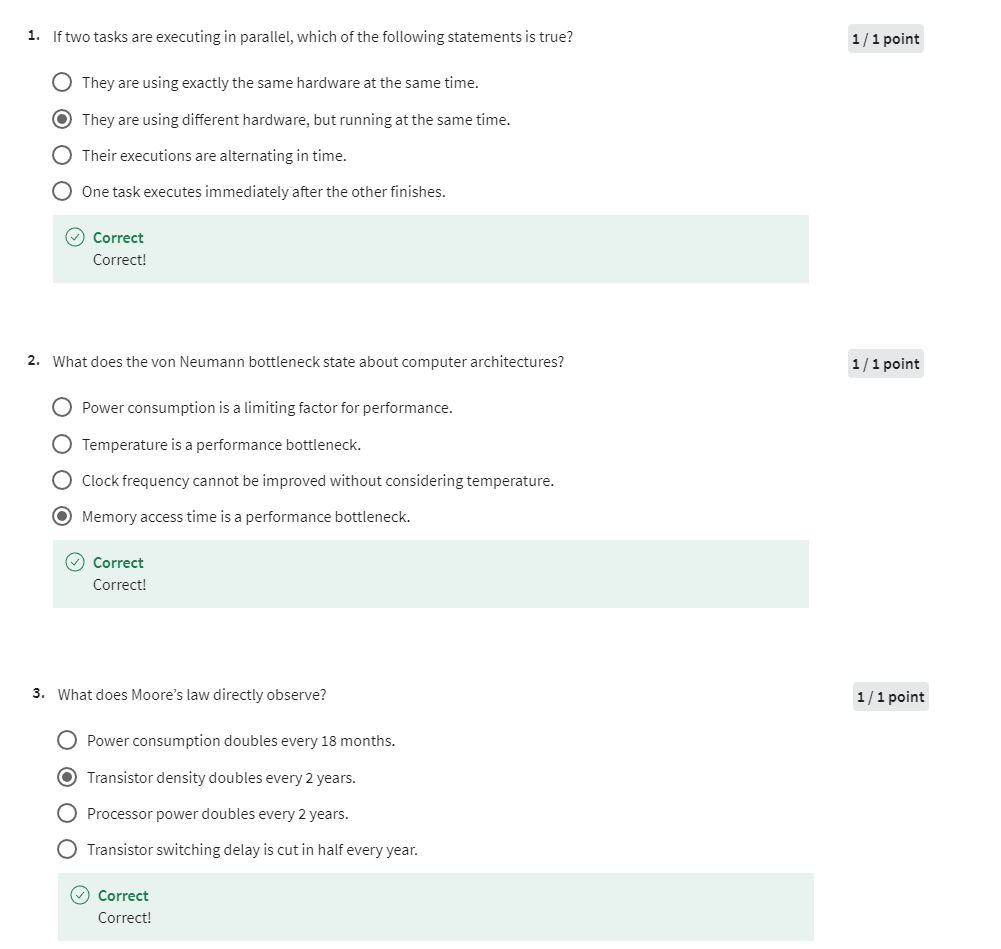

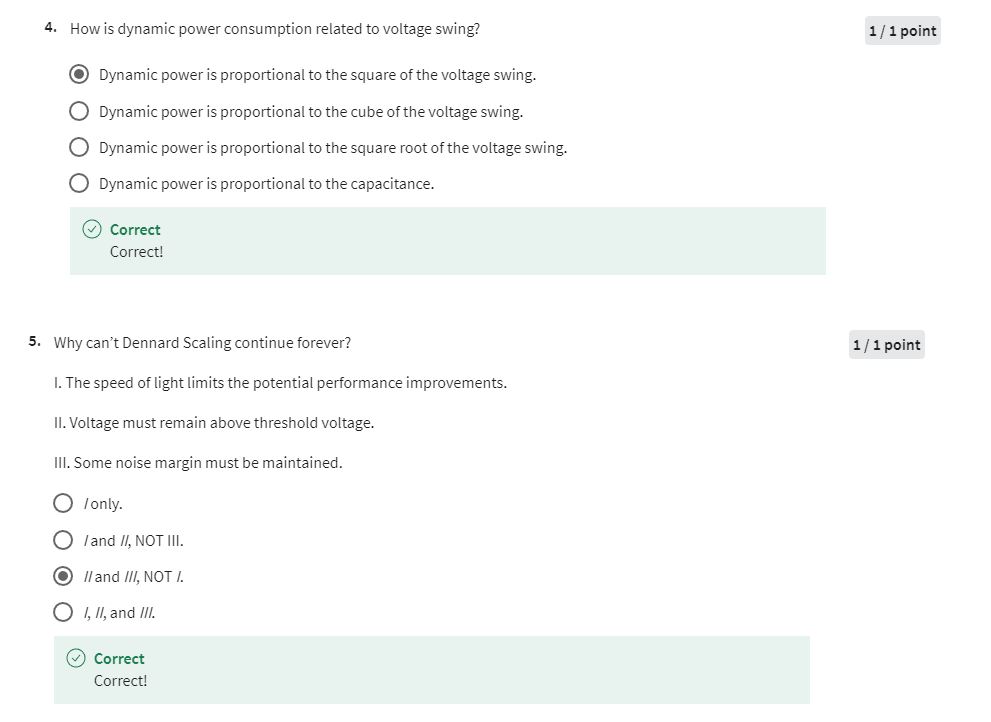

Module 1 Quiz

Peer-graded Assignment: Module 1 Activity

Define Moore’s law and explain why it has now stopped being true. Be sure to describe all of the physical limitations that have prevented Moore’s law from continuing to be true.

ChatGPT回答:

Moore’s Law, proposed by Gordon Moore in 1965, observed that the number of transistors on a microchip doubled approximately every two years, leading to a corresponding increase in computing power. This exponential growth in transistor density enabled continuous advancements in computing technology, driving improvements in performance, efficiency, and functionality of electronic devices.

However, Moore’s Law has gradually slowed down and is now considered to have stopped being true due to several physical limitations:

-

Transistor Size: As transistors have shrunk to atomic scales, they are reaching the limits of miniaturization imposed by quantum mechanics. At such small sizes, the behavior of electrons becomes unpredictable, leading to increased electron leakage and reduced reliability.

-

Heat Dissipation: With increasing transistor density, the heat generated by densely packed components has become a significant challenge. Heat dissipation becomes increasingly difficult, leading to thermal issues that affect performance and reliability.

-

Power Consumption: As transistor counts have continued to rise, so has the power consumption of microprocessors. This increased power consumption not only contributes to heat dissipation challenges but also limits the battery life of mobile devices and increases operational costs in data centers.

-

Economic Constraints: Building advanced semiconductor fabrication facilities (fabs) capable of manufacturing chips with smaller feature sizes requires enormous investments. As the cost of developing new manufacturing processes and equipment escalates, the economic feasibility of maintaining Moore’s Law becomes increasingly challenging.

-

Material Constraints: Traditional silicon-based transistors are reaching their physical limits in terms of performance and energy efficiency. Exploring alternative materials and new transistor architectures requires significant research and development efforts, further slowing down the pace of innovation.

In summary, Moore’s Law has ceased to hold true primarily due to the physical constraints imposed by transistor miniaturization, heat dissipation challenges, power consumption limitations, economic factors, and material constraints. While advancements in semiconductor technology continue, they no longer follow the exponential growth predicted by Moore’s Law.

摩尔定律是由戈登·摩尔于1965年提出的观察,指出微芯片上的晶体管数量大约每两年翻倍,从而导致计算能力的相应增加。晶体管密度的指数增长使得电子设备的性能、效率和功能不断改进。

然而,摩尔定律逐渐减缓,现在被认为不再成立,原因有以下几点物理限制:

-

晶体管尺寸:随着晶体管逐渐缩小到原子尺度,它们接近受到量子力学限制的微型化极限。在如此小的尺寸下,电子的行为变得难以预测,导致电子泄漏增加,可靠性降低。

-

散热:随着部件的紧密堆叠,由密集组件产生的热量成为一个重要挑战。散热变得越来越困难,导致影响性能和可靠性的热问题。

-

功耗:随着晶体管数量的不断增加,微处理器的功耗也在增加。增加的功耗不仅增加了热散发的挑战,还限制了移动设备的电池寿命,并增加了数据中心的运营成本。

-

经济约束:建造能够制造具有更小特征尺寸的芯片的先进半导体制造设施(fabs)需要巨额投资。随着开发新制造工艺和设备的成本不断上升,维持摩尔定律的经济可行性变得越来越具有挑战性。

-

材料约束:传统的基于硅的晶体管在性能和能源效率方面达到了物理极限。探索替代材料和新的晶体管架构需要进行重大的研究和开发工作,进一步放缓了创新的步伐。

总之,摩尔定律之所以不再成立,主要是由于晶体管微型化、散热挑战、功耗限制、经济因素和材料约束所导致的物理限制。尽管半导体技术的进步仍在进行中,但它们不再遵循摩尔定律所预测的指数增长。

MODULE 2: CONCURRENCY BASICS

This module looks at basic concurrency concepts and race conditions in preparation for a discussion of threads coming up in the next module.

Learning Objectives

- Identify the characteristics of processes, concurrency, and threads.

- Explain how and why race conditions can arise.

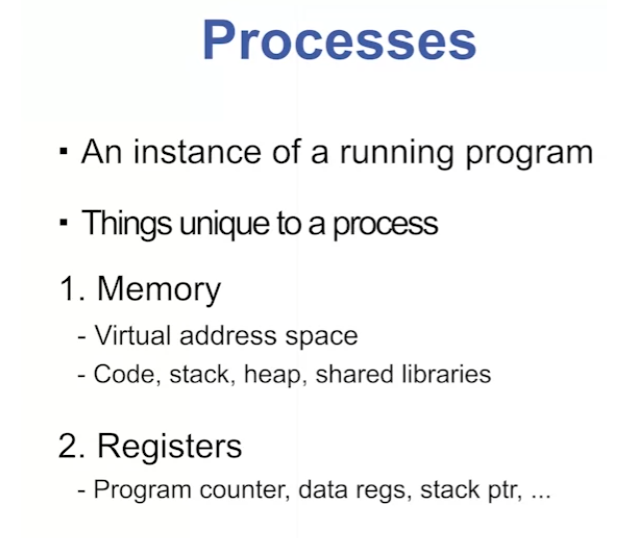

M2.1.1- Processes

进程(Processes)是计算机中正在执行的程序的实例。每个进程都有自己的内存空间、代码和数据,以及其他系统资源的分配,如打开的文件、网络连接等。进程是操作系统进行任务管理和资源分配的基本单位,它们可以同时运行多个程序,并提供了一种隔离和保护机制,使得不同程序之间互不干扰。

以下是进程的一些关键特点:

-

独立性: 每个进程都是相互独立的实体,它们彼此之间不共享内存空间,只能通过进程间通信机制进行数据交换。

-

资源分配: 操作系统负责对进程分配系统资源,包括内存、CPU时间、文件、网络连接等。每个进程都有自己独立的地址空间,使得它们可以独立地访问内存。

-

并发执行: 操作系统可以同时运行多个进程,通过时间片轮转或优先级调度等机制实现进程的并发执行,从而提高系统的吞吐量和响应速度。

-

通信和同步: 进程之间可以通过进程间通信(IPC)机制进行数据交换和同步操作,常用的通信方式包括管道、消息队列、共享内存和信号量等。

-

生命周期: 进程有自己的生命周期,包括创建、运行、挂起、终止等阶段。进程的创建和销毁由操作系统负责管理。

在现代操作系统中,进程是实现并发编程和多任务处理的基础,它们为程序提供了一种有效的隔离和资源管理机制,从而使得计算机系统能够同时运行多个程序,并保持系统的稳定性和可靠性。

Processes are instances of programs running on a computer. Each process has its own memory space, code, and data, as well as allocations of other system resources such as open files, network connections, etc. Processes are the fundamental units for task management and resource allocation by the operating system, enabling multiple programs to run concurrently and providing isolation and protection mechanisms so that different programs do not interfere with each other.

Here are some key features of processes:

-

Independence: Each process is an independent entity, with its own memory space. Processes do not share memory directly but communicate through inter-process communication (IPC) mechanisms.

-

Resource Allocation: The operating system is responsible for allocating system resources to processes, including memory, CPU time, files, network connections, etc. Each process has its own separate address space, allowing independent memory access.

-

Concurrent Execution: Modern operating systems can run multiple processes simultaneously, using mechanisms such as time-sharing or priority-based scheduling to achieve concurrent execution and improve system throughput and responsiveness.

-

Communication and Synchronization: Processes can communicate and synchronize with each other using IPC mechanisms such as pipes, message queues, shared memory, and semaphores.

-

Lifecycle: Processes have their own lifecycle, including creation, execution, suspension, termination, etc. The creation and destruction of processes are managed by the operating system.

In modern operating systems, processes are essential for implementing concurrent programming and multitasking. They provide an effective mechanism for isolating and managing resources, allowing computers to run multiple programs simultaneously while maintaining system stability and reliability.

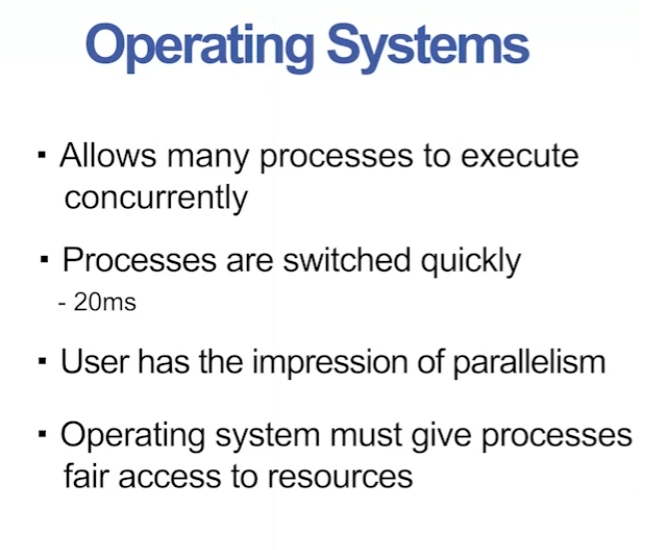

M2.1.2 - Scheduling

Scheduling Processes

进程调度是操作系统中的一个重要功能,它负责决定在多个正在运行的进程中,哪一个进程将被分配到 CPU 执行。进程调度的目标是提高系统的吞吐量、减少响应时间、提高资源利用率和公平性。

进程调度通常由操作系统的调度器(scheduler)负责实现。调度器根据一定的策略和算法,从就绪队列中选择一个进程,并将其分配给 CPU 执行。常见的调度算法包括先来先服务(FCFS)、最短作业优先(SJF)、轮转调度(Round Robin)、多级反馈队列调度(MLFQ)等。

在进程调度中,有几个重要的概念:

-

就绪队列(Ready Queue): 就绪队列是存放等待被 CPU 执行的进程的队列。调度器从就绪队列中选择一个进程,并将其分配给 CPU 执行。

-

调度策略(Scheduling Policy): 调度策略是调度器根据的一组规则或算法,用于决定选择哪个进程执行。常见的调度策略包括先来先服务、最短作业优先、轮转调度等。

-

调度开销(Scheduling Overhead): 调度开销是指在进行进程切换时所需要的额外开销,例如保存和恢复进程的上下文、更新进程状态等。

-

上下文切换(Context Switch): 上下文切换是指从一个进程切换到另一个进程时,操作系统保存当前进程的上下文,并恢复下一个进程的上下文的过程。上下文切换是进程调度的一个重要环节,它会消耗一定的系统资源。

进程调度在操作系统中扮演着重要的角色,它影响着系统的性能、响应速度和资源利用率。通过合理的调度算法和策略,操作系统可以实现高效的进程管理,提高系统的整体性能。

Process scheduling is a critical function in operating systems, responsible for determining which process among multiple running processes will be allocated to the CPU for execution. The goal of process scheduling is to improve system throughput, reduce response time, enhance resource utilization, and ensure fairness.

Process scheduling is typically implemented by the scheduler of the operating system. The scheduler selects a process from the ready queue based on certain policies and algorithms and assigns it to the CPU for execution. Common scheduling algorithms include First Come First Served (FCFS), Shortest Job First (SJF), Round Robin, Multilevel Feedback Queue (MLFQ), among others.

In process scheduling, several important concepts include:

-

Ready Queue: The ready queue is a queue that holds processes waiting to be executed by the CPU. The scheduler selects a process from the ready queue and assigns it to the CPU for execution.

-

Scheduling Policy: The scheduling policy consists of rules or algorithms used by the scheduler to decide which process to execute. Common scheduling policies include FCFS, SJF, Round Robin, etc.

-

Scheduling Overhead: Scheduling overhead refers to the additional overhead required during process switching, such as saving and restoring process context, updating process states, etc.

-

Context Switch: A context switch occurs when the operating system switches from executing one process to another. It involves saving the current process’s context and restoring the context of the next process. Context switching is a crucial aspect of process scheduling and consumes system resources.

Process scheduling plays a vital role in operating systems, affecting system performance, responsiveness, and resource utilization. With appropriate scheduling algorithms and policies, the operating system can achieve efficient process management, thereby enhancing overall system performance.

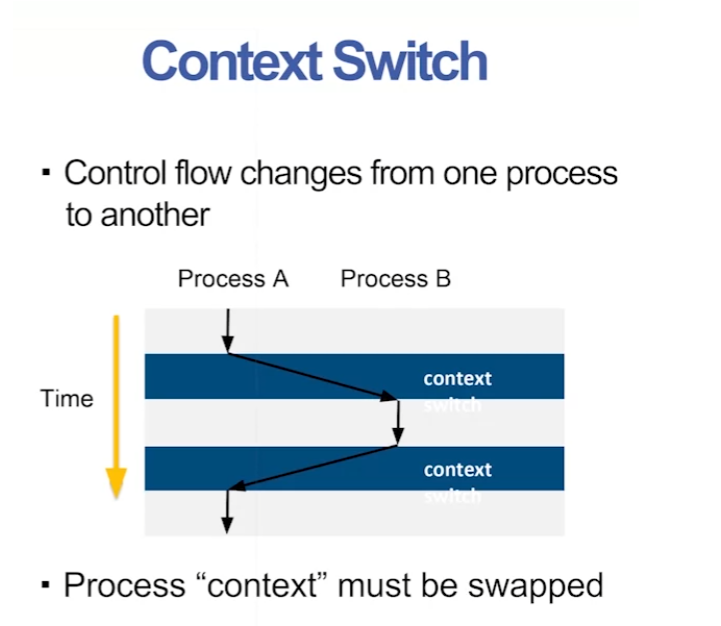

上下文切换是现代操作系统中的一种基本操作,指的是 CPU 从执行一个进程或线程切换到另一个的过程。这种切换涉及保存当前正在执行的进程或线程的状态,以便稍后可以恢复执行,然后加载要执行的新进程或线程的状态。

一个进程或线程的上下文通常包括 CPU 寄存器的值、程序计数器、堆栈指针和其他相关信息,这些信息是恢复执行所需的。上下文切换允许操作系统在多个进程或线程之间有效地共享 CPU,提供并发执行的假象。

上下文切换由各种事件触发,例如进程主动放弃 CPU(例如通过系统调用如 sleep 或 yield),或发生中断(例如硬件中断或定时器中断)。当发生上下文切换时,操作系统会保存当前进程或线程的上下文,并恢复下一个要执行的进程或线程的上下文。

上下文切换有一定的开销,因为它涉及保存和恢复 CPU 和其他相关资源的状态。因此,减少上下文切换的频率对于提高系统性能至关重要。操作系统采用各种技术,如优化调度算法、减少中断延迟和使用高效的数据结构,以减少上下文切换的开销。

Context switching is a fundamental operation in modern operating systems where the CPU switches from executing one process or thread to another. This switch involves saving the state of the currently executing process or thread so that it can be resumed later, and then loading the state of the new process or thread to be executed next.

The context of a process or thread typically includes the values of CPU registers, program counter, stack pointer, and other relevant information needed to resume execution. Context switching allows the operating system to efficiently share the CPU among multiple processes or threads, providing the illusion of concurrent execution.

Context switching is triggered by various events, such as a process voluntarily relinquishing the CPU (e.g., through system calls like sleep or yield) or an interrupt occurring (e.g., a hardware interrupt or a timer interrupt). When a context switch occurs, the operating system saves the context of the current process or thread and restores the context of the next process or thread to be executed.

Context switching has a non-negligible overhead, as it involves saving and restoring the state of the CPU and other relevant resources. Therefore, minimizing the frequency of context switches is essential for improving system performance. Various techniques, such as optimizing scheduling algorithms, reducing interrupt latency, and using efficient data structures, are employed to mitigate the overhead associated with context switching in operating systems.

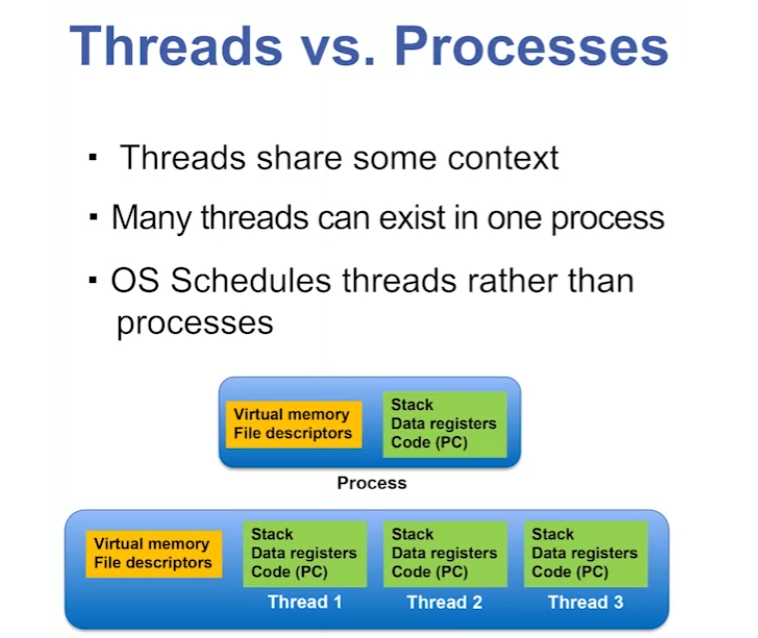

M2.1.3 - Threads and Goroutines

Threads VS. Processes

线程和进程是操作系统中用于实现并发执行的重要概念,它们之间有几个关键区别:

-

调度单位:

- 进程是程序的执行实例,每个进程都有自己的地址空间、代码、数据和资源。进程是操作系统中的基本调度单位,由操作系统负责管理和调度。

- 线程是进程中的执行单元,共享进程的地址空间和其他资源。一个进程可以包含多个线程,这些线程可以并发执行,共享进程的资源。

-

资源开销:

- 进程之间相互独立,每个进程都有自己的内存空间和系统资源,因此进程之间的切换开销较大。

- 线程共享同一个进程的内存空间和资源,因此线程之间的切换开销相对较小。

-

通信和同步:

- 进程之间的通信和同步较为复杂,通常需要使用进程间通信(IPC)机制,如管道、消息队列、共享内存等。

- 线程之间可以直接访问共享的内存空间,因此通信和同步较为简单,可以使用共享内存、信号量、互斥锁等机制。

-

健壮性:

- 由于进程之间相互独立,一个进程的崩溃不会影响其他进程,因此进程具有较强的健壮性。

- 线程之间共享同一进程的资源,一个线程的错误可能会影响整个进程的稳定性。

总的来说,进程和线程都是实现并发执行的重要手段,它们各有优缺点,选择使用哪种方式取决于具体的应用场景和需求。

Threads and processes are important concepts in operating systems for achieving concurrent execution. Here are several key differences between them:

-

Scheduling Unit:

- A process is an instance of a program, with its own address space, code, data, and resources. Processes are the basic scheduling units managed and scheduled by the operating system.

- A thread is an execution unit within a process, sharing the process’s address space and other resources. A process can contain multiple threads, which can execute concurrently and share the process’s resources.

-

Resource Overhead:

- Processes are independent of each other, with each process having its own memory space and system resources. As a result, context switching between processes incurs relatively high overhead.

- Threads share the same memory space and resources within a process, leading to lower overhead when switching between threads.

-

Communication and Synchronization:

- Inter-process communication and synchronization are more complex, often requiring inter-process communication (IPC) mechanisms such as pipes, message queues, shared memory, etc.

- Threads can directly access shared memory space, making communication and synchronization simpler through mechanisms like shared memory, semaphores, mutexes, etc.

-

Robustness:

- Processes are isolated from each other, so a crash in one process typically does not affect others, providing strong isolation and robustness.

- Threads share the same process resources, so errors in one thread may impact the stability of the entire process.

In summary, both threads and processes are essential for concurrent execution, each with its own advantages and disadvantages. The choice between them depends on specific application requirements and scenarios.

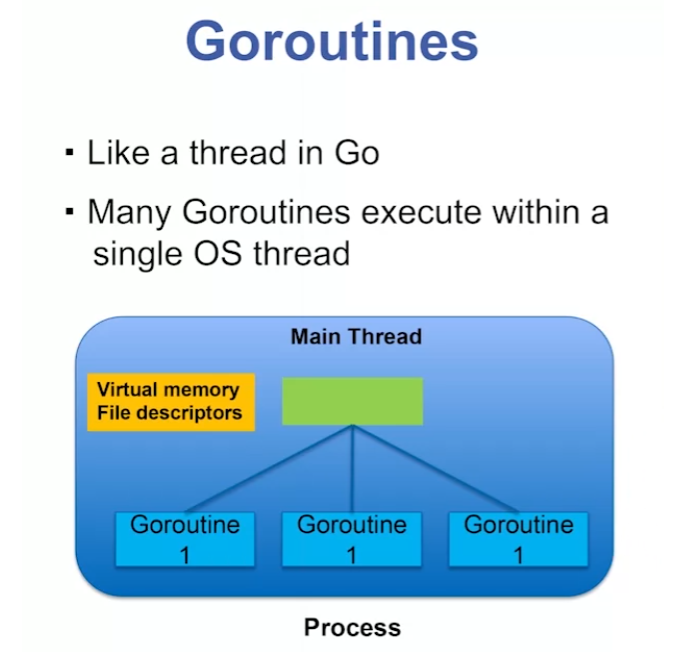

Gorourtines

Goroutines are lightweight threads managed by the Go runtime environment. They serve as concurrent execution units in Go programs and offer several advantages over traditional threads:

-

Lightweight: Goroutines are lightweight compared to traditional threads, allowing programs to create thousands or even millions of goroutines without consuming excessive system resources.

-

Concurrent Execution: Goroutines enable functions to execute concurrently, with multiple goroutines running simultaneously without the need for explicit thread management or locking.

-

Simple Creation: Goroutines are created simply by using the

gokeyword followed by a function call, for example,go func() { /* do something */ }(). This makes goroutine creation straightforward and concise, without the overhead of thread creation and management. -

Automatic Scheduling: The Go runtime environment automatically schedules the execution of goroutines, ensuring they run efficiently across different operating system threads. This enables optimal utilization of multicore processors.

-

Communication Mechanism: Goroutines communicate and synchronize with each other using channels. Channels are a key concurrency mechanism in Go, allowing safe data transfer and synchronization between goroutines.

-

Shared Memory: Goroutines share the same address space, allowing them to easily share memory state. However, it’s important to use channels to avoid race conditions and data races when accessing shared memory.

In summary, goroutines are concurrent execution units in Go programs that offer lightweight, simple creation, automatic scheduling, and communication mechanisms. They make concurrent programming in Go more straightforward and efficient.

协程(Goroutine)是 Go 语言中的一种轻量级线程,由 Go 运行时环境(Runtime)管理。与传统的线程相比,协程具有更低的内存占用和更高的创建和销毁速度,因此可以轻松创建大量的并发执行单元。以下是关于 Go 协程的一些重要信息:

-

轻量级: 协程是轻量级的执行单元,相比传统的线程更加轻量,一个程序可以创建成千上万个协程而不会消耗太多的系统资源。

-

并发执行: Go 协程允许函数以并发的方式执行,多个协程可以同时运行而不需要显式的管理线程和锁。

-

简单创建: 使用关键字

go可以简单地创建一个新的协程,例如go func() { /* do something */ }()。这种方式非常简洁,不需要像传统线程一样创建和管理。 -

自动调度: Go 运行时环境会自动调度协程的执行,确保它们在不同的操作系统线程上以适当的方式运行。这样可以充分利用多核处理器的性能优势。

-

通信机制: 协程之间可以通过通道(Channel)进行通信和同步,这是 Go 语言中实现并发的重要机制之一。通道可以安全地在协程之间传递数据,并且可以轻松实现同步操作。

-

内存共享: 协程之间共享相同的地址空间,因此可以方便地共享内存状态。但需要注意的是,使用通道来避免竞争条件和数据竞态。

总的来说,协程是 Go 语言中的并发执行单元,具有轻量级、简单创建、自动调度和通信机制等特点,使得并发编程变得更加简单和高效。

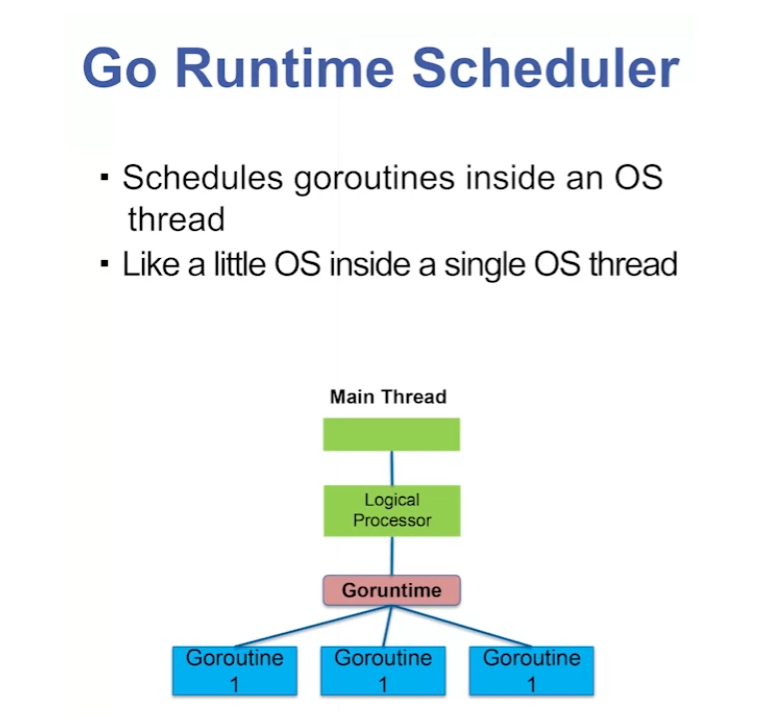

Go Runtime Scheduler

Go Runtime Scheduler(GRS)是 Go 语言运行时环境的一部分,负责管理和调度 goroutines 的执行。下面是关于 GRS 和 goroutines 的一些重要信息:

-

Go Runtime Scheduler(GRS):

- GRS 是 Go 语言运行时环境的组成部分之一,负责管理和调度 goroutines 的执行。

- GRS 使用的调度算法是 M:N 调度器,其中 M 代表操作系统线程(OS Thread),N 代表 goroutines。

- GRS 将一定数量的 goroutines 调度到少量的操作系统线程上执行,这样可以有效地利用系统资源。

-

Goroutines:

- Goroutines 是 Go 语言中的轻量级执行单位,由 Go 运行时环境管理。

- Goroutines 可以看作是轻量级的线程,但相比传统的线程更加高效和简单。

- 通过关键字

go可以创建一个新的 goroutine,例如go func() { /* do something */ }()。 - Goroutines 可以并发执行,而且可以方便地进行通信和同步,通过通道(channel)等机制实现。

-

调度器特性:

- GRS 使用的调度算法是抢占式的,它可以在任何时刻抢占当前正在执行的 goroutine,并将 CPU 时间分配给其他等待执行的 goroutines。

- 调度器会根据系统的负载和 goroutines 的状态动态调整执行策略,以实现最佳的性能和资源利用率。

-

调度器配置:

- Go 语言提供了一些环境变量来配置调度器的行为,例如 GOMAXPROCS 用于设置最大并发执行的操作系统线程数。

- 调度器还可以通过 runtime 包提供的函数来手动配置,例如 runtime.Gosched() 可以让出当前 goroutine 的执行权限。

总的来说,Go Runtime Scheduler 负责管理和调度 goroutines 的执行,它使用 M:N 调度器来有效地利用系统资源,并提供了一些配置选项和函数来调整调度器的行为。 Goroutines 是 Go 语言中的轻量级执行单位,可以方便地进行并发编程。

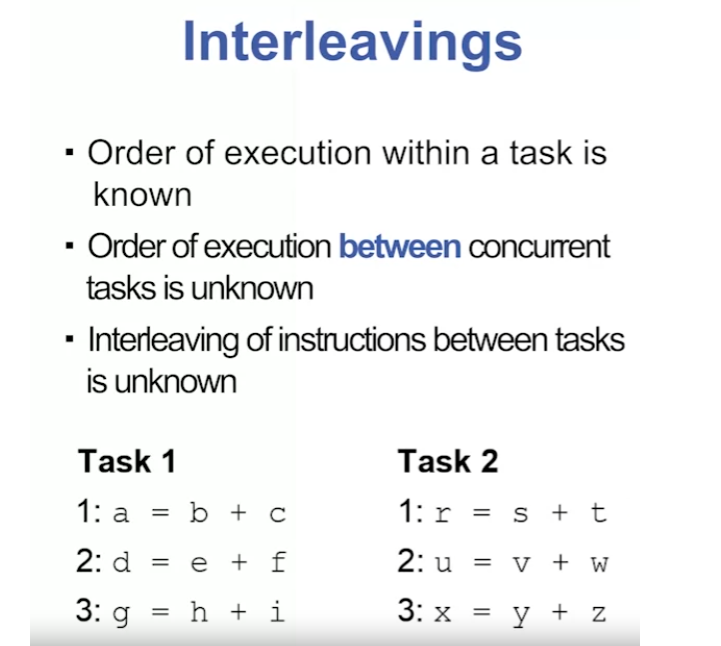

M2.2.1 - Interleavings

InterLeavings

Interleavings是指在并发程序中,不同的线程或进程之间交替执行的顺序。它反映了在多任务系统中各个任务之间切换的方式。在并发程序中,可能存在多种不同的交错方式,每种交错方式都会产生不同的执行结果。Interleavings的理解对于并发程序的正确性分析和调试非常重要,因为不同的Interleavings可能会导致不同的行为和结果。

M2.2.2 - Race Conditions

Race Conditions

Race Conditions 是指在多线程或并发编程中,由于竞争条件造成的程序行为不确定性。当两个或多个线程同时访问共享资源,并且至少有一个线程试图修改这些资源时,如果操作的顺序导致结果取决于不同线程之间的执行顺序,就会出现竞争条件。

竞争条件可能导致程序出现不一致的行为,例如数据损坏、逻辑错误、死锁等问题。解决竞争条件通常需要采取同步机制,如互斥锁、条件变量等,以确保在访问共享资源时只有一个线程能够执行修改操作,从而避免出现数据竞争问题。

Race Conditions refer to the uncertainty in program behavior caused by concurrent access to shared resources in multi-threaded or concurrent programming. When two or more threads access shared resources simultaneously, and at least one thread attempts to modify these resources, if the order of operations leads to results that depend on the execution order between different threads, a race condition occurs.

Race conditions can lead to inconsistent behavior in a program, such as data corruption, logical errors, deadlocks, and other issues. Resolving race conditions often requires the use of synchronization mechanisms such as mutex locks, condition variables, etc., to ensure that only one thread can execute modification operations when accessing shared resources, thereby avoiding data race issues.

Module 2 Quiz

Peer-graded Assignment: Module 2 Activity

Write two goroutines which have a race condition when executed concurrently. Explain what the race condition is and how it can occur.

Submission: Upload your source code for the program along with your written explanation of race conditions.

Here’s an example of two goroutines with a race condition:

package main

import (

"fmt"

"sync"

)

var counter int

var wg sync.WaitGroup

func main() {

wg.Add(2)

go incrementCounter()

go incrementCounter()

wg.Wait()

fmt.Println("Final counter value:", counter)

}

func incrementCounter() {

defer wg.Done()

for i := 0; i < 1000; i++ {

// Race condition occurs here

counter++

}

}

Explanation:

In this program, two goroutines are launched concurrently to increment the counter variable by 1000 each. The race condition occurs because multiple goroutines are accessing and modifying the counter variable concurrently without any synchronization mechanism in place. Due to the non-atomic nature of the increment operation (counter++), it’s possible for one goroutine to read the counter value while another goroutine is in the process of updating it, leading to unpredictable behavior and incorrect results.

To fix this race condition, we can use synchronization primitives like mutexes or atomic operations to ensure that only one goroutine can access the counter variable at a time.

Here’s the improved version of the code using a mutex to prevent the race condition:

package main

import (

"fmt"

"sync"

)

var counter int

var wg sync.WaitGroup

var mu sync.Mutex

func main() {

wg.Add(2)

go incrementCounter()

go incrementCounter()

wg.Wait()

fmt.Println("Final counter value:", counter)

}

func incrementCounter() {

defer wg.Done()

for i := 0; i < 1000; i++ {

mu.Lock()

counter++

mu.Unlock()

}

}

Explanation:

In the improved version, a mutex (mu) is used to synchronize access to the counter variable. The Lock method is called before accessing the counter variable to prevent other goroutines from accessing it concurrently. After the increment operation is completed, the Unlock method is called to release the mutex, allowing other goroutines to acquire it. This ensures that only one goroutine can access the counter variable at a time, preventing the race condition.

MODULE 3: THREADS IN GO

In this module, you’ll work with threaded goroutines and explore the benefits of synchronization. The week’s assignment has you using a threaded approach to create a program that sorts integers via four separate sub-arrays, then merging the arrays into a single array.

Learning Objectives

- Identify features and operational characteristics of goroutines.

- Identify reasons for introducing synchronization in a goroutine.

- Write a goroutine that uses threads to sort integers via four sub-arrays that are then merged into a single array.

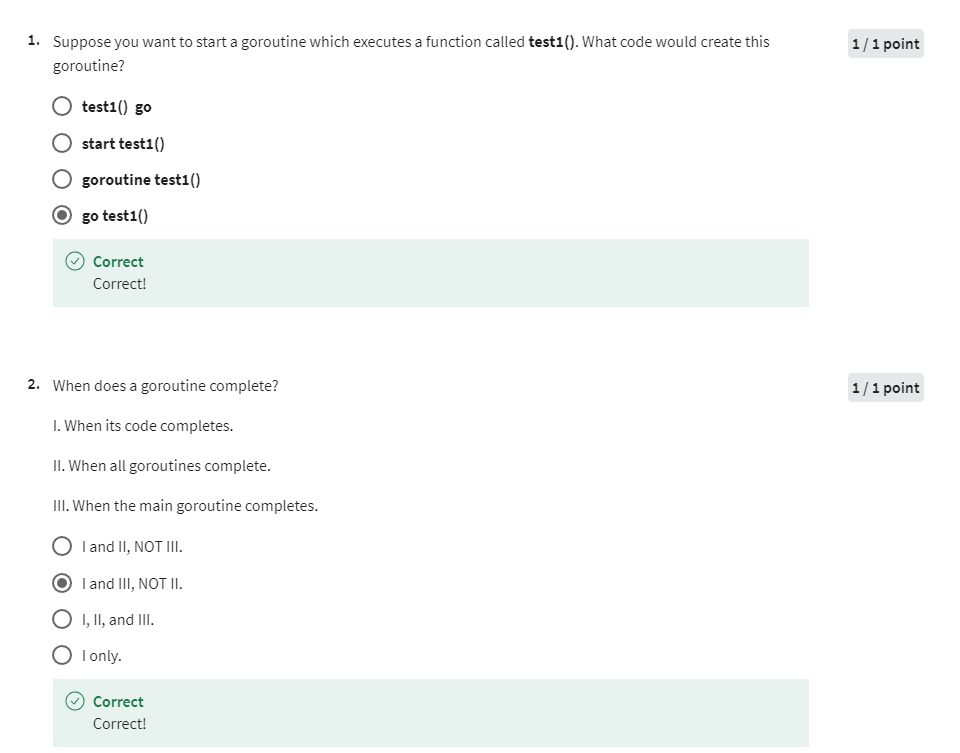

M3.1.1 - Goroutines

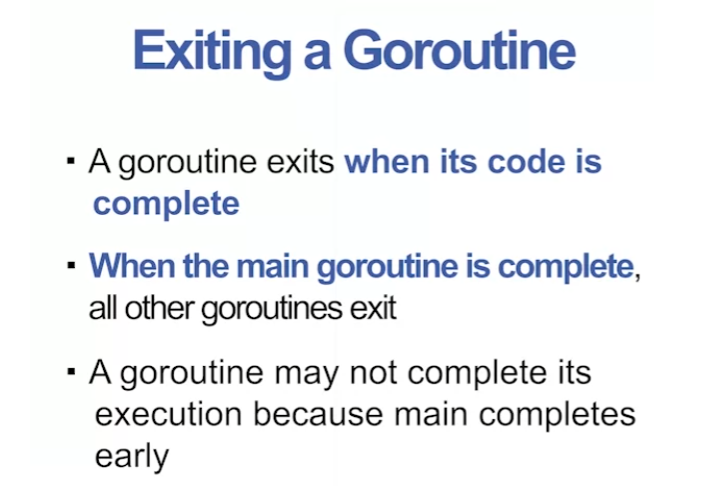

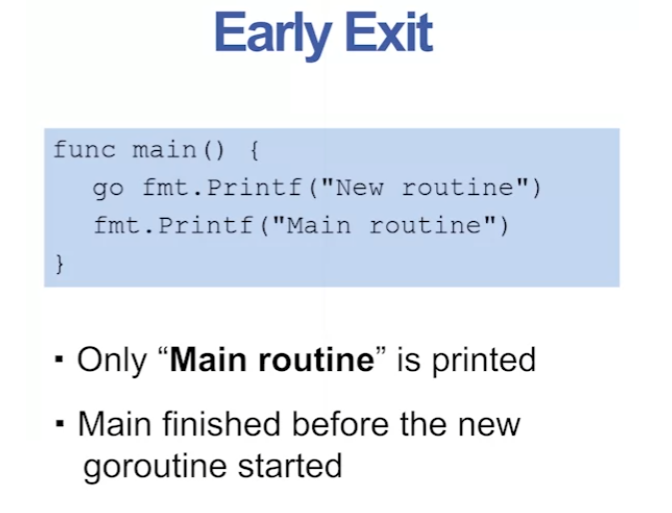

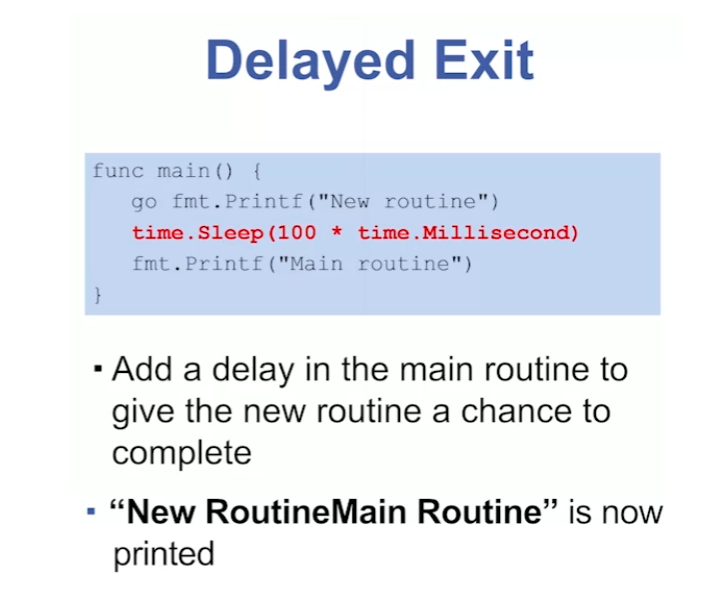

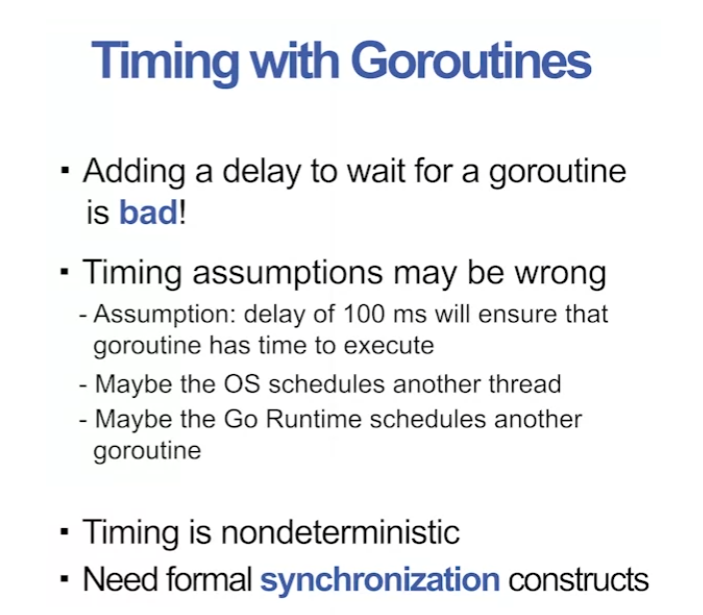

M3.1.2 - Exiting Goroutines

在Go语言中,如果你在一个goroutine中希望提前退出,你可以使用return语句来结束该goroutine的执行。当goroutine执行到return语句时,它会立即停止执行并退出。这样做可以有效地提前结束goroutine的执行,而不必等待所有代码都执行完毕。

以下是一个示例代码,演示了如何在goroutine中使用return语句进行提前退出:

package main

import (

"fmt"

"time"

)

func myGoroutine() {

for i := 0; i < 5; i++ {

fmt.Println("Doing some work...")

time.Sleep(time.Second)

if i == 2 {

fmt.Println("Encountered a condition, exiting early")

return // 提前退出goroutine

}

}

}

func main() {

fmt.Println("Main function started")

go myGoroutine() // 启动goroutine

time.Sleep(3 * time.Second) // 等待一段时间,确保goroutine有时间执行

fmt.Println("Main function ended")

}

在上面的示例中,myGoroutine函数会在执行到i == 2的时候提前退出。在这种情况下,return语句会立即结束myGoroutine的执行,而不会等待所有循环结束。

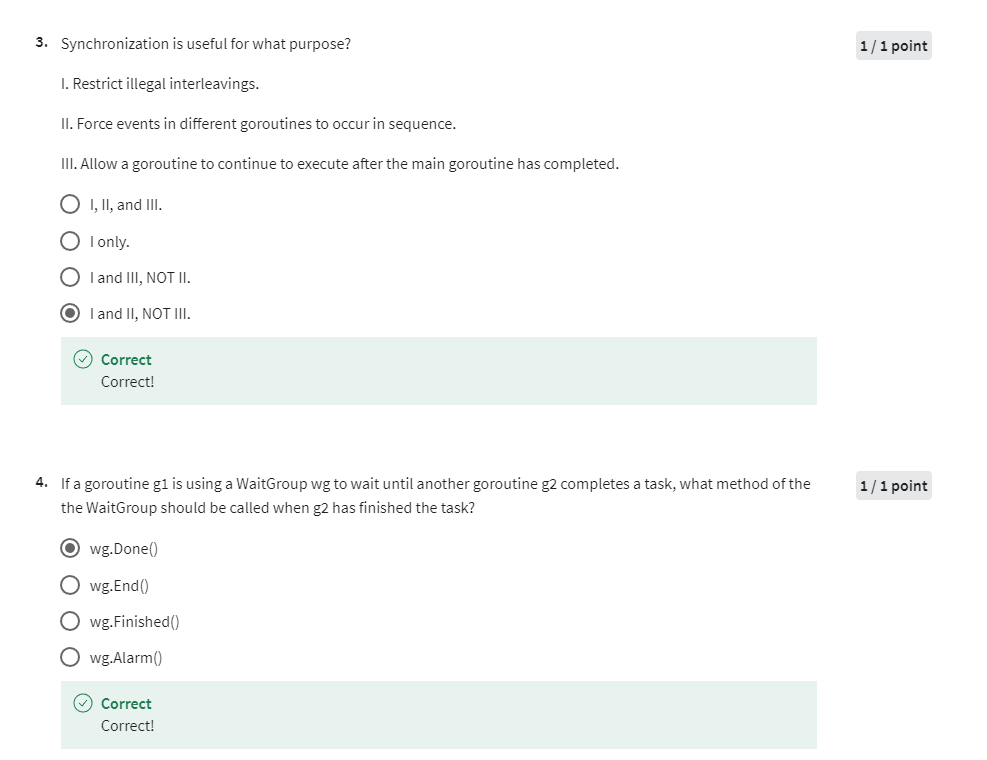

M3.2.1 - Basic Synchronization

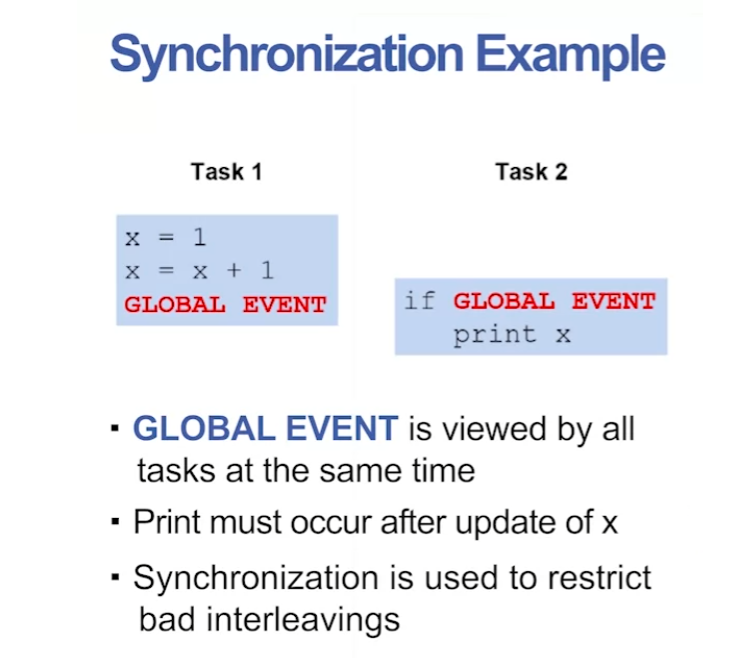

Synchronization

在Go语言中,同步(Synchronization)是一种管理并发操作的技术,用于确保在多个goroutine之间正确共享资源和协调操作的顺序。同步机制的目的是避免竞态条件(Race Condition)和数据竞争(Data Race),以及确保并发程序的正确性和可靠性。

以下是Go语言中常用的同步机制:

-

互斥锁(Mutex):互斥锁是最常用的同步机制之一,用于保护共享资源不被多个goroutine同时访问。通过在关键代码段前后加锁和解锁操作,可以确保在任何时候只有一个goroutine能够访问临界区。

-

读写锁(RWMutex):读写锁是一种特殊的互斥锁,允许多个goroutine同时读取共享资源,但只允许一个goroutine进行写操作。这种机制可以提高读取操作的并发性能。

-

条件变量(Cond):条件变量是一种用于goroutine之间的通信的机制,它允许goroutine等待特定的条件发生,然后再继续执行。条件变量通常与互斥锁配合使用,以确保在等待条件时,共享资源处于一致的状态。

-

通道(Channel):通道是Go语言中一种用于在goroutine之间传递数据和同步执行的机制。通道提供了一种安全、简单和高效的方式来实现goroutine之间的通信和同步。

-

原子操作(Atomic Operations):原子操作是一种特殊的操作,可以保证在并发执行的情况下,对共享变量的读取和写入是原子性的,不会被打断。原子操作通常用于对简单数据类型进行并发安全的增加、减少、赋值等操作。

通过合理地使用这些同步机制,可以有效地管理并发程序中的竞态条件和数据竞争,保障程序的正确性和稳定性。

Synchronization in Go refers to the coordination of concurrent goroutines to ensure proper execution and data integrity in a concurrent program. It involves techniques to control access to shared resources, such as variables or data structures, by multiple goroutines to prevent race conditions and ensure consistency.

There are several mechanisms provided by Go for synchronization:

-

Mutexes (Mutual Exclusion): Mutexes are used to lock and unlock critical sections of code to ensure that only one goroutine can access a shared resource at a time. This prevents race conditions where multiple goroutines try to modify the same data simultaneously.

-

Channels: Channels are communication primitives that allow goroutines to send and receive data synchronously. They provide a safe way to exchange data between goroutines without the need for explicit locking. Channels enforce synchronization by ensuring that data is sent and received in a coordinated manner.

-

WaitGroups: WaitGroups are used to wait for a collection of goroutines to finish their execution before proceeding. They provide a way to synchronize the execution of multiple goroutines by blocking until all goroutines have completed their tasks.

-

Atomic Operations: Go provides atomic operations for low-level synchronization tasks, such as atomic memory accesses and updates. Atomic operations ensure that certain operations on shared variables are performed atomically, without the possibility of interruption by other goroutines.

By using these synchronization mechanisms effectively, developers can write concurrent Go programs that are safe, efficient, and free from race conditions. Proper synchronization ensures that goroutines cooperate and coordinate their activities to achieve the desired behavior of the program.

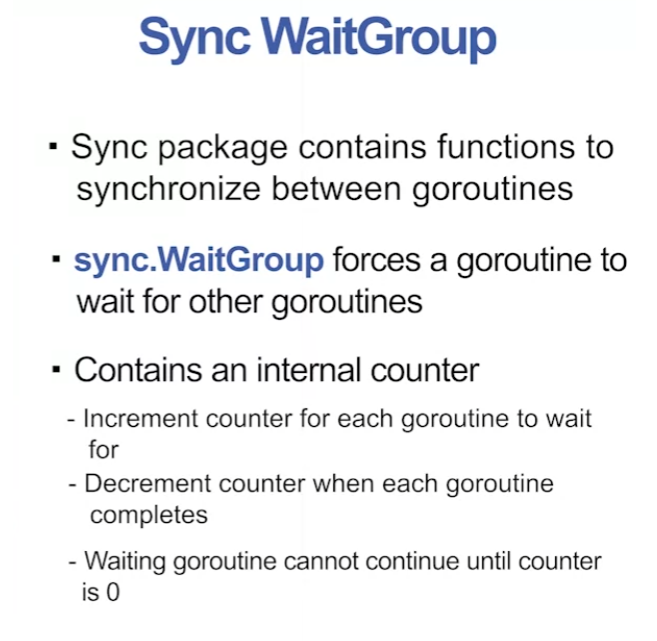

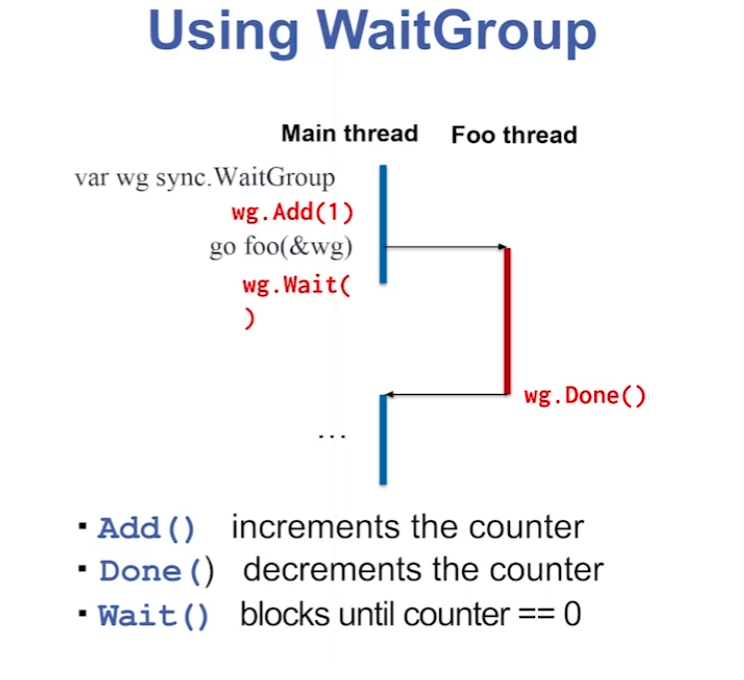

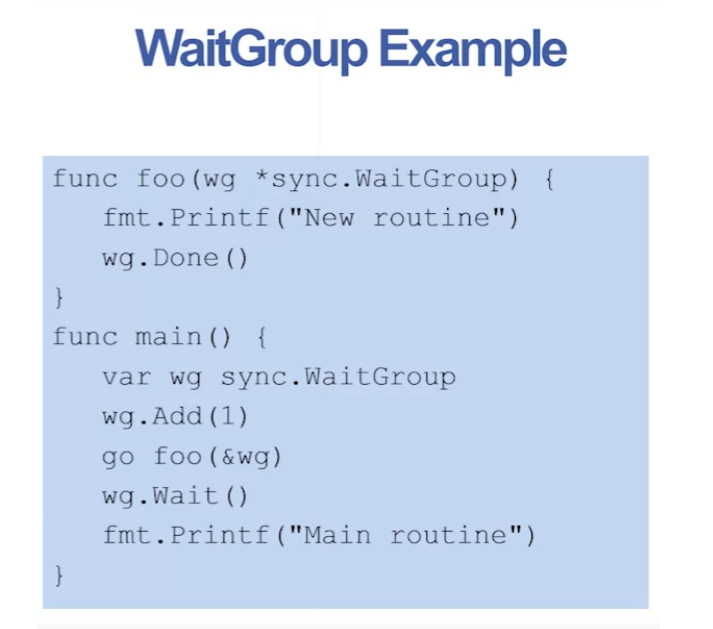

M3.2.2 - Wait Groups

Sync WaitGroup

The sync.WaitGroup type in Go is a synchronization primitive that allows you to wait for a collection of goroutines to finish executing before proceeding with further code. It’s particularly useful when you have a variable number of goroutines to wait for, as it can dynamically adapt to the number of goroutines you’re dealing with.

Here’s how sync.WaitGroup typically works:

-

You create a new

sync.WaitGroupinstance using thesync.WaitGroup{}expression. -

Before starting a new goroutine, you call the

Addmethod on theWaitGroupand increment the counter by the number of goroutines you’re about to start. -

Inside each goroutine, you perform the task and then call

Doneon theWaitGroupto signal that the goroutine has finished its work. -

Finally, you call the

Waitmethod on theWaitGroupfrom the main goroutine to block until all goroutines have completed their work. Once the counter reaches zero (indicating that all goroutines have calledDone), theWaitmethod returns, and the program can continue execution.

Here’s a simple example illustrating the usage of sync.WaitGroup:

package main

import (

"fmt"

"sync"

)

func worker(id int, wg *sync.WaitGroup) {

defer wg.Done() // Decrements the counter when the function returns

fmt.Printf("Worker %d starting\n", id)

// Simulate some work

for i := 0; i < 3; i++ {

fmt.Printf("Worker %d: Working...\n", id)

}

fmt.Printf("Worker %d done\n", id)

}

func main() {

var wg sync.WaitGroup

// Launching three worker goroutines

for i := 1; i <= 3; i++ {

wg.Add(1) // Increment the counter for each goroutine

go worker(i, &wg)

}

// Wait for all worker goroutines to finish

wg.Wait()

fmt.Println("All workers have completed their work")

}

In this example, three worker goroutines are started, each performing some simulated work. The main goroutine waits for all worker goroutines to finish using wg.Wait(). Once all workers are done, the main goroutine resumes execution.

在worker函数中,defer wg.Done()语句的作用是确保在函数返回时调用sync.WaitGroup的Done方法,无论函数如何返回都会执行。

具体来说,defer的作用如下:

-

当调用

wg.Done()时,它会将sync.WaitGroup的计数器减少1。 -

使用

defer语句,可以确保wg.Done()在worker函数结束之前被调用,即在函数返回之前。 -

即使函数遇到

panic或提前return,defer语句仍会执行,确保WaitGroup的计数器始终得到正确的减少。

总之,defer wg.Done()用于确保在worker函数退出时正确减少WaitGroup的计数器,从而实现正确的同步,并防止goroutine并发执行时出现潜在的死锁问题。

如果不使用defer语句来调用wg.Done(),则需要确保在函数的每个可能的返回路径上都调用wg.Done(),以确保sync.WaitGroup的计数器能够正确减少。

如果不使用defer,需要在函数的每个return语句之前手动调用wg.Done(),这可能会导致遗漏调用,从而导致WaitGroup的计数器无法正确减少,可能会引发死锁或其他同步问题。因此,使用defer语句能够确保在函数退出时正确地调用wg.Done(),是一种更简洁、更安全的做法。

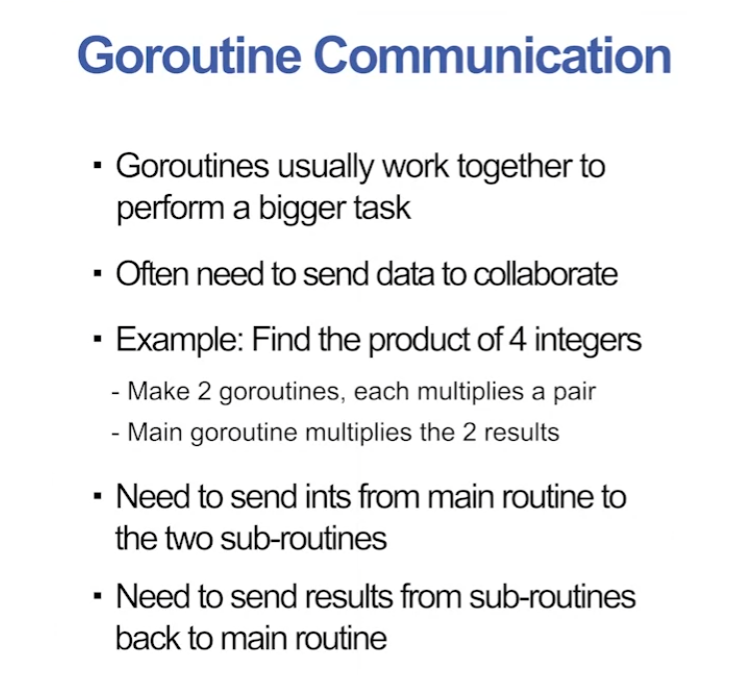

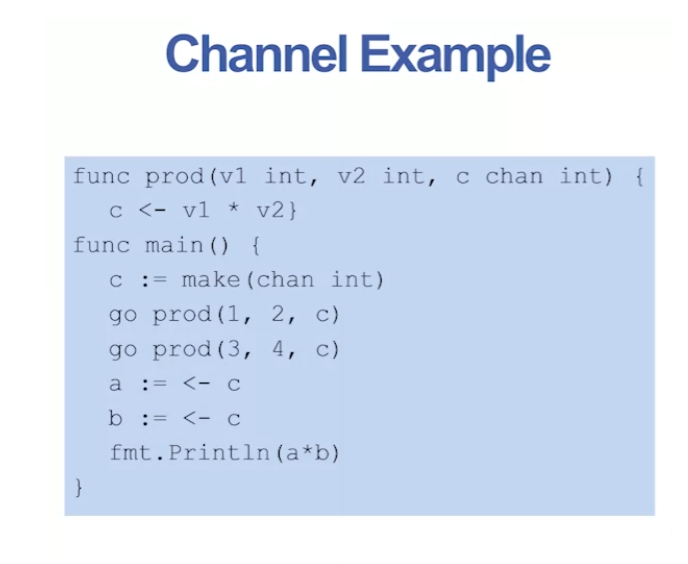

M3.3.1 - Communication

Goroutine Communication

Goroutine communication in Go refers to the mechanism through which goroutines (concurrently executing functions) can interact and share data. There are several ways goroutines can communicate with each other:

-

Channels: Channels are the primary means of communication between goroutines in Go. They provide a way for one goroutine to send data to another goroutine. Channels can be created with the

makefunction, and data can be sent and received using the<-operator. Channels are both safe and efficient for communication between goroutines. -

Buffered Channels: Buffered channels are similar to regular channels, but they have a buffer that allows them to hold a certain number of elements before blocking. This can be useful when the sender and receiver are not perfectly synchronized.

-

Channel Direction: Channels can be defined to be either send-only or receive-only. This allows you to restrict access to channels to prevent accidental misuse.

-

Select Statement: The

selectstatement in Go allows you to wait on multiple channels simultaneously and execute the corresponding case when one of the channels is ready to send or receive data. -

Close Channel: Channels can be closed using the

closefunction. This indicates to the receiver that no more data will be sent on the channel. Receivers can use the second return value from a receive operation to determine if the channel has been closed.

Overall, goroutine communication in Go is designed to be simple, safe, and efficient, allowing for the creation of highly concurrent and scalable programs.

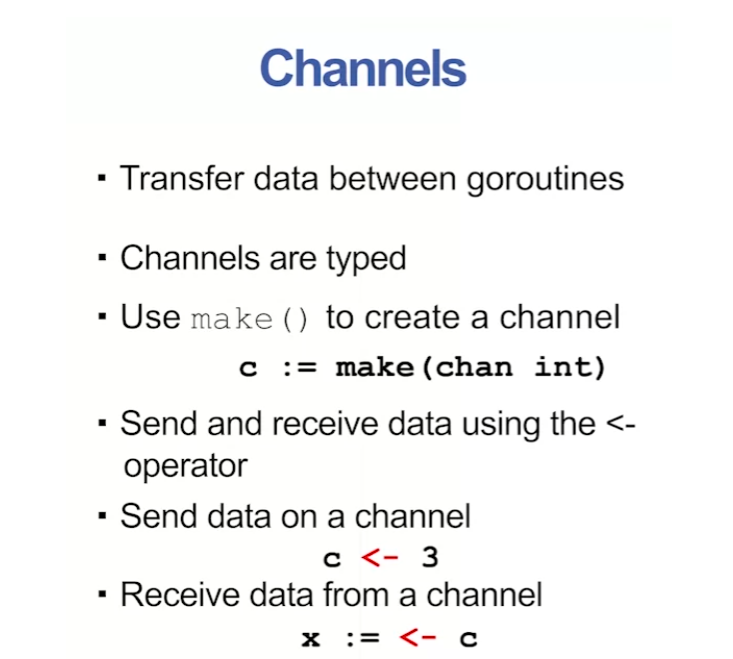

Channels

在Go中,通道(channels)是用于在goroutines之间进行通信的一种特殊类型。以下是通道的基本语法:

- 通道的声明:通道的声明使用

chan关键字,后跟通道元素的类型。例如:

var ch chan int // 声明一个整数类型的通道

- 通道的创建:使用

make函数创建通道。例如:

ch := make(chan int) // 创建一个整数类型的无缓冲通道

ch := make(chan int, 10) // 创建一个整数类型的有缓冲通道,缓冲区大小为10

- 通道的发送和接收:使用

<-运算符进行通道的发送和接收操作。例如:

ch <- 42 // 发送数据到通道ch

val := <-ch // 从通道ch接收数据并赋值给val

- 关闭通道:使用

close函数关闭通道,以向接收方指示不再发送数据。例如:

close(ch)

- 循环接收数据:可以使用

range关键字在循环中接收通道中的数据,直到通道被关闭。例如:

for val := range ch {

fmt.Println("Received:", val)

}

这些是Go中通道的基本语法。通过使用通道,可以实现goroutines之间的同步和数据传输,从而实现并发编程。

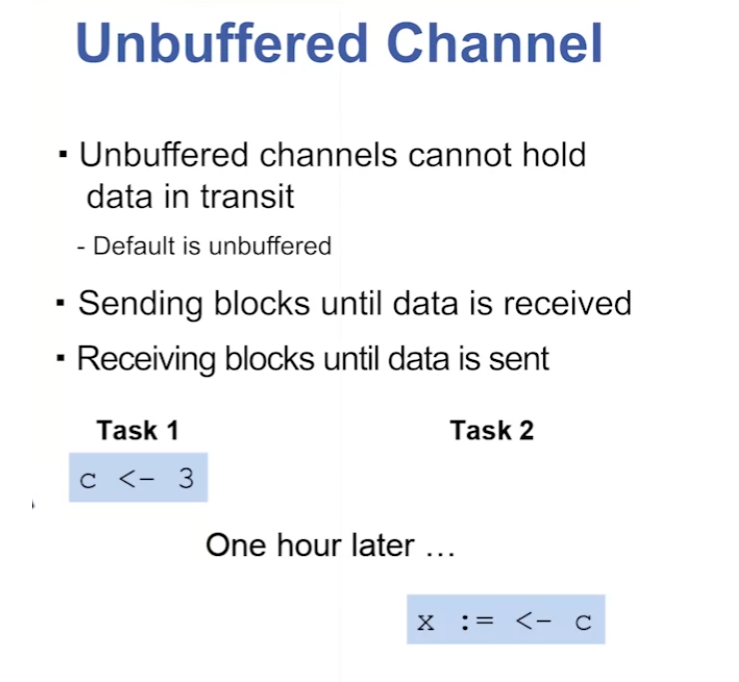

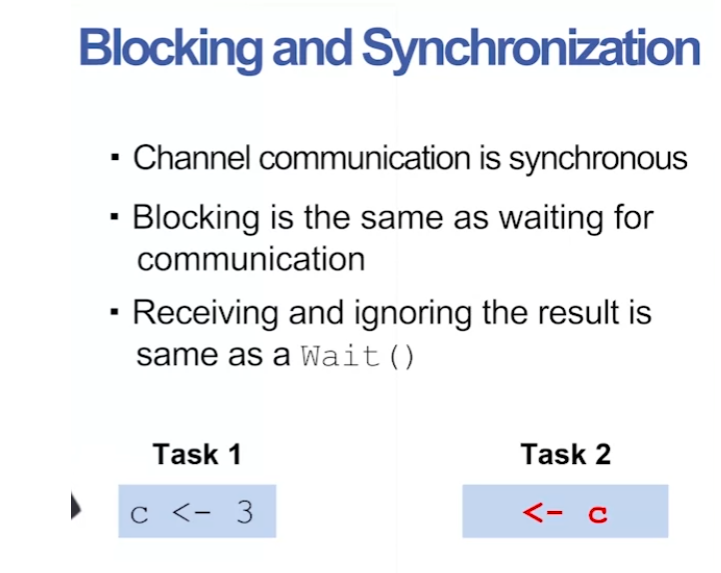

M3.3.2 - Blocking on Channels

Unbuffered Channel

无缓冲通道(Unbuffered Channel)是一种在Go语言中用于goroutines之间同步和通信的机制。它不存储任何元素,必须要有另一个goroutine正在尝试接收数据时,才能成功发送数据。

无缓冲通道的特点包括:

-

阻塞特性:当向无缓冲通道发送数据时,如果没有goroutine正在等待接收数据,发送操作将被阻塞,直到有其他goroutine开始接收数据。同样,当从无缓冲通道接收数据时,如果通道中没有数据可用,接收操作也会被阻塞,直到有其他goroutine开始发送数据。

-

同步性:无缓冲通道在发送和接收操作之间提供了同步机制,确保发送和接收操作发生在不同goroutines之间的同步点上。这种同步性确保了数据的安全传输和可靠性。

-

传递性:无缓冲通道是一种传递性机制,意味着当发送操作完成后,接收操作将立即发生。这种特性对于实现数据的精确传递非常有用,可以确保发送的数据不会被丢失或损坏。

总之,无缓冲通道是一种用于实现goroutines之间同步和通信的重要机制,在Go语言中被广泛应用于并发编程中。

An unbuffered channel in Go is a mechanism for synchronization and communication between goroutines. Unlike buffered channels, it does not store any elements and requires another goroutine to be attempting to receive data for a send operation to succeed.

Key characteristics of unbuffered channels include:

-

Blocking behavior: When data is sent to an unbuffered channel, the send operation blocks until another goroutine is ready to receive the data. Similarly, when data is received from an unbuffered channel, the receive operation blocks until there is data available to be received.

-

Synchronization: Unbuffered channels provide synchronization between send and receive operations, ensuring that they occur at a synchronization point between different goroutines. This synchronization ensures the safe and reliable transfer of data.

-

Immediate handoff: Unbuffered channels operate on a “handoff” principle, meaning that when a send operation completes, the corresponding receive operation happens immediately. This property ensures precise data transfer and prevents data loss or corruption.

In summary, unbuffered channels are a fundamental mechanism for achieving synchronization and communication between goroutines in Go, widely used in concurrent programming.

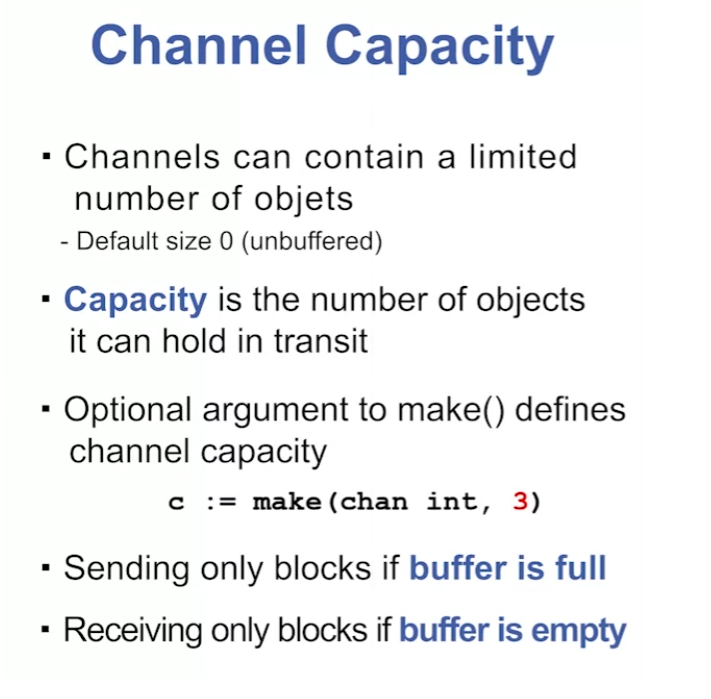

M3.3.3 - Buffered Channels

Buffered Channels

Buffered channels in Go provide a way for goroutines to communicate asynchronously while allowing a limited number of elements to be stored in the channel’s internal buffer. Unlike unbuffered channels, which block on send and receive operations until both sender and receiver are ready, buffered channels allow a degree of decoupling between senders and receivers.

Key characteristics of buffered channels include:

-

Capacity: Buffered channels have a specified capacity, which determines the maximum number of elements that can be stored in the channel’s buffer at any given time. This capacity is specified when the channel is created using the

make()function. -

Non-blocking sends: When a buffered channel has available space in its buffer, a send operation can proceed immediately, without blocking the sender. The send operation only blocks if the buffer is full and there are no available slots for new elements.

-

Non-blocking receives: When a buffered channel contains elements in its buffer, a receive operation can proceed immediately, without blocking the receiver. The receive operation only blocks if the buffer is empty and there are no elements available to be received.

-

Blocking behavior: Buffered channels exhibit blocking behavior when attempting to send data to a full buffer or receive data from an empty buffer. In these cases, the sender or receiver will block until the necessary conditions are met.

-

Decoupling of senders and receivers: Buffered channels provide a degree of decoupling between senders and receivers, allowing them to operate asynchronously. This decoupling can improve the efficiency and concurrency of Go programs by reducing the need for explicit synchronization between goroutines.

In summary, buffered channels offer a flexible and efficient mechanism for asynchronous communication and synchronization between goroutines in Go, allowing for the exchange of data with bounded buffering capacity.

Module 3 Quiz

Peer-graded Assignment: Module 3 Activity

Write a program to sort an array of integers. The program should partition the array into 4 parts, each of which is sorted by a different goroutine. Each partition should be of approximately equal size. Then the main goroutine should merge the 4 sorted subarrays into one large sorted array.

The program should prompt the user to input a series of integers. Each goroutine which sorts ¼ of the array should print the subarray that it will sort. When sorting is complete, the main goroutine should print the entire sorted list.

Submission: Upload your source code for the program.

package main

import (

"fmt"

"sort"

"sync"

)

func main() {

var nums []int

var wg sync.WaitGroup

// Prompt user for input

fmt.Println("Enter a series of integers (press 'x' to finish):")

for {

var num int

_, err := fmt.Scan(&num)

if err != nil {

break

}

nums = append(nums, num)

}

// Divide the array into 4 parts

partSize := len(nums) / 4

parts := make([][]int, 4)

for i := 0; i < 4; i++ {

start := i * partSize

end := start + partSize

if i == 3 { // Adjust the last part size to include remaining elements

end = len(nums)

}

parts[i] = nums[start:end]

}

// Print subarrays to be sorted by each goroutine

fmt.Println("Subarrays to be sorted by each goroutine:")

for i, part := range parts {

fmt.Printf("Goroutine %d: %v\n", i+1, part)

}

// Sort each subarray in parallel

for i := range parts {

wg.Add(1)

go func(i int) {

defer wg.Done()

sort.Ints(parts[i])

}(i)

}

wg.Wait()

// Merge sorted subarrays

merged := merge(parts)

// Print the entire sorted list

fmt.Println("Sorted array:", merged)

}

func merge(parts [][]int) []int {

var result []int

for _, part := range parts {

result = append(result, part...)

}

sort.Ints(result)

return result

}

MODULE 4: SYNCHRONIZED COMMUNICATION

This last module ties together the various features – including threads, concurrency, and synchronization – covered in this course. The week’s programming assignment requires you to use concurrent algorithms in the implementation of the “dining philosopher’s problem" and then address the ensuing synchronization issues.

Learning Objectives

- Identify the uses of channels and how they are implemented in code.

- Identify the purpose of the “select” keyword and the meaning of “default clause.”

- Identify the definition of “mutual exclusion” and characteristics of a “deadlock."

- Develop a goroutine that makes use of concurrent algorithms and addresses synchronization issues.

M4.1.1 - Blocking on Channels

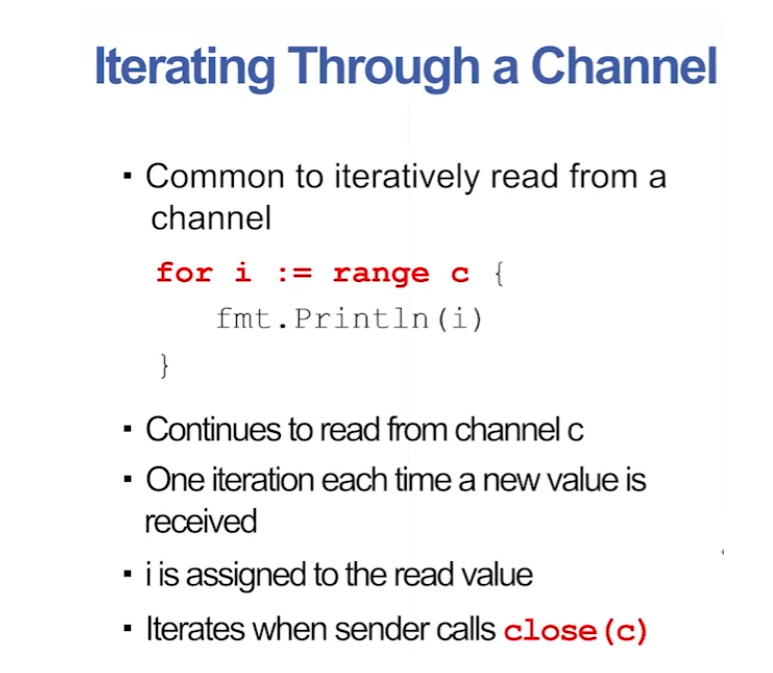

Iterating Through a Channel

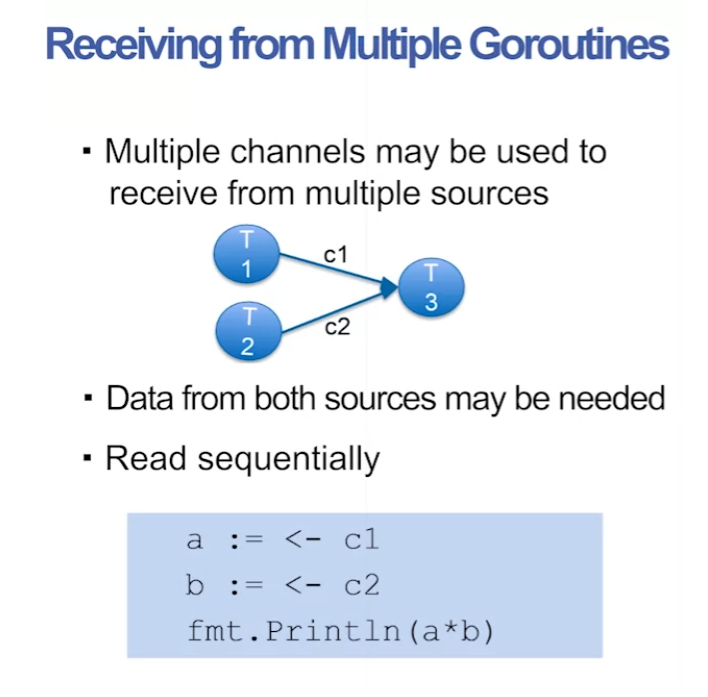

Receiving from Multiple Goroutines

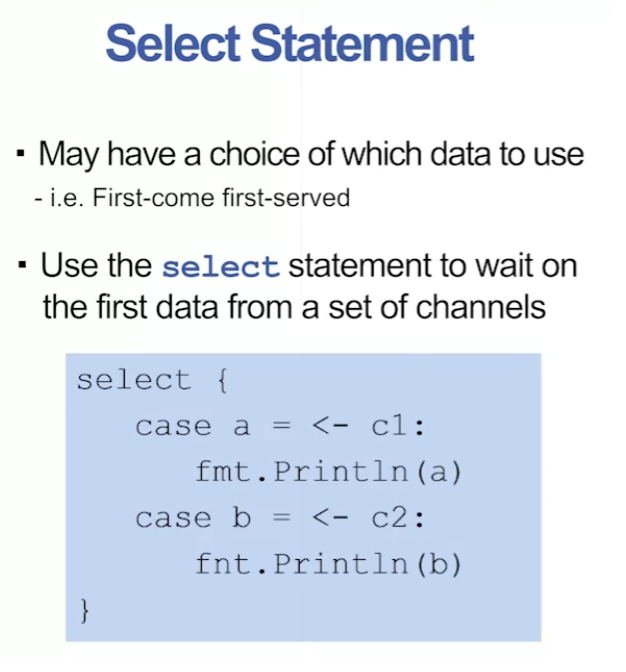

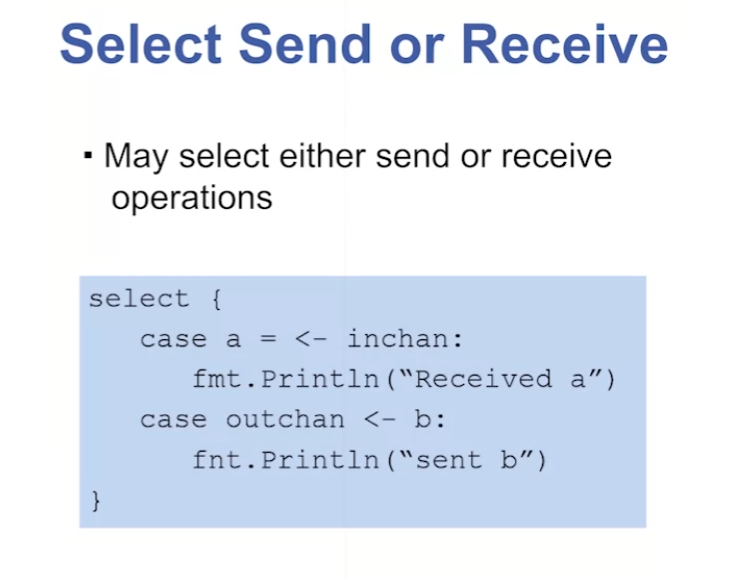

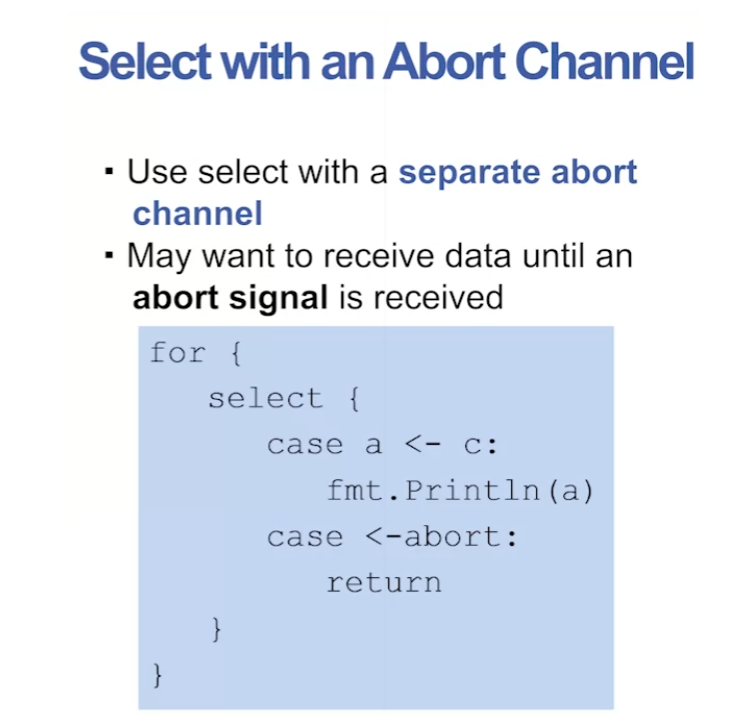

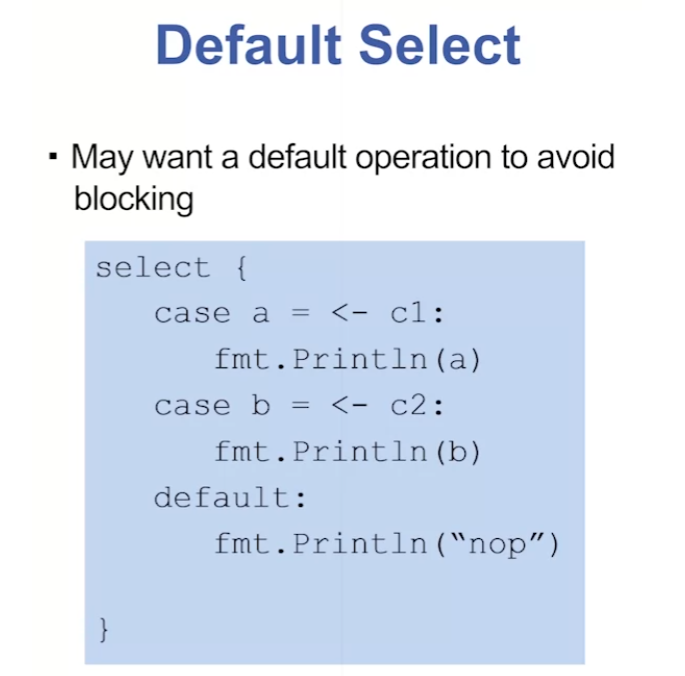

M4.1.2 - Select

在 Go 中,select 语句用于从多个通信操作中选择一个执行。它类似于 switch 语句,但是 select 用于通信操作而不是用于控制流程。select 语句使得一个 Go 协程可以等待多个通信操作中的任意一个完成。

select 语法如下所示:

select {

case communicationClause1:

// 执行 communicationClause1 中的代码

case communicationClause2:

// 执行 communicationClause2 中的代码

// 可以有更多的通信操作...

default:

// 当没有任何通信操作准备就绪时执行的代码

}

其中,每个通信操作称为一个 case,通常包括发送操作、接收操作或者 default 分支。当 select 语句执行时,它会按照顺序检查每个 case,并执行第一个准备就绪的通信操作。如果多个 case 同时准备就绪,那么 select 会随机选择一个进行执行。

select 语句通常与通道(channel)一起使用,用于在多个通道之间进行选择。它可以用于实现超时、非阻塞的发送或接收操作等场景。

当需要在多个通道之间进行选择时,可以使用 select 语句。下面是一个简单的示例,演示了如何使用 select 来从两个通道中选择接收数据:

package main

import (

"fmt"

"time"

)

func main() {

// 创建两个通道

ch1 := make(chan string)

ch2 := make(chan string)

// 向 ch1 发送数据的 Go 协程

go func() {

time.Sleep(2 * time.Second)

ch1 <- "Hello from ch1"

}()

// 向 ch2 发送数据的 Go 协程

go func() {

time.Sleep(1 * time.Second)

ch2 <- "Hello from ch2"

}()

// 使用 select 语句选择从两个通道中接收数据

select {

case msg1 := <-ch1:

fmt.Println("Received message from ch1:", msg1)

case msg2 := <-ch2:

fmt.Println("Received message from ch2:", msg2)

}

}

在上面的示例中,我们创建了两个通道 ch1 和 ch2,然后启动了两个 Go 协程,分别向这两个通道发送数据。接着,在 main 函数中使用 select 语句,它会等待从 ch1 或 ch2 接收到数据,然后打印接收到的消息。

这样,即使 ch2 发送的消息先到达,select 也会立即选择接收来自 ch2 的消息并打印。

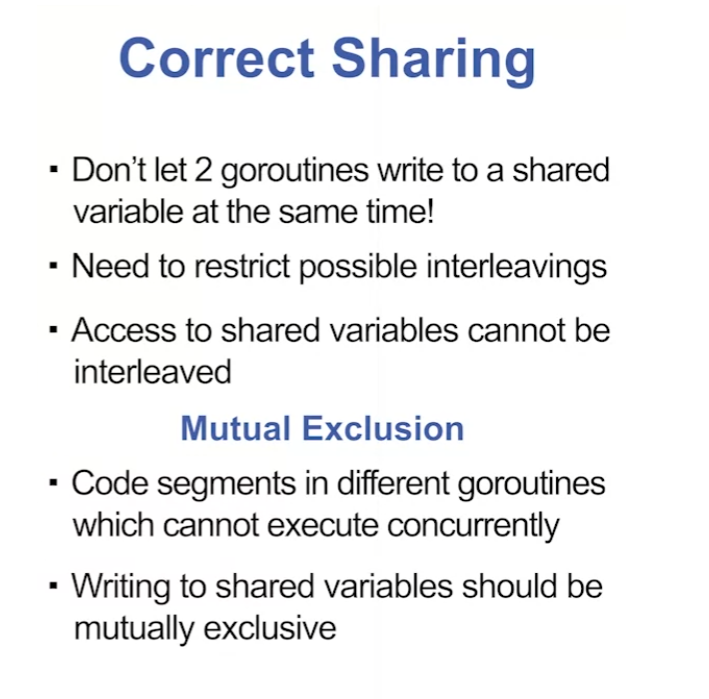

M4.2.1 - Mutual Exclusion

M4.2.2 - Mutex

M4.2.3 - Mutex Methods

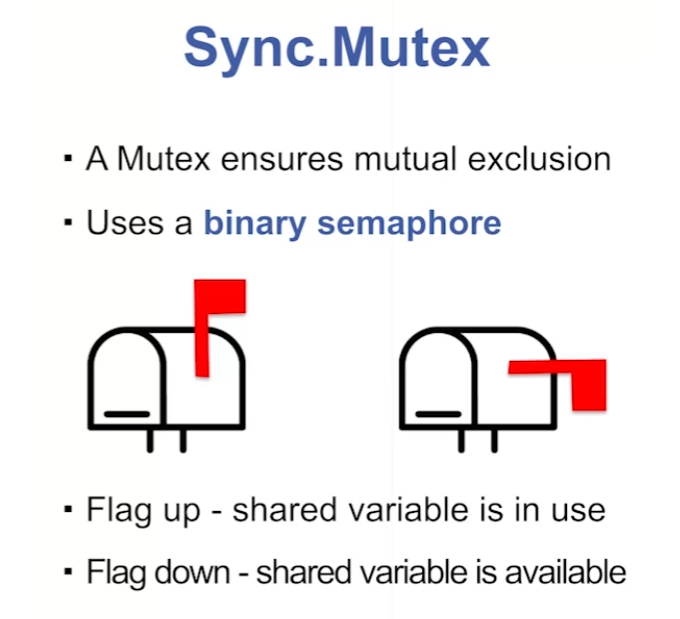

在Go语言中,互斥锁(Mutual Exclusion)通常用于保护共享资源,以确保在任何给定时刻只有一个goroutine可以访问该资源,从而防止并发访问导致的数据竞争和不确定行为。互斥锁可以确保在任何时刻只有一个goroutine可以执行被保护的临界区代码。

Go语言中的标准库中提供了sync包,其中包含了Mutex类型,用于实现互斥锁。通过Mutex类型可以创建互斥锁,并使用其Lock和Unlock方法来保护临界区代码块。

下面是一个使用互斥锁的示例:

package main

import (

"fmt"

"sync"

)

var counter int

var mutex sync.Mutex

func increment() {

// 加锁保护临界区代码

mutex.Lock()

counter++

// 解锁

mutex.Unlock()

}

func main() {

var wg sync.WaitGroup

// 启动多个goroutine并发地增加计数器的值

for i := 0; i < 10; i++ {

wg.Add(1)

go func() {

defer wg.Done()

increment()

}()

}

// 等待所有goroutine完成

wg.Wait()

fmt.Println("Counter:", counter)

}

在上面的示例中,increment函数用于增加counter计数器的值。在increment函数内部,通过调用mutex.Lock()方法来获取互斥锁,保护了counter的访问。在临界区代码执行完毕后,通过mutex.Unlock()方法释放互斥锁。

通过使用互斥锁,我们确保了在任何时刻只有一个goroutine可以执行increment函数中的临界区代码,从而避免了数据竞争和不确定行为。

M4.3.1 - Once Synchronization

在Go语言中,sync包提供了Once类型,用于在并发程序中执行一次初始化操作。Once类型保证初始化函数仅在第一次被调用时执行,之后调用时会被忽略,从而确保初始化操作只会执行一次。

Once类型的常见用法是在并发程序中执行全局变量或单例对象的初始化操作,以确保初始化操作不会被重复执行。

下面是一个使用Once类型的示例:

package main

import (

"fmt"

"sync"

)

var (

initialized bool

initializing sync.Once

)

func initGlobalData() {

fmt.Println("Initializing global data...")

// 进行一次性的全局数据初始化操作

initialized = true

}

func main() {

// 多个goroutine同时调用initGlobalData函数

for i := 0; i < 10; i++ {

go func() {

initializing.Do(initGlobalData)

}()

}

// 等待所有goroutine完成

// 注意:这里只是简单等待,实际生产环境中可能需要更复杂的等待逻辑

// 这里主要演示Once的使用,不关注等待的实现细节

fmt.Println("Waiting for initialization...")

}

在上面的示例中,initGlobalData函数用于执行全局数据的初始化操作。initializing变量是一个sync.Once类型的实例,用于确保initGlobalData函数仅在第一次调用时执行。

在main函数中,启动了多个goroutine同时调用initializing.Do(initGlobalData),但由于sync.Once类型的特性,initGlobalData函数只会被执行一次。因此,全局数据的初始化操作仅会在第一次调用时执行,之后的调用会被忽略。

通过使用sync.Once类型,我们可以方便地实现并发程序中的一次性初始化操作,避免了重复执行初始化代码的问题。

M4.3.2 - Deadlock

在Go中,死锁(deadlock)是一种并发编程中常见的问题,它发生在多个goroutine之间相互等待彼此持有的资源,导致所有goroutine都无法继续执行的情况。

死锁通常发生在以下情况下:

-

互斥锁未正确释放:如果一个goroutine获取了某个互斥锁,但在释放之前发生了某种异常或逻辑错误,导致锁无法被其他goroutine获取而造成死锁。

-

通道阻塞:当一个goroutine试图向一个已满的通道发送数据,或者尝试从一个空的通道接收数据时,它会被阻塞。如果没有其他goroutine来接收或发送数据,那么就会导致所有涉及的goroutine都被阻塞而无法继续执行。

-

循环依赖:当多个goroutine之间存在循环依赖关系时,它们可能会出现相互等待对方释放资源的情况,从而导致死锁。

下面是一个简单的死锁示例:

package main

import "fmt"

func main() {

ch := make(chan int)

// 发送goroutine向通道发送数据,但没有接收者

go func() {

ch <- 1

}()

// 主goroutine尝试从通道接收数据,但没有发送者

<-ch

fmt.Println("This line will never be reached")

}

在上面的示例中,主goroutine尝试从通道ch接收数据,但是在此之前并没有goroutine向通道ch发送数据。因此,主goroutine会一直阻塞在接收操作上,导致程序无法继续执行,从而产生了死锁。

要解决死锁问题,通常需要仔细检查代码中的并发逻辑,确保互斥锁的正确使用、通道的合理关闭以及避免循环依赖等情况。另外,使用Go语言内置的工具如go vet、go race等可以帮助检测潜在的死锁问题。

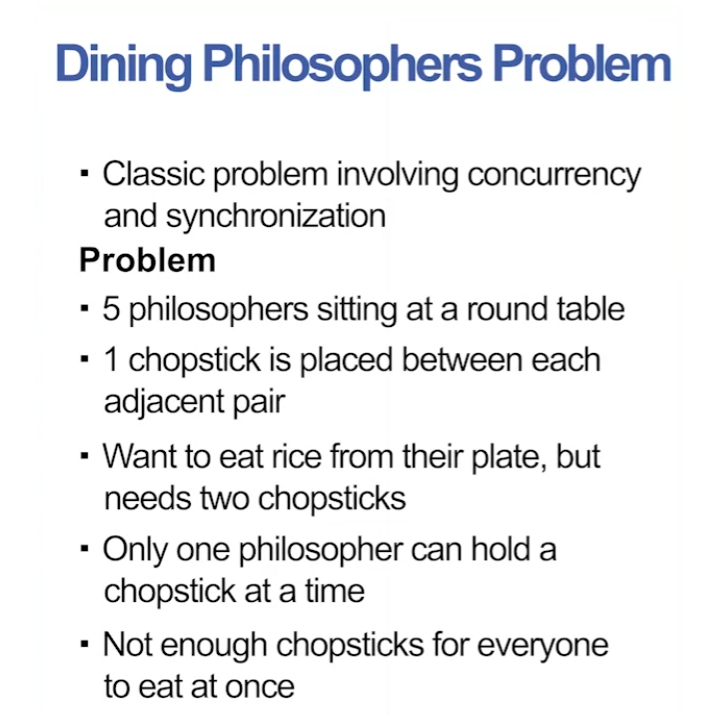

M4.3.3 - Dining Philosophers

哲学家进餐问题是一个经典的并发编程问题,通常用来展示并发编程中的死锁和资源竞争等问题。问题的描述如下:

假设有五位哲学家坐在圆桌周围,每位哲学家前面有一碗面和一只叉子。每位哲学家的生活方式是交替地进行思考和进餐,但为了进餐,他们需要拿起左右两边的叉子。叉子只能由坐在其旁边的哲学家拿起,而当一位哲学家使用完叉子后,其他哲学家才能继续使用。

这个问题的关键在于如何避免死锁和资源竞争,以确保所有哲学家都能进餐,同时避免产生竞争条件。

在Go语言中,可以使用goroutine和通道来模拟哲学家进餐问题。每个哲学家可以表示为一个goroutine,而叉子可以表示为一个互斥锁或信号量。通过合理设计并发结构和使用同步原语,可以确保所有哲学家都能安全地进餐,避免产生死锁和资源竞争。

以下是一个简单的示例,展示了如何在Go中实现哲学家进餐问题:

package main

import (

"fmt"

"sync"

)

const numPhilosophers = 5

var forks [numPhilosophers]sync.Mutex

func philosopher(id int, wg *sync.WaitGroup) {

defer wg.Done()

leftFork := id

rightFork := (id + 1) % numPhilosophers

// 死循环,模拟哲学家的思考和进餐过程

for {

think(id)

eat(id, leftFork, rightFork)

}

}

func think(id int) {

fmt.Printf("Philosopher %d is thinking\n", id)

}

func eat(id, leftFork, rightFork int) {

fmt.Printf("Philosopher %d is hungry and trying to pick up forks\n", id)

// 先获取左边的叉子

forks[leftFork].Lock()

fmt.Printf("Philosopher %d picked up left fork\n", id)

// 然后获取右边的叉子

forks[rightFork].Lock()

fmt.Printf("Philosopher %d picked up right fork and started eating\n", id)

// 模拟进餐过程

fmt.Printf("Philosopher %d finished eating and put down forks\n", id)

forks[rightFork].Unlock()

forks[leftFork].Unlock()

}

func main() {

var wg sync.WaitGroup

wg.Add(numPhilosophers)

// 创建并启动每位哲学家的goroutine

for i := 0; i < numPhilosophers; i++ {

go philosopher(i, &wg)

}

// 等待所有哲学家完成进餐

wg.Wait()

}

在这个示例中,每位哲学家使用两个互斥锁(表示叉子)来模拟进餐过程。每位哲学家在思考和进餐之间交替,并使用互斥锁来保护对叉子的访问,从而避免了竞争条件和死锁的发生。

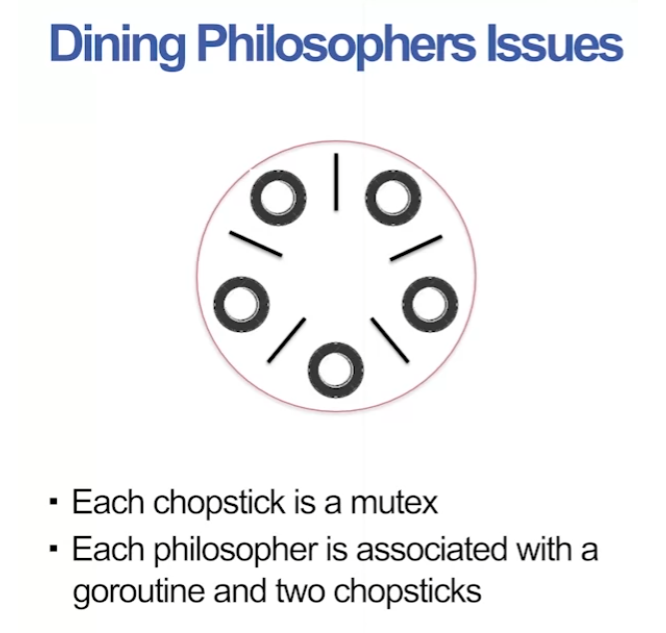

Module 4 Quiz

Peer-graded Assignment: Module 4 Activity

Implement the dining philosopher’s problem with the following constraints/modifications.

- There should be 5 philosophers sharing chopsticks, with one chopstick between each adjacent pair of philosophers.

- Each philosopher should eat only 3 times (not in an infinite loop as we did in lecture)

- The philosophers pick up the chopsticks in any order, not lowest-numbered first (which we did in lecture).

- In order to eat, a philosopher must get permission from a host which executes in its own goroutine.

- The host allows no more than 2 philosophers to eat concurrently.

- Each philosopher is numbered, 1 through 5.

- When a philosopher starts eating (after it has obtained necessary locks) it prints “starting to eat ” on a line by itself, where is the number of the philosopher.

- When a philosopher finishes eating (before it has released its locks) it prints “finishing eating ” on a line by itself, where is the number of the philosopher.

Submission: Upload your source code for the program.

package main

import (

"fmt"

"sync"

)

type ChopStick struct{ sync.Mutex }

type Philosopher struct {

number int

leftChopstick *ChopStick

rightChopstick *ChopStick

host chan bool

}

func (p Philosopher) eat() {

for i := 0; i < 3; i++ {

p.host <- true

p.leftChopstick.Lock()

p.rightChopstick.Lock()

fmt.Printf("starting to eat %d\n", p.number)

fmt.Printf("finishing eating %d\n", p.number)

p.rightChopstick.Unlock()

p.leftChopstick.Unlock()

<-p.host

}

}

func main() {

chopSticks := make([]*ChopStick, 5)

for i := 0; i < 5; i++ {

chopSticks[i] = new(ChopStick)

}

host := make(chan bool, 2)

philosophers := make([]*Philosopher, 5)

for i := 0; i < 5; i++ {

philosophers[i] = &Philosopher{i + 1, chopSticks[i], chopSticks[(i+1)%5], host}

}

var wg sync.WaitGroup

wg.Add(5)

for i := 0; i < 5; i++ {

go func(p *Philosopher) {

defer wg.Done()

p.eat()

}(philosophers[i])

}

wg.Wait()

}

Output

$ go run dining.go

starting to eat 5

finishing eating 5

starting to eat 2

finishing eating 2

starting to eat 4

finishing eating 4

starting to eat 1

finishing eating 1

starting to eat 2

finishing eating 2

starting to eat 5

finishing eating 5

starting to eat 4

finishing eating 4

starting to eat 1

finishing eating 1

starting to eat 3

finishing eating 3

starting to eat 5

finishing eating 5

starting to eat 4

finishing eating 4

starting to eat 2

finishing eating 2

starting to eat 3

finishing eating 3

starting to eat 3

starting to eat 1

finishing eating 1

finishing eating 3

后记

2024年3月17日下午16点08分开始学习这门课,截至2024年3月17日21点31分,学习完module3,希望明天完成这门课程。

次日更新:

2024年3月18日12点24分完成这门课的学习。